These materials are for informational purposes only and do not constitute legal advice. You should contact an attorney to obtain advice with respect to the development of a research app and any applicable laws.

Active Tasks

Active tasks invite users to perform activities under partially controlled conditions while iPhone sensors are used to collect data. For example, an active task for analyzing gait and balance might ask the user to walk a short distance, while collecting accelerometer data on the device.

Predefined Active Tasks

ResearchKit™ includes a number of predefined tasks, which fall into seven categories: motor activities, fitness, cognition, speech, hearing, hand dexterity, and vision. Table 1 summarizes each task and describes the data it generates.

| CATEGORY | TASK | SENSOR | DATA COLLECTED |
| --- | --- | --- | --- |
| Motor Activities | Range of Motion | Accelerometer, Gyroscope | Device motion |
| | Gait and Balance | Accelerometer, Gyroscope | Device motion, Pedometer |
| | Tapping Speed | Multi-Touch display, Accelerometer (optional) | Touch activity |
| Fitness | Fitness | GPS, Gyroscope | Device motion, Pedometer, Location, Heart rate |
| | Timed Walk | GPS, Gyroscope | Device motion, Pedometer, Location |
| Cognition | Spatial Memory | Multi-Touch display, Accelerometer (optional) | Touch activity, Correct answer, Actual sequences |
| | Stroop Test | Multi-Touch display | Actual color, Actual text, User selection, Completion time |
| | Trail Making Test | Multi-Touch display | Completion time, Touch activity |
| | Paced Serial Addition Test (PSAT) | Multi-Touch display | Addition results from user |
| | Tower of Hanoi | Multi-Touch display | Every move taken by the user |
| | Reaction Time | Accelerometer, Gyroscope | Device motion |
| Speech | Sustained Phonation | Microphone | Uncompressed audio |
| | Speech Recognition | Microphone | Raw audio recording; transcription in the form of an SFTranscription object; edited transcript (if any, by the user) |
| | Speech-in-Noise | Microphone | Raw audio recording; transcription in the form of an SFTranscription object; edited transcript (if any, by the user). This can be used to calculate the Speech Reception Threshold (SRT) for a user. |
| Hearing | Environment SPL | Microphone | Environment sound pressure level in dBA |
| | Tone Audiometry | AirPods, Headphones | Minimum amplitude for the user to recognize the sound |
| | dBHL Tone Audiometry | AirPods, Headphones | Hearing threshold in dB HL scale, User response timestamps |
| Hand Dexterity | 9-Hole Peg | Multi-Touch display | Completion time, Move distance |
| Vision | Amsler Grid | Multi-Touch display | Touch activity, Eye side, Areas of distortions as annotated by the user |

You can disable the instruction or completion steps that are automatically included in the framework by passing appropriate options when you create an active task. See the ORKPredefinedTaskOption constants for the available options.

You can use option flags to exclude data collection for data types that are not needed for your study. For example, to perform the fitness task without recording heart rate data, use the ORKPredefinedTaskOptionExcludeHeartRate option.

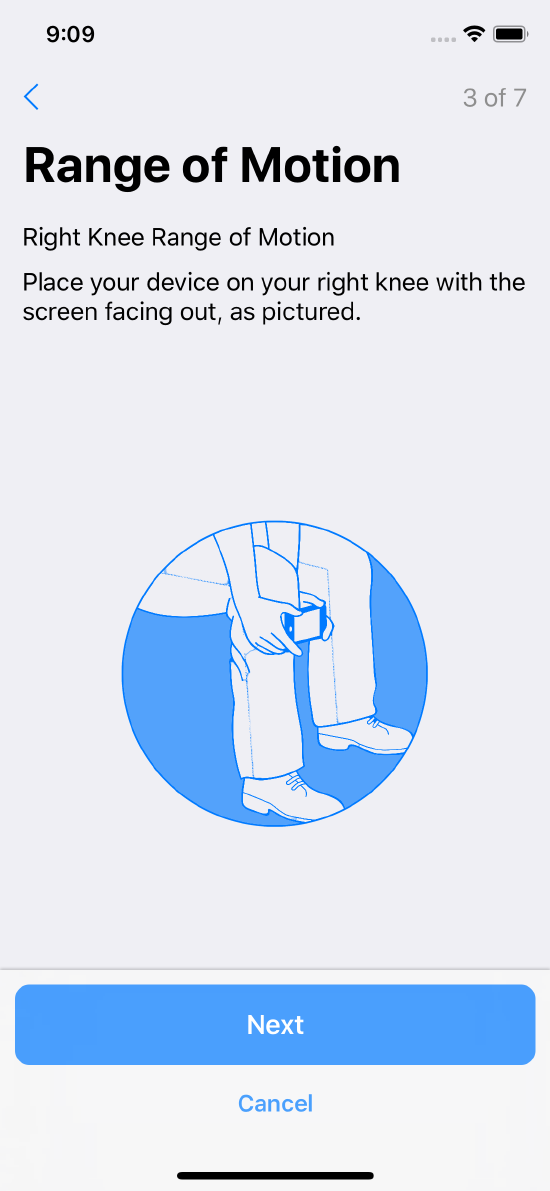

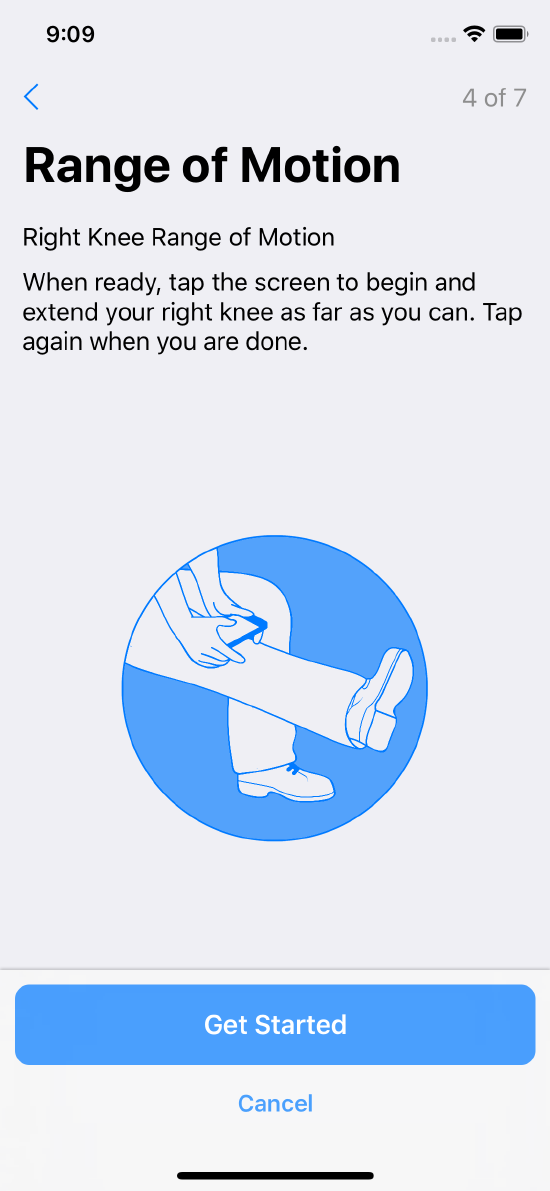

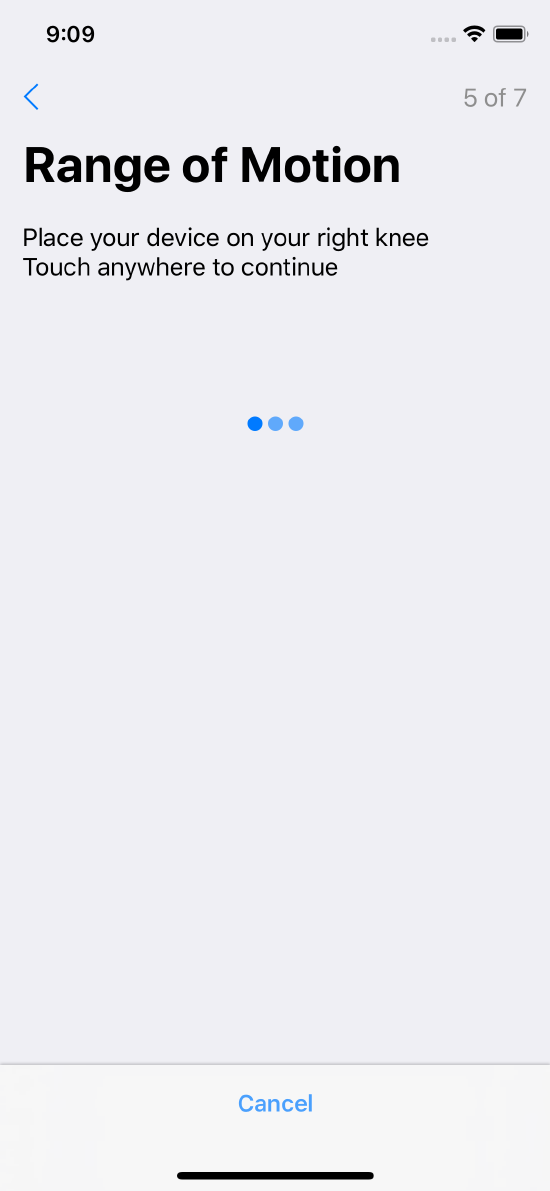

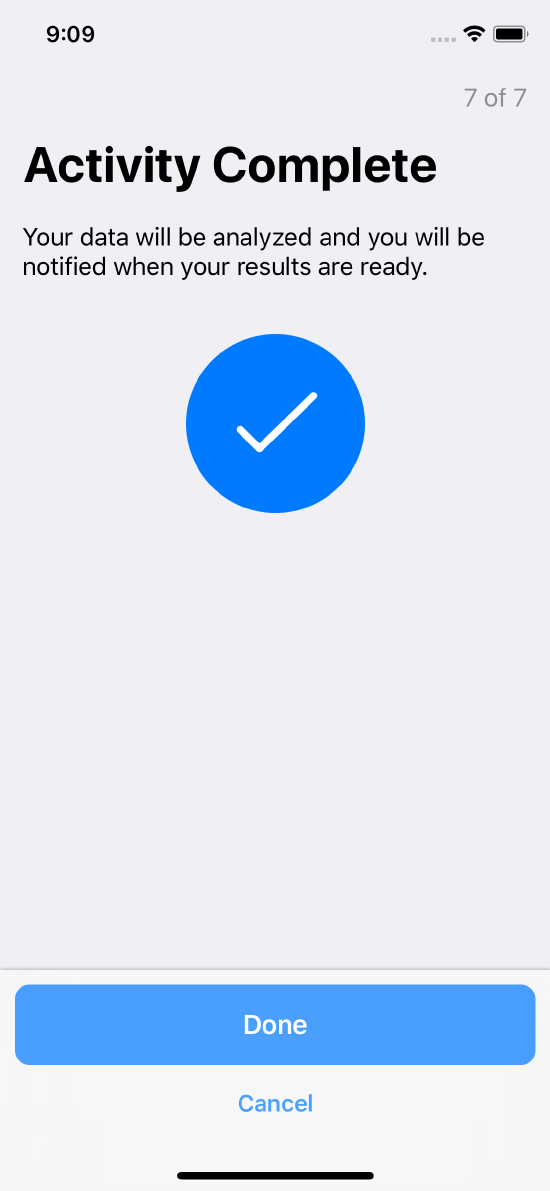

Range of Motion

In the range of motion task, participants follow movement instructions while accelerometer and gyroscope data is captured to measure flexed and extended positions for the knee or shoulder. Range of motion steps for the knee are shown in Figure 1.

Instruction step introducing the task

Instruction step introducing the task

Specific instructions with an illustration

Further instructions with an illustration

A touch anywhere step

A further touch anywhere step

Confirms task completion

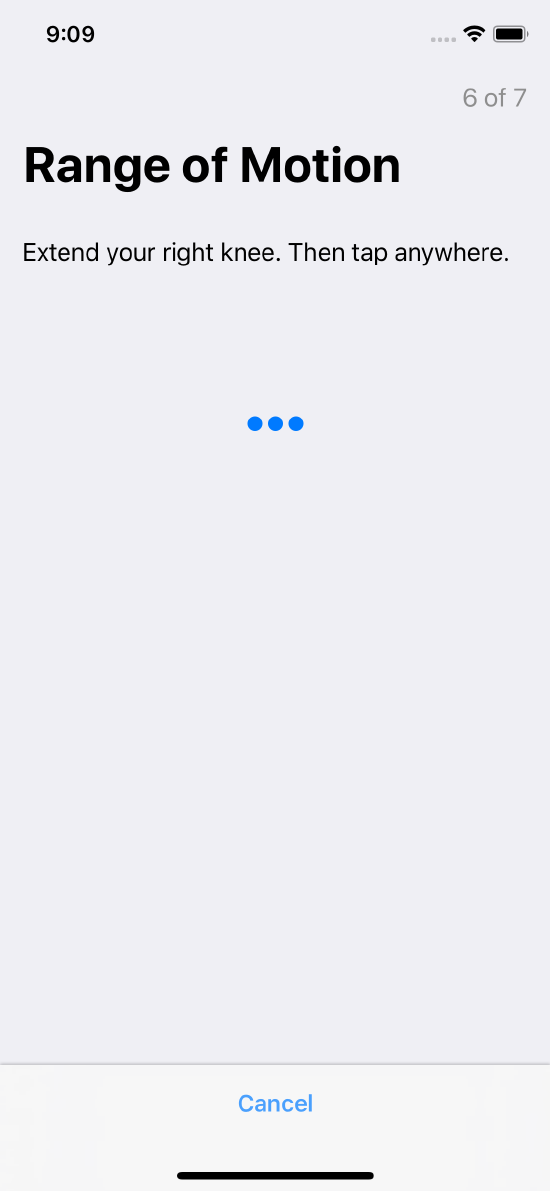

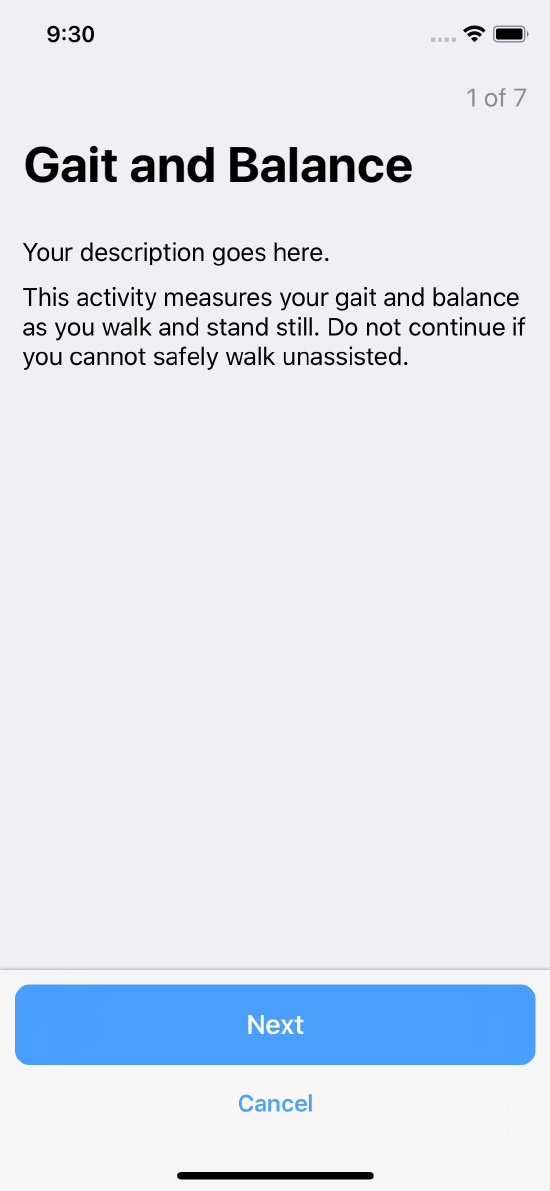

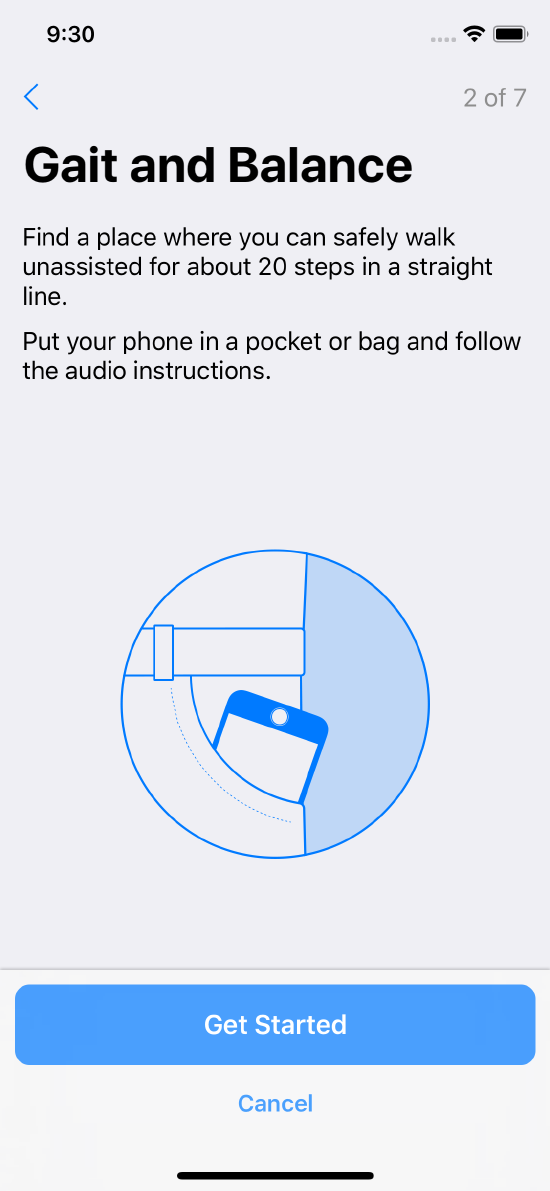

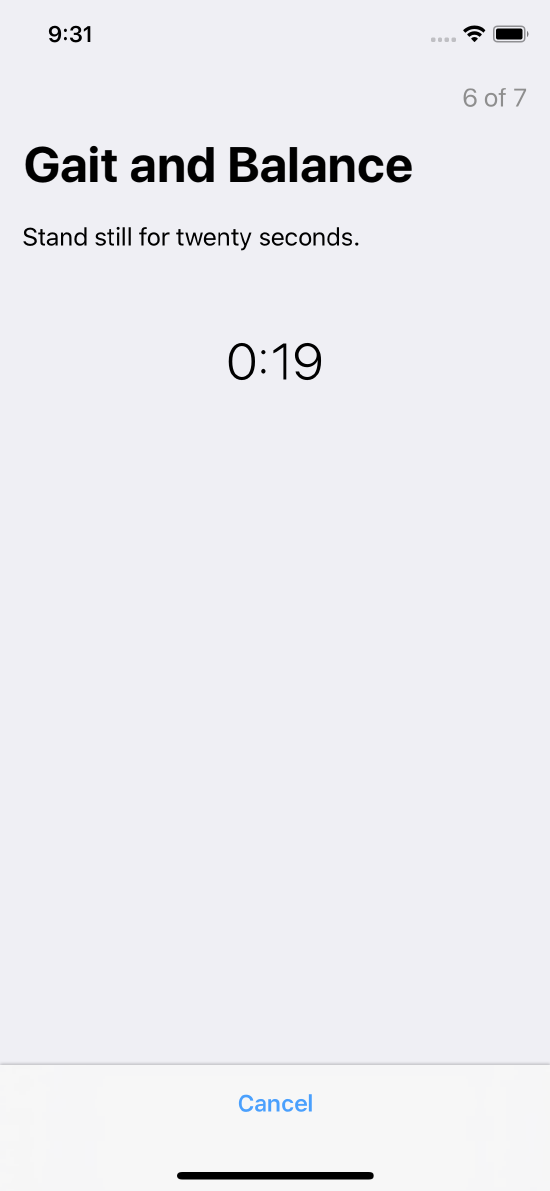

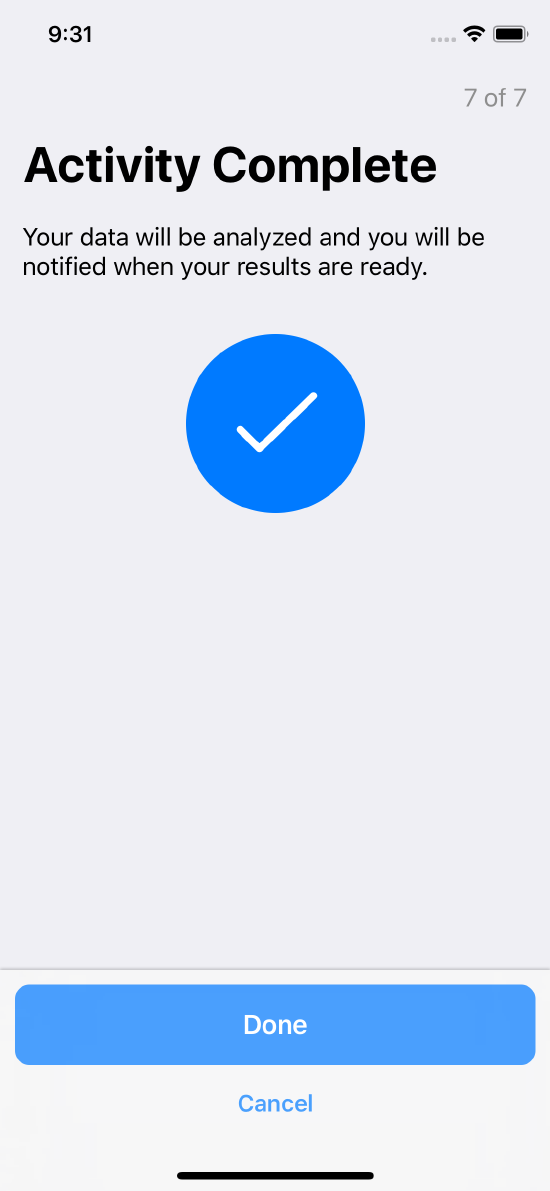

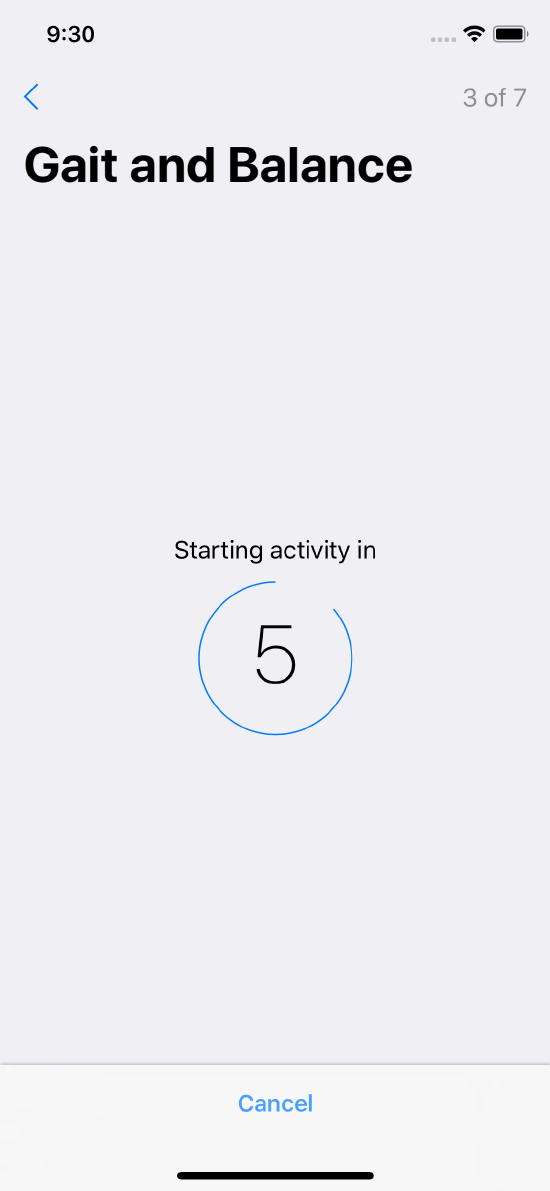

Gait and Balance

In the gait and balance task (see the method ORKOrderedTask shortWalkTaskWithIdentifier:intendedUseDescription:numberOfStepsPerLeg:restDuration:options:), the user walks for a short distance, which may be indoors. You might use this semi-controlled task to collect objective measurements that can be used to estimate stride length, smoothness, sway, and other aspects of the participant’s walking.

Gait and balance steps are shown in Figure 2.

Instruction step introducing the task

Instruction step introducing the task

Instruction step introducing the task

Instruction step introducing the task

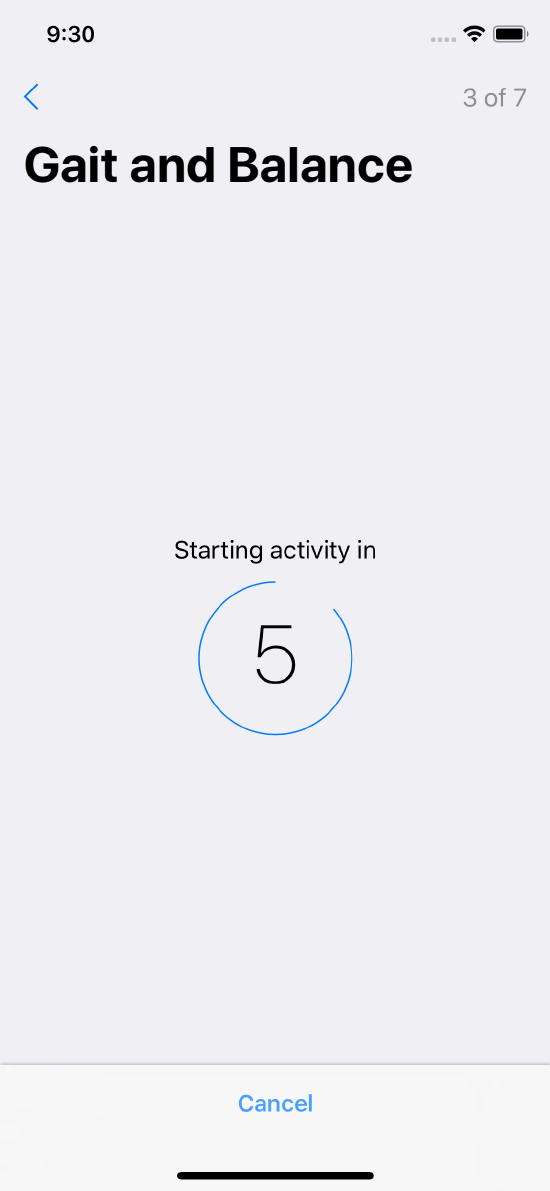

Count down a specified duration into the task

Count down a specified duration into the task

Asking user to walk

Asking user to walk

Asking user to walk

Asking user to walk

Asking user to rest

Asking user to rest

Confirms task completion

Confirms task completion

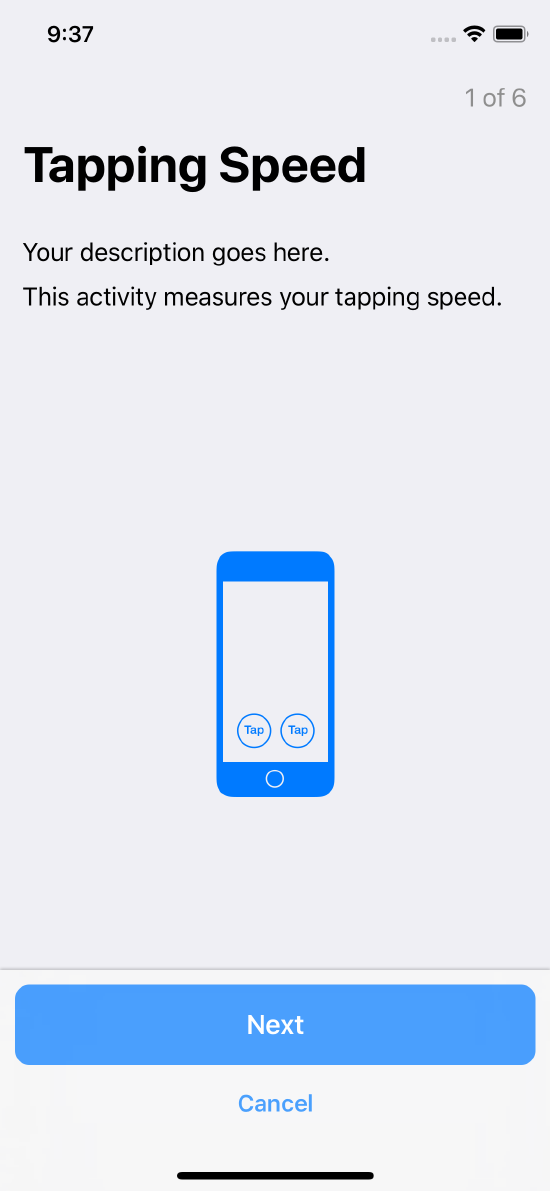

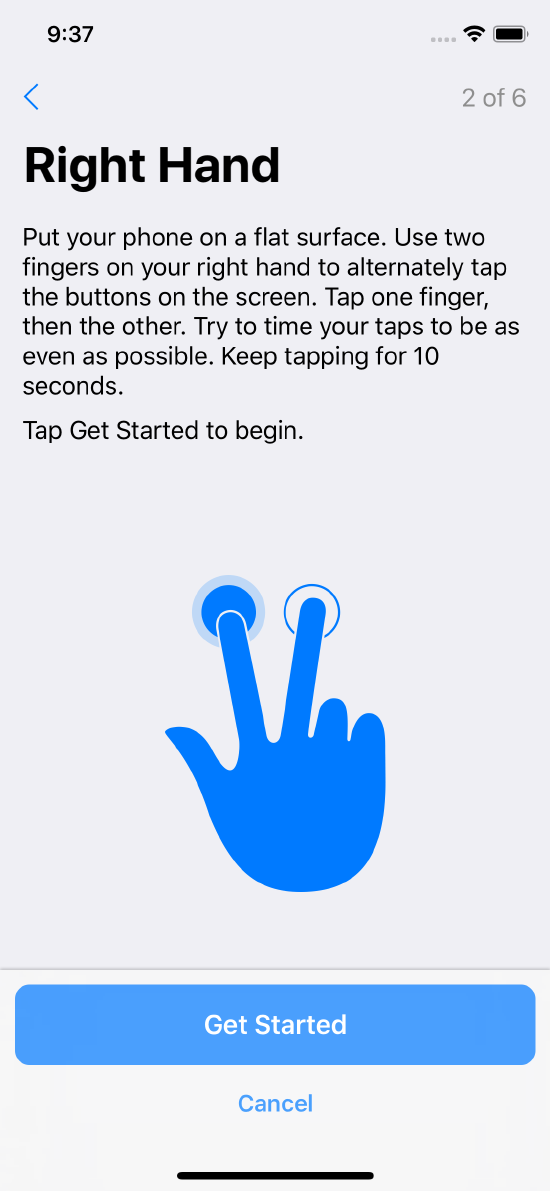

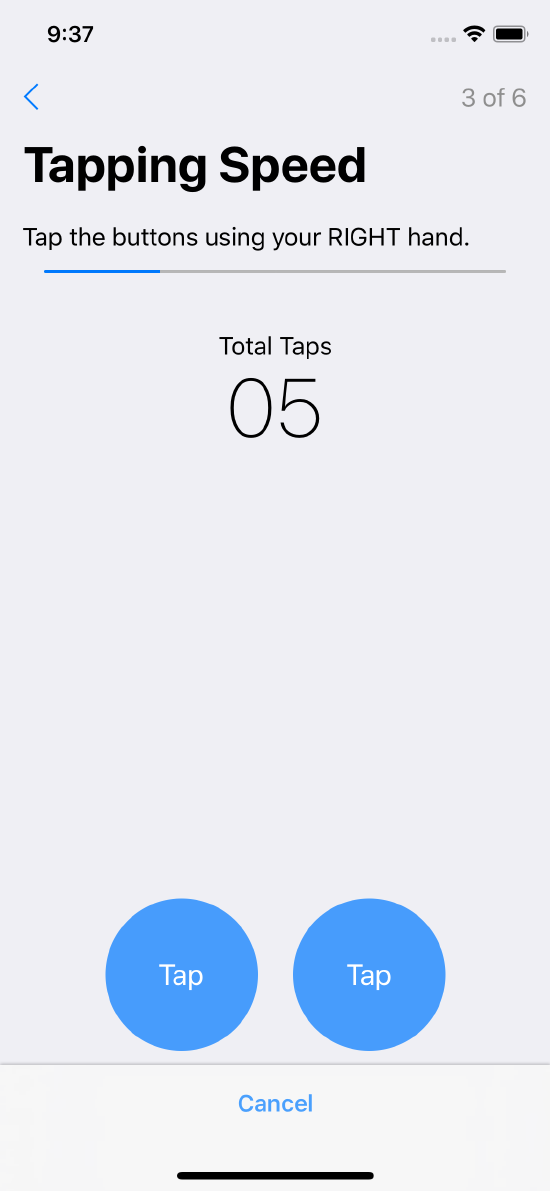

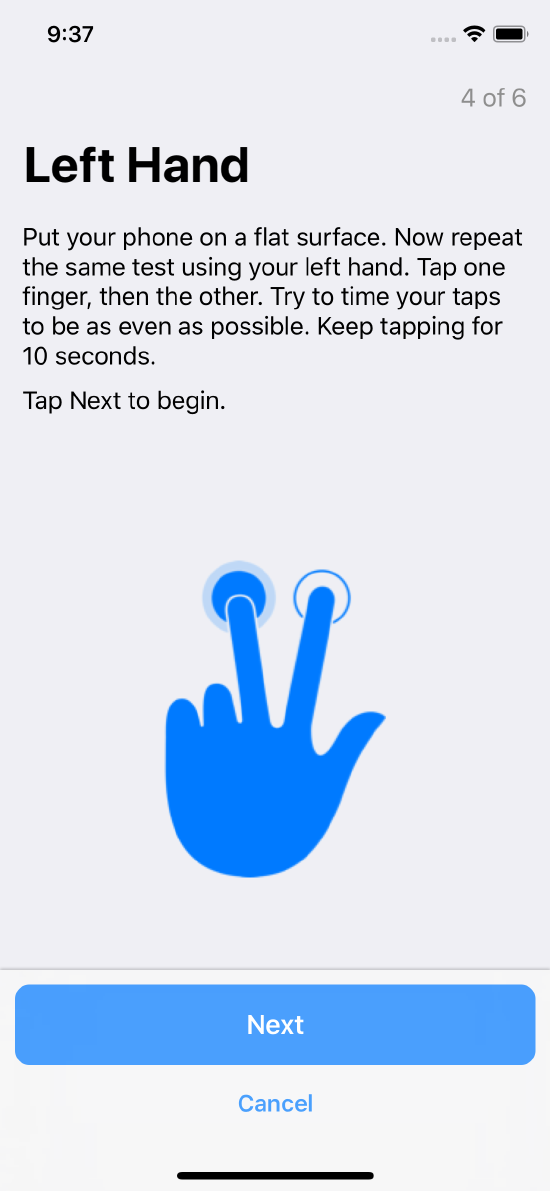

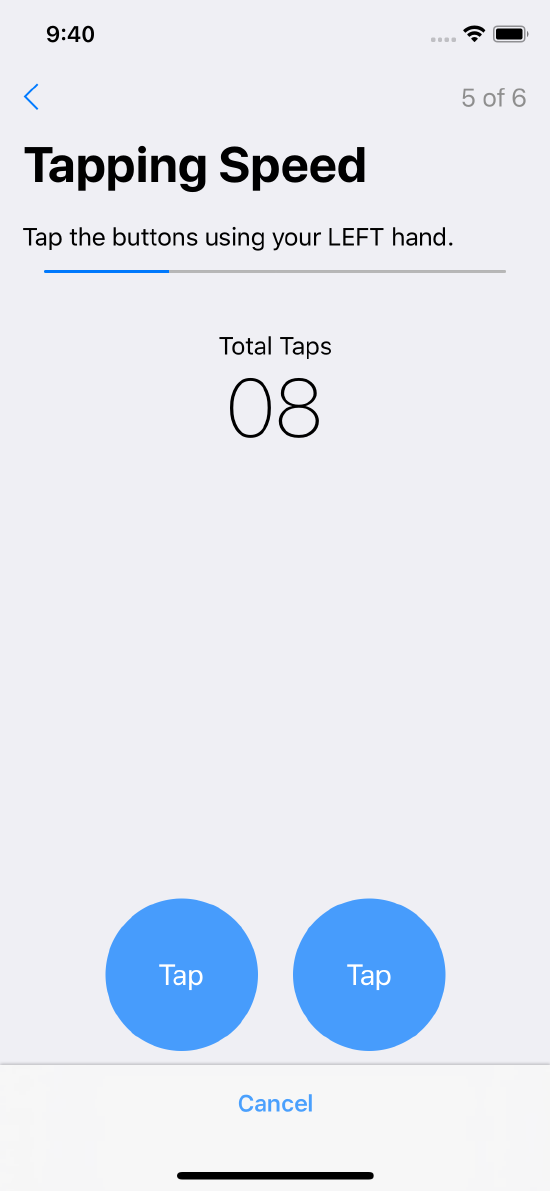

Tapping Speed

In the tapping task (see the method ORKOrderedTask twoFingerTappingIntervalTaskWithIdentifier:intendedUseDescription:duration:handOptions:options:), the user rapidly alternates between tapping two targets on the touch screen. The resulting touch data can be used to assess basic motor capabilities such as speed, accuracy, and rhythm.

Touch data, and optionally accelerometer data from CoreMotion in iOS, are collected using public APIs. No analysis is performed by the ResearchKit framework on the data.
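Because the framework leaves analysis to you, one possible starting point is to summarize the tap timestamps. The following is a minimal sketch, assuming the timestamps have already been extracted from the task result into an array of seconds in ascending order; the TapSummary type and the summarizeTaps function are hypothetical names, not part of ResearchKit.

```swift
import Foundation

/// Summary statistics for one tapping run (hypothetical type, not part of ResearchKit).
struct TapSummary {
    let tapsPerSecond: Double
    let meanInterval: Double
    let intervalStdDev: Double
}

/// Summarizes tap timestamps (seconds, ascending). Returns nil when fewer
/// than two taps were recorded, because no interval can be computed.
func summarizeTaps(_ timestamps: [Double]) -> TapSummary? {
    guard timestamps.count >= 2,
          let first = timestamps.first,
          let last = timestamps.last,
          last > first else { return nil }

    // Inter-tap intervals: differences between consecutive timestamps.
    let intervals = zip(timestamps.dropFirst(), timestamps).map { $0 - $1 }
    let mean = intervals.reduce(0, +) / Double(intervals.count)

    // Population standard deviation of the intervals, a rough rhythm measure.
    let squaredDeviations = intervals.map { ($0 - mean) * ($0 - mean) }
    let variance = squaredDeviations.reduce(0, +) / Double(intervals.count)

    return TapSummary(
        tapsPerSecond: Double(intervals.count) / (last - first),
        meanInterval: mean,
        intervalStdDev: variance.squareRoot()
    )
}
```

A lower interval standard deviation suggests a steadier rhythm; whether that is a meaningful measure for your study is a design decision this sketch does not settle.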

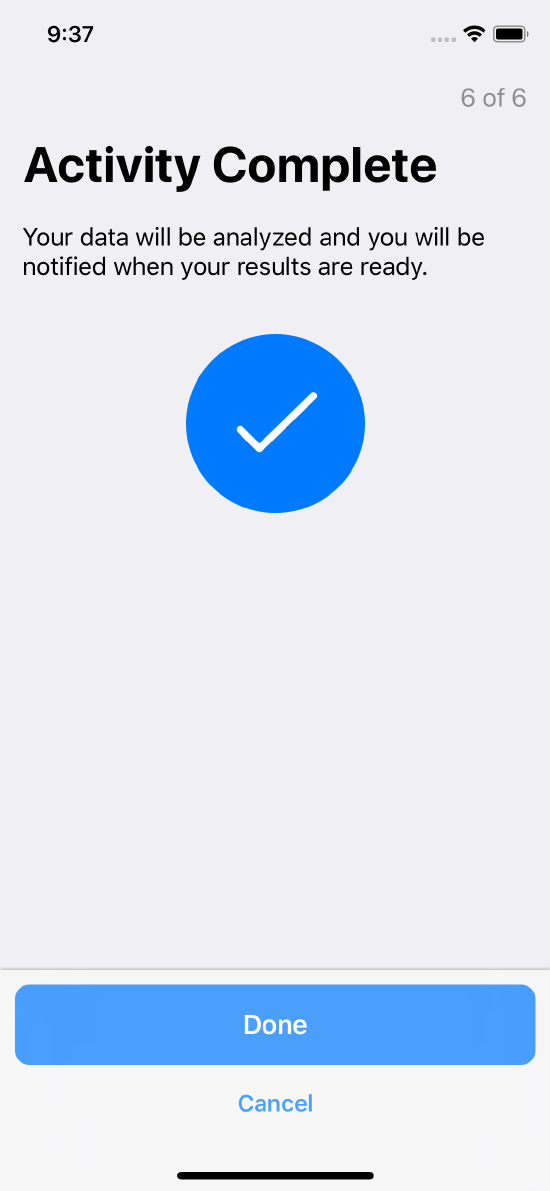

Tapping speed steps are shown in Figure 3.

Instruction step introducing the task

Providing instruction for the right hand task

The user rapidly taps on the targets using the right hand

Providing instruction for the left hand task

The user rapidly taps on the targets using the left hand

Confirms task completion

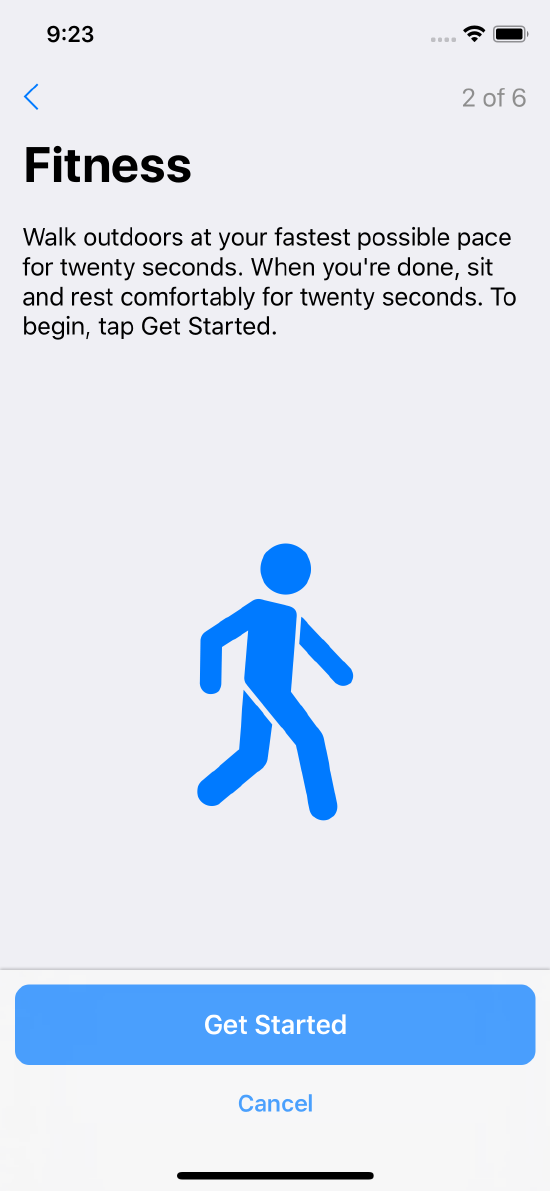

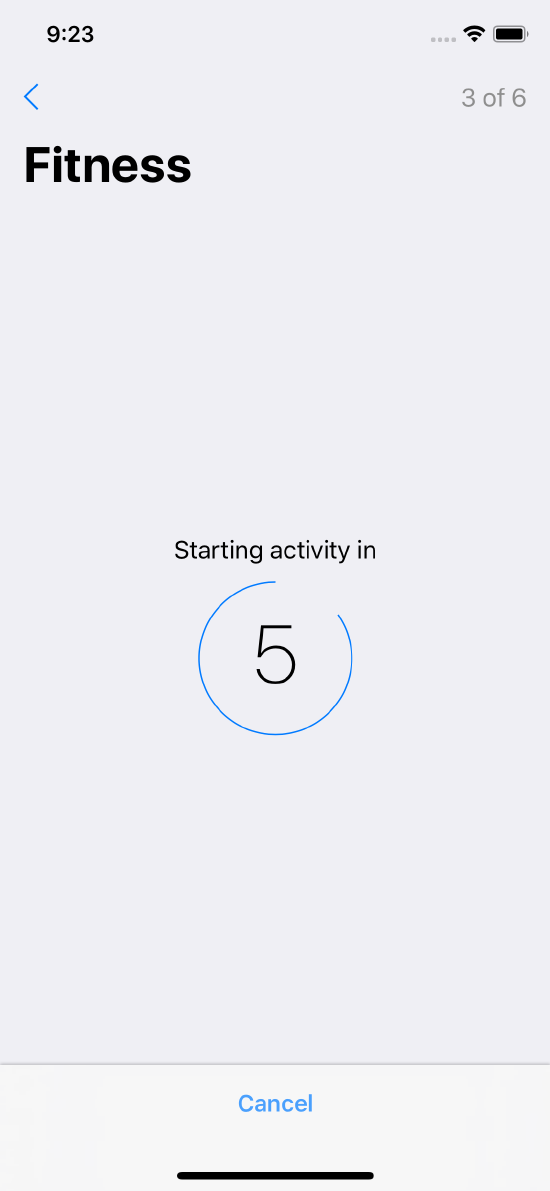

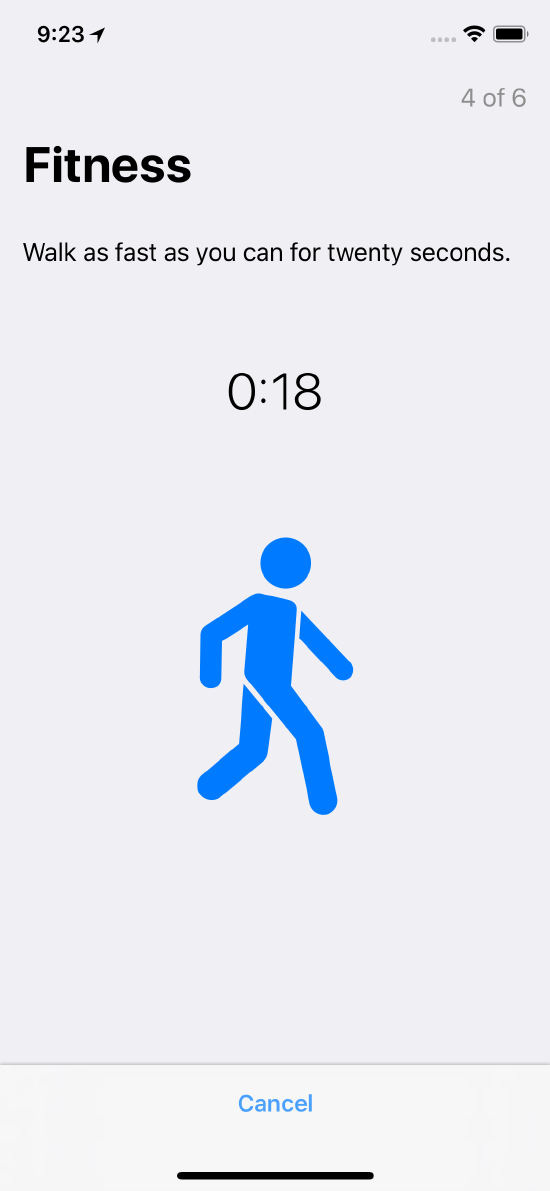

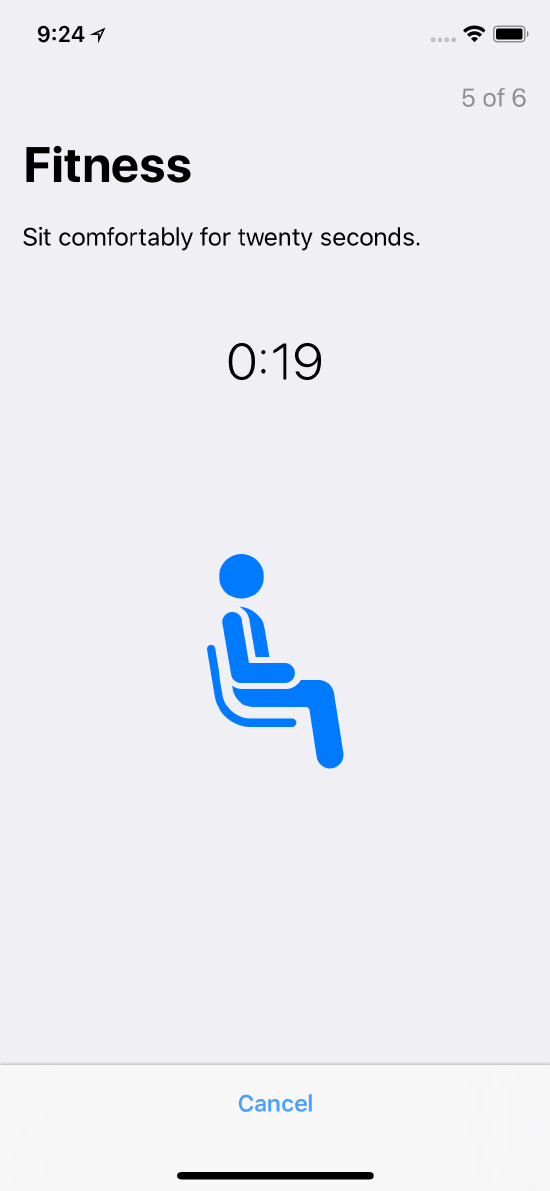

Fitness

In the fitness task (see the method ORKOrderedTask fitnessCheckTaskWithIdentifier:intendedUseDescription:walkDuration:restDuration:options:), the user walks for a specified duration (usually several minutes). Sensor data is collected and returned through the task view controller’s delegate. Sensor data can include accelerometer, device motion, pedometer, location, and heart rate data where available.

Toward the end of the walk, if heart rate data is available, the user is asked to sit down and rest for a period. Data collection continues during the rest period.

Fitness steps are shown in Figure 4.

Instruction step introducing the task

Instruction step introducing the task

Count down a specified duration to begin the task

Displays distance and heart rate

The rest step, which can be skipped if heart rate data is unavailable

Confirms task completion

All of the data is collected from public CoreMotion and HealthKit APIs on iOS, and serialized to JSON. No analysis is applied to the data by the ResearchKit framework.

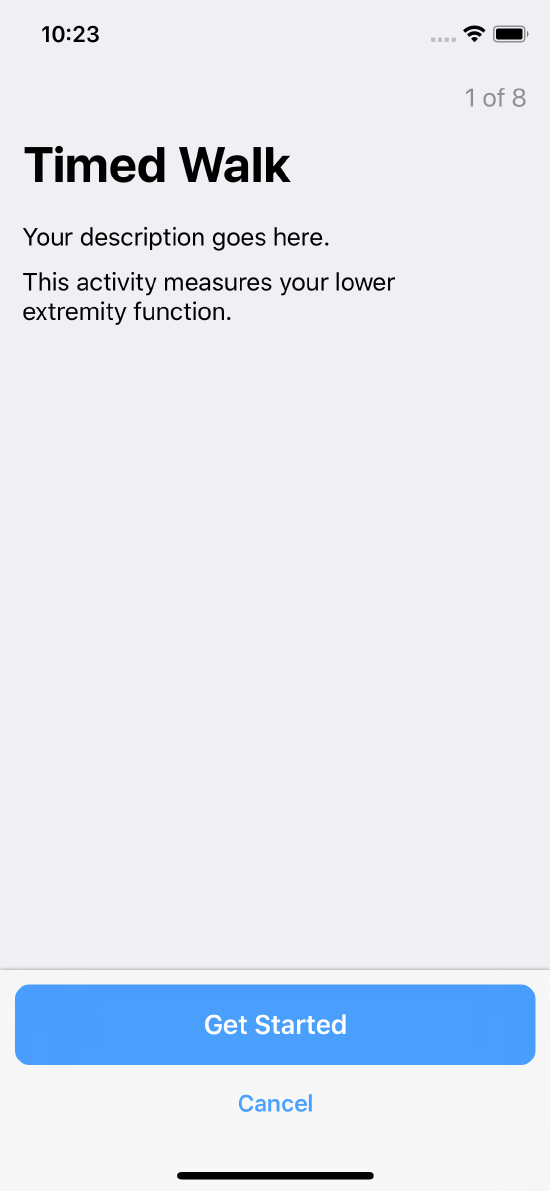

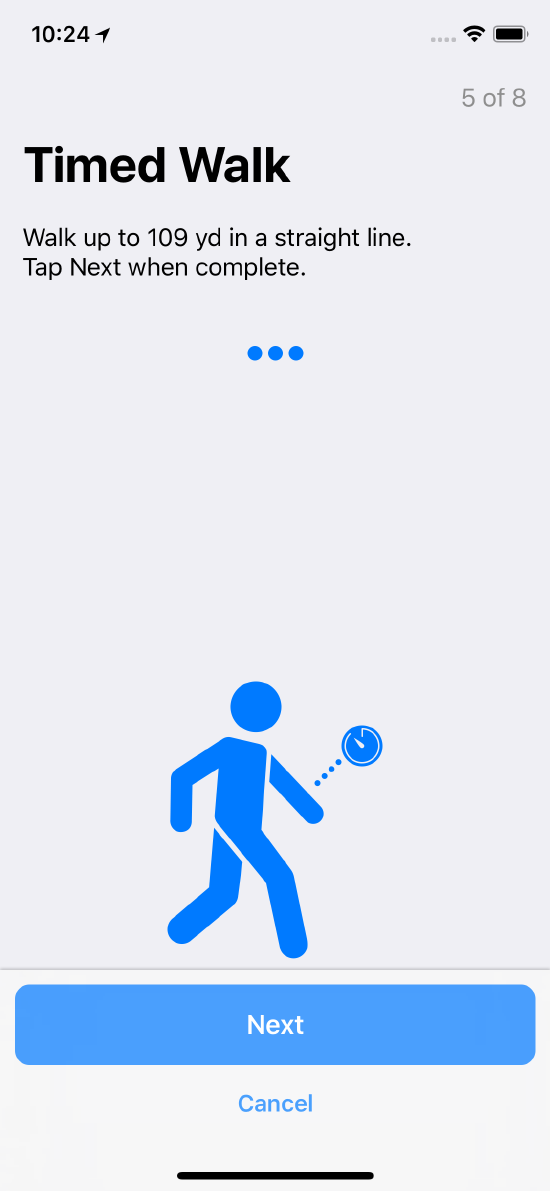

Timed Walk

In the timed walk task (see the method [ORKOrderedTask timedWalkTaskWithIdentifier:intendedUseDescription:distanceInMeters:timeLimit:turnAroundLimit:includeAssistiveDeviceForm:options:]), the user is asked to walk quickly and safely for a specific distance. The task is immediately administered again by having the user walk the same distance in the opposite direction. The timed walk task differs from both the fitness and the short walk tasks in that the distance walked by the user is fixed. A timed walk task measures the user’s lower-extremity function.

The data collected by this task includes accelerometer, device motion, pedometer data, and location of the user. Note that the location is available only if the user agrees to share their location.

Data collected by the task is in the form of an ORKTimedWalkResult object.

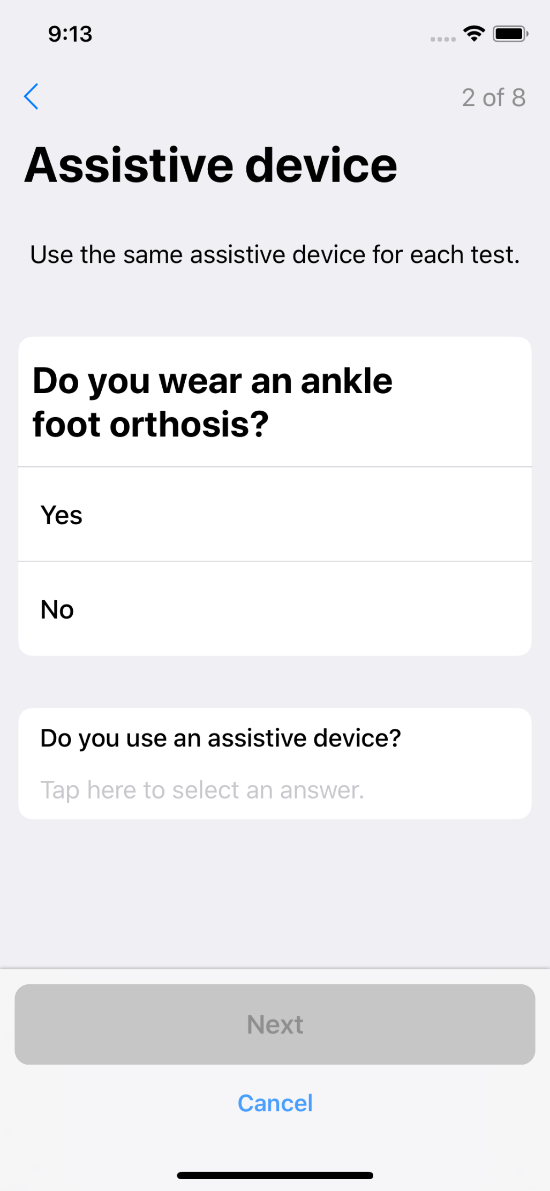

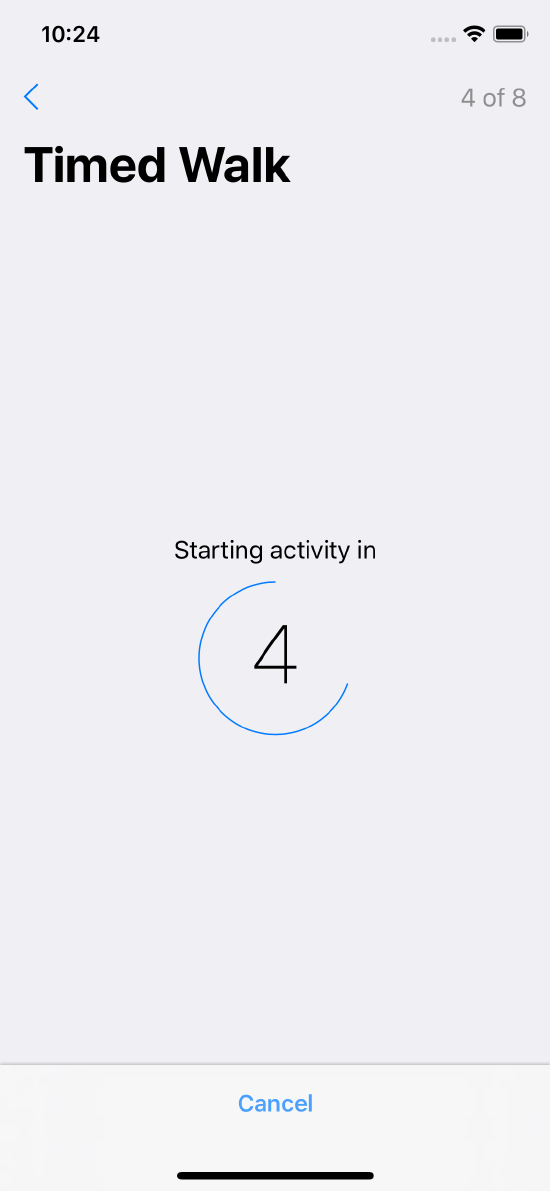

Timed walk steps are shown in Figure 5.

Instruction step introducing the task

Instruction step introducing the task

Gathers information about the user’s assistive device

Gathers information about the user’s assistive device

Instructions on how to perform the task

Instructions on how to perform the task

Count down a specified duration to begin the task

Count down a specified duration to begin the task

Actual task screen

Actual task screen

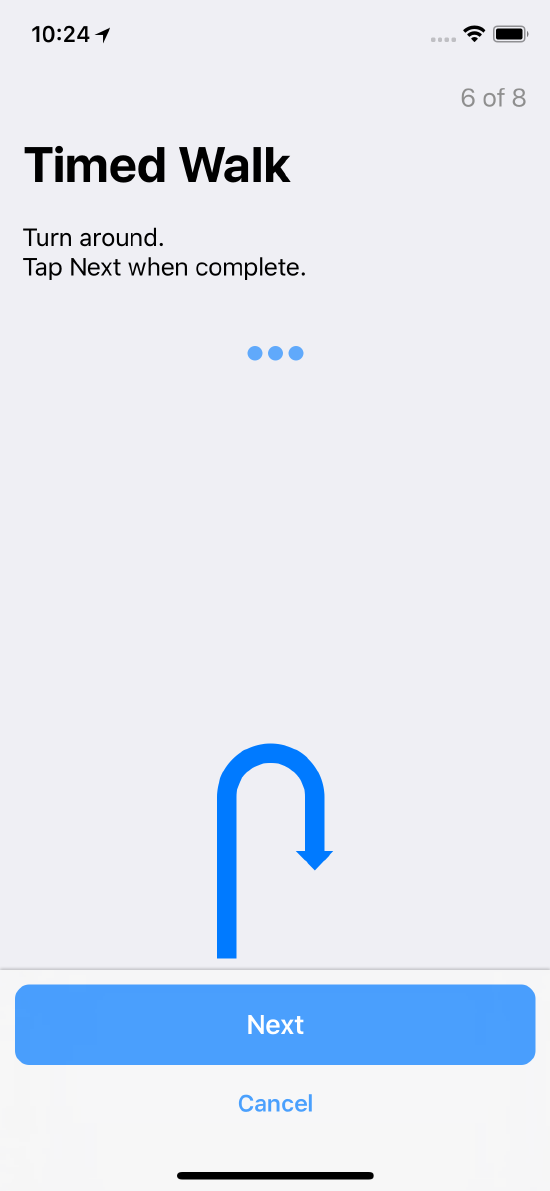

Instruct the user to turn around

Instruct the user to turn around

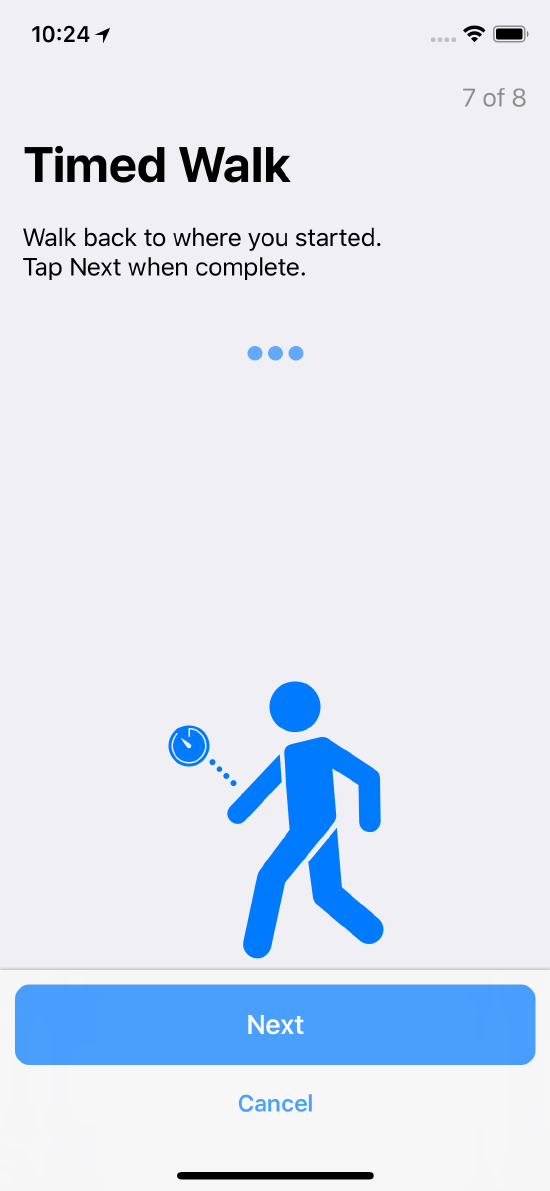

Actual task screen

Actual task screen

Task completion

Task completion

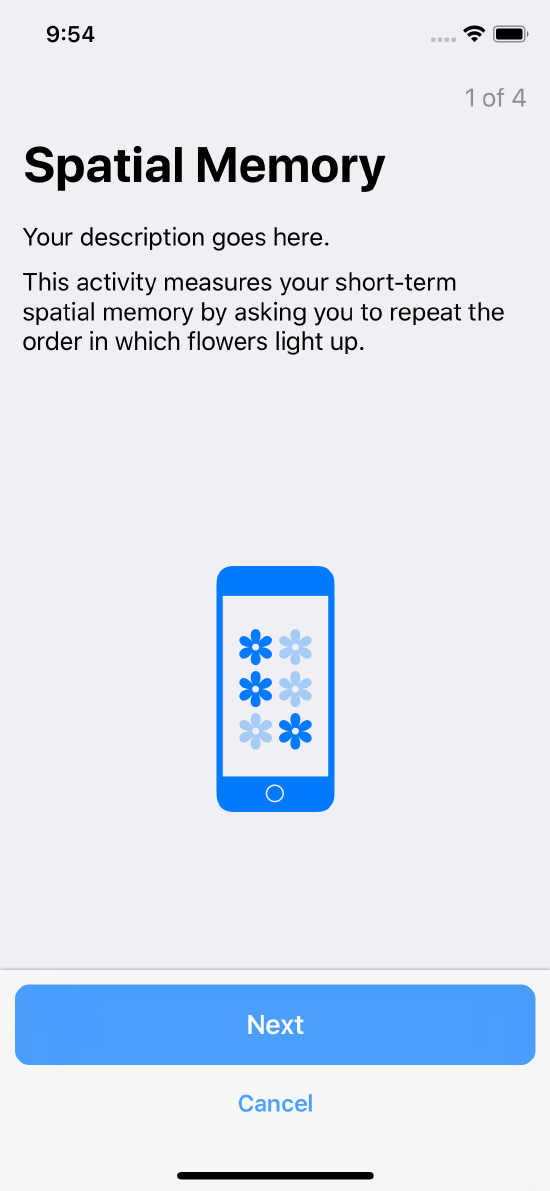

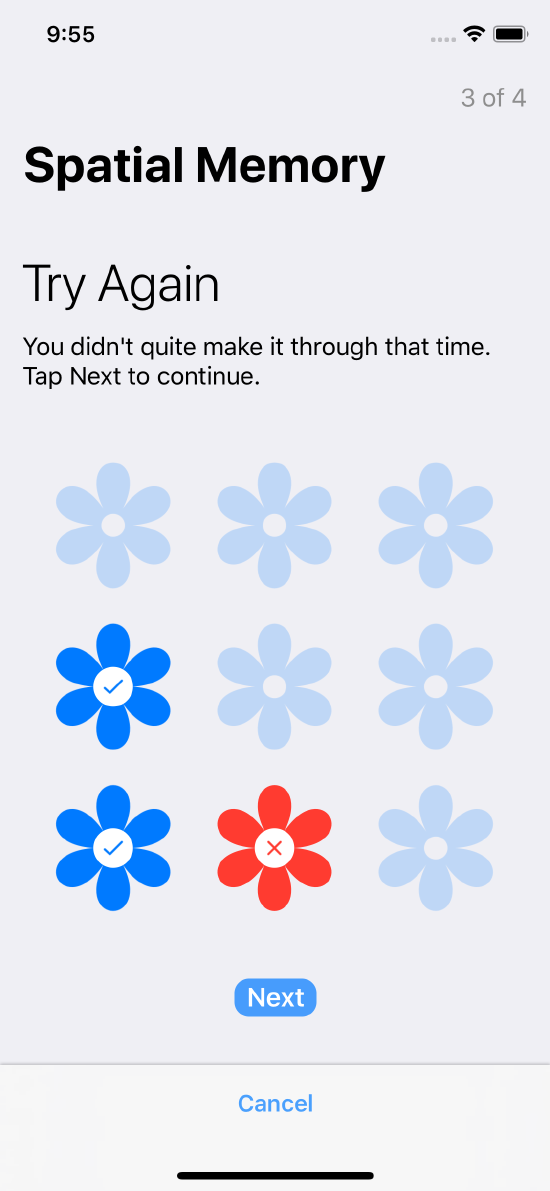

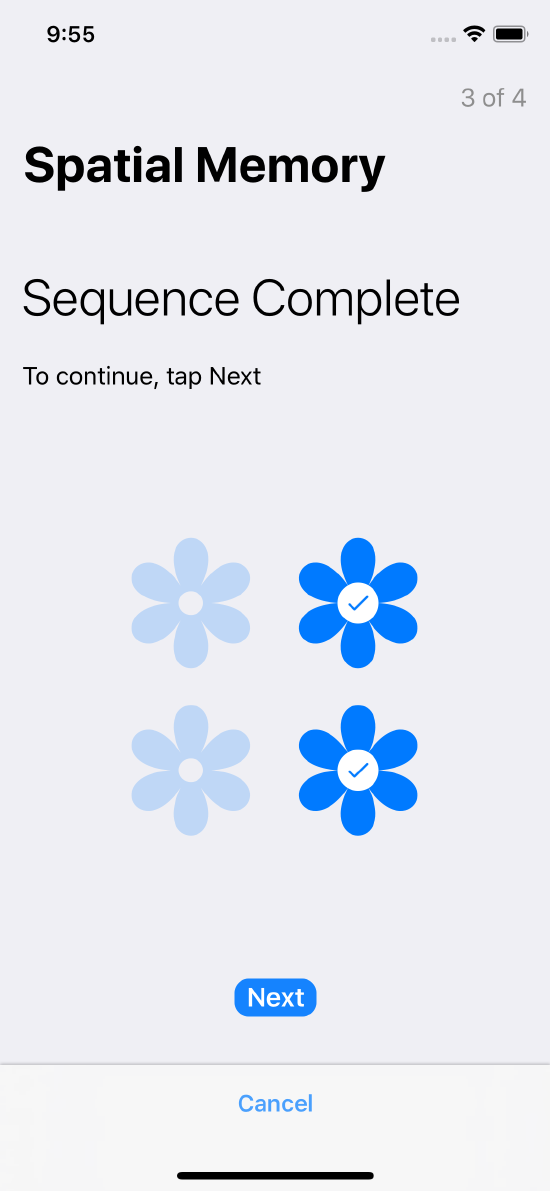

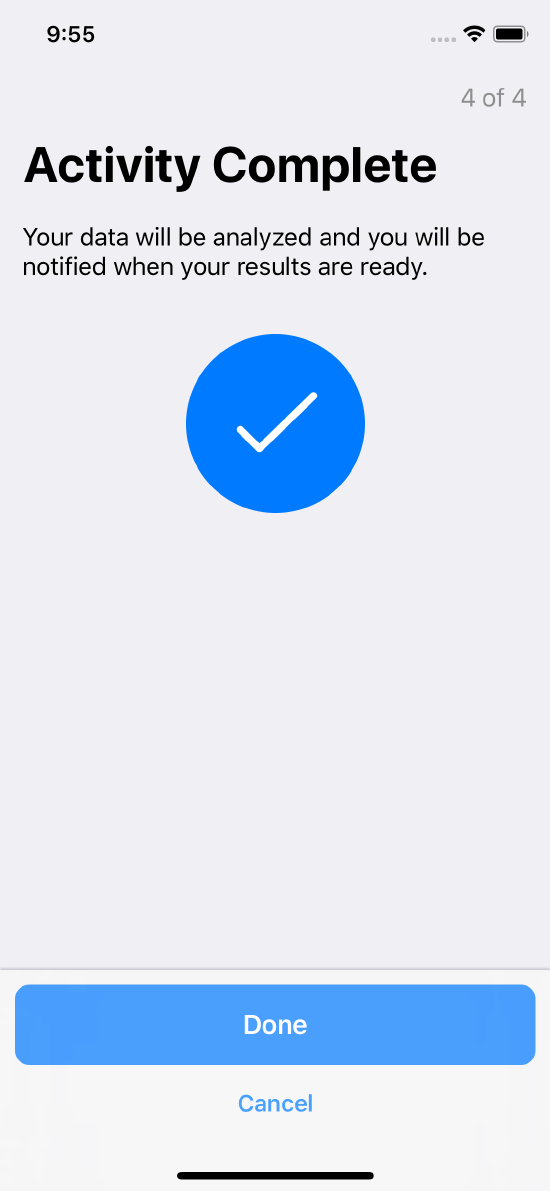

Spatial Memory

In the spatial memory task (see the method ORKOrderedTask spatialSpanMemoryTaskWithIdentifier:intendedUseDescription:initialSpan:minimumSpan:maximumSpan:playSpeed:maximumTests:maximumConsecutiveFailures:customTargetImage:customTargetPluralName:requireReversal:options:), the user is asked to observe and then recall pattern sequences of increasing length in a game-like environment. The task collects data that can be used to assess visuospatial memory and executive function.

The span (that is, the length of the pattern sequence) is automatically varied during the task, increasing after successful completion of a sequence and decreasing after failures, in the range from minimumSpan to maximumSpan. The playSpeed property lets you control the speed of sequence playback, and the customTargetImage property lets you customize the shape of the tap target. The game finishes when either maximumTests tests have been completed, or the user has made maximumConsecutiveFailures errors in a row.
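The span adjustment described above can be modeled as a simple staircase. The sketch below is an illustration only, not ResearchKit's implementation: the SpanStaircase type is hypothetical, its property names mirror the task parameters, and the step size of one per test is an assumption.

```swift
import Foundation

/// Hypothetical model of the span adjustment; not part of ResearchKit.
struct SpanStaircase {
    let minimumSpan: Int
    let maximumSpan: Int
    let maximumTests: Int
    let maximumConsecutiveFailures: Int

    private(set) var span: Int
    private(set) var testsCompleted = 0
    private(set) var consecutiveFailures = 0

    init(initialSpan: Int, minimumSpan: Int, maximumSpan: Int,
         maximumTests: Int, maximumConsecutiveFailures: Int) {
        self.minimumSpan = minimumSpan
        self.maximumSpan = maximumSpan
        self.maximumTests = maximumTests
        self.maximumConsecutiveFailures = maximumConsecutiveFailures
        // Clamp the starting span into the allowed range.
        self.span = min(max(initialSpan, minimumSpan), maximumSpan)
    }

    /// The game ends after enough tests or enough failures in a row.
    var isFinished: Bool {
        testsCompleted >= maximumTests || consecutiveFailures >= maximumConsecutiveFailures
    }

    /// Records one test outcome and adjusts the span (assumed step size: 1).
    mutating func record(success: Bool) {
        guard !isFinished else { return }
        testsCompleted += 1
        if success {
            consecutiveFailures = 0
            span = min(span + 1, maximumSpan)
        } else {
            consecutiveFailures += 1
            span = max(span - 1, minimumSpan)
        }
    }
}
```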

The results collected are scores derived from the game, the details of the game, and the touch inputs made by the user.

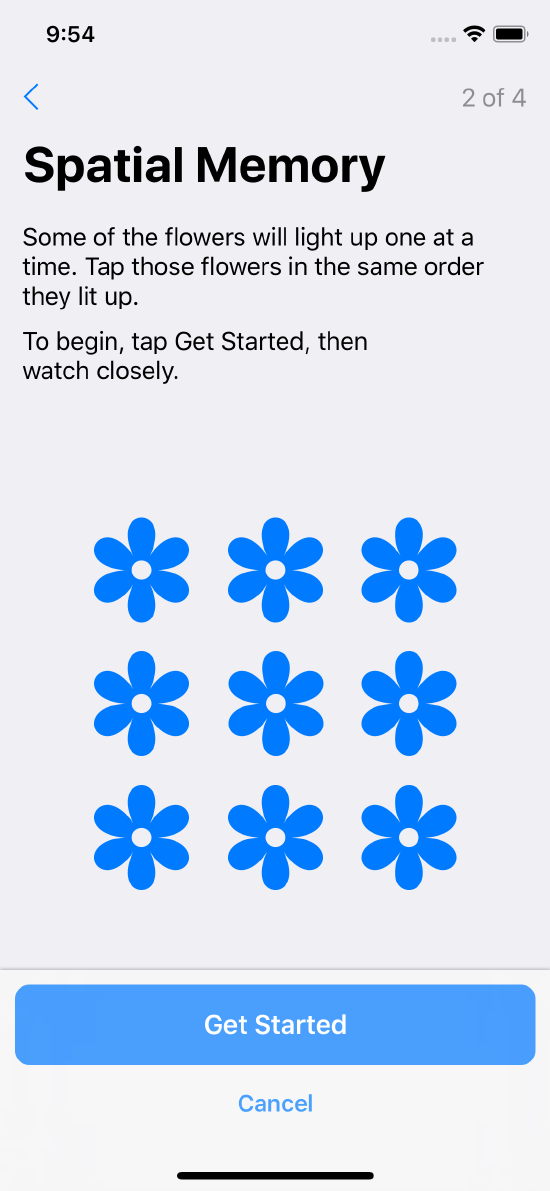

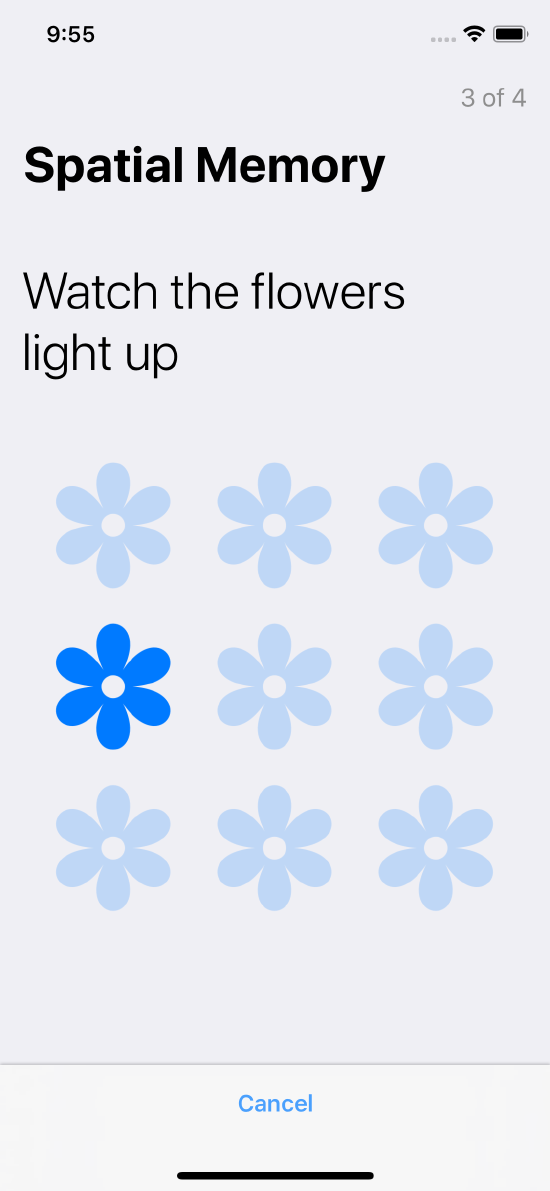

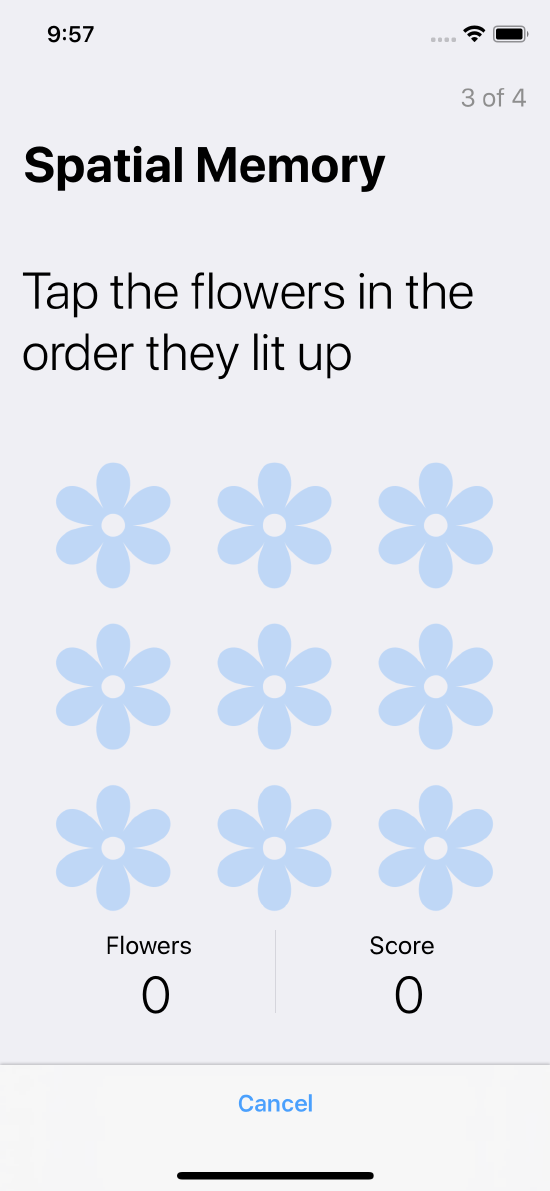

Spatial memory test steps are shown in Figure 6.

Instruction step introducing the task

Describes what the user must do

The flowers light up in sequence

The user must recall the sequence

If users make a mistake, they will be offered a new pattern

The user is offered a shorter sequence

Confirms task completion

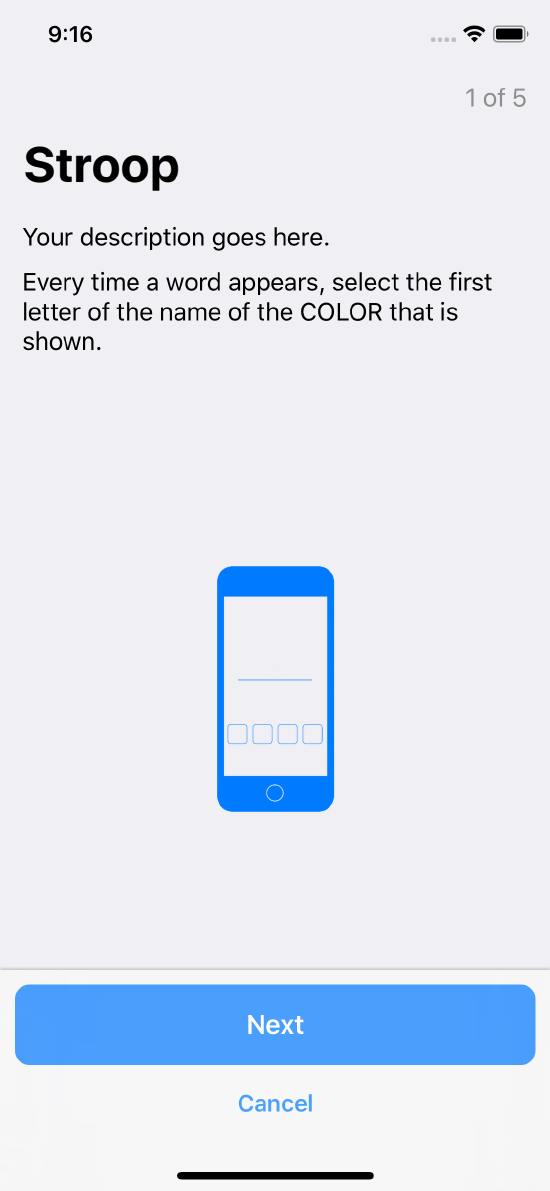

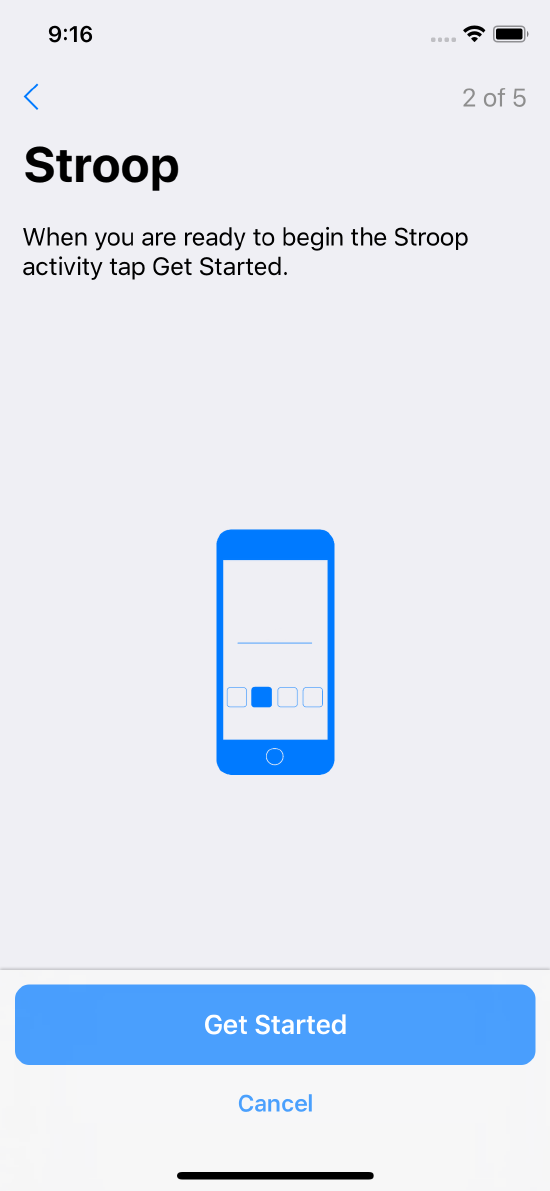

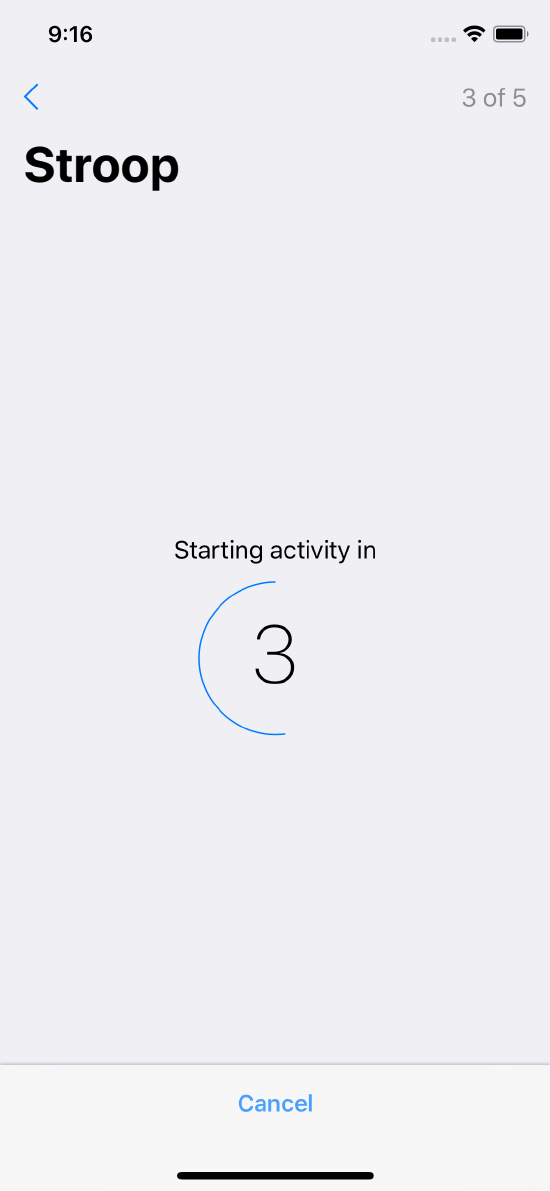

Stroop Test

In the Stroop test, the participant is shown a series of words that are displayed in color, and must select the first letter of the color’s name. Stroop test steps are shown in Figure 7.

Instruction step introducing the task

Further instructions

Count down a specified duration to begin the activity

A typical Stroop test; the correct answer is “B” for blue

Confirms task completion

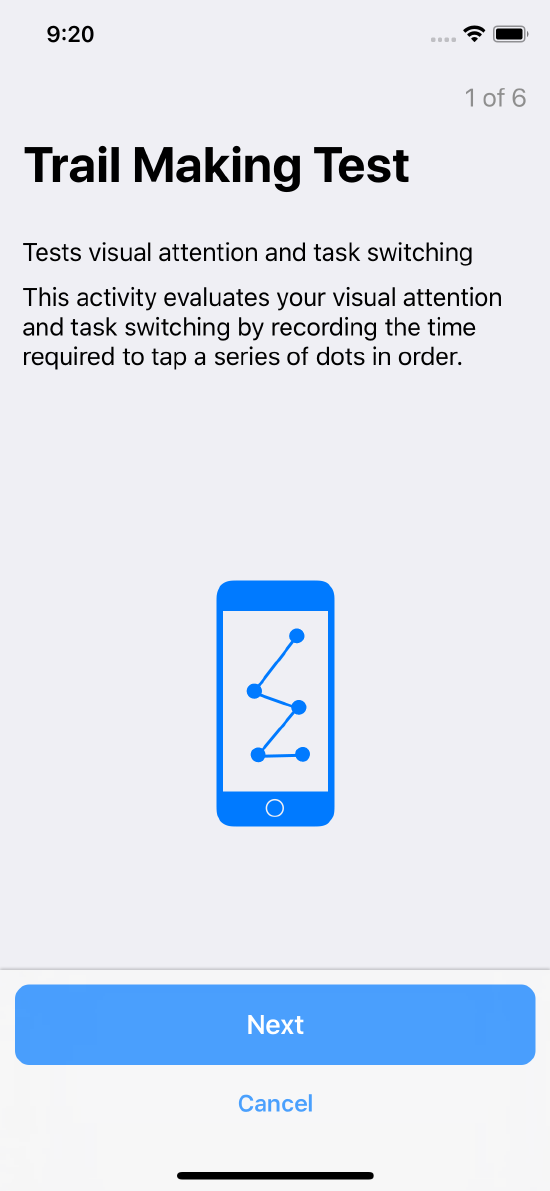

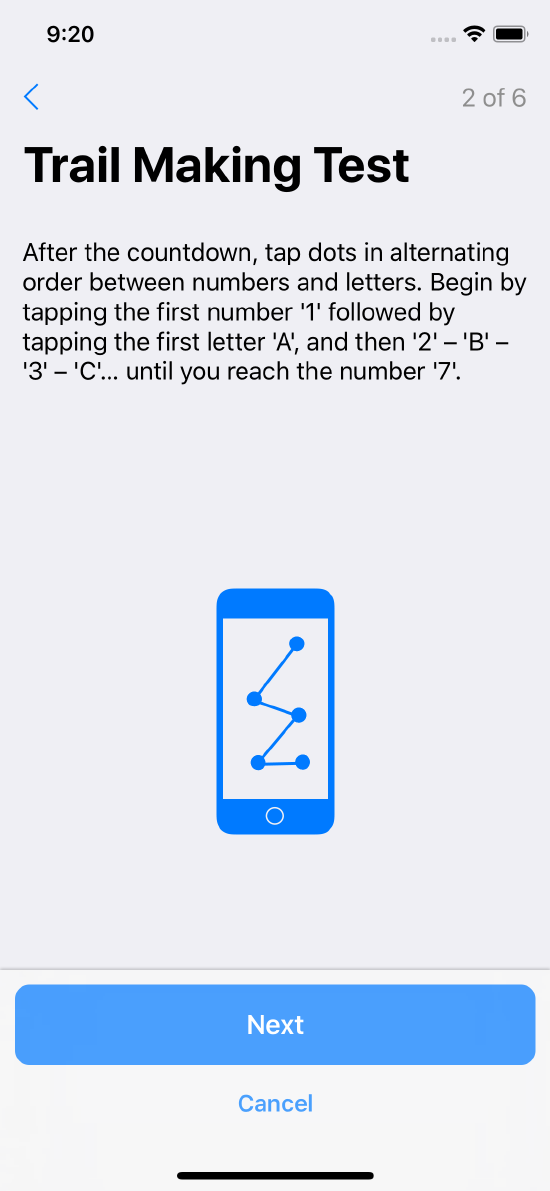

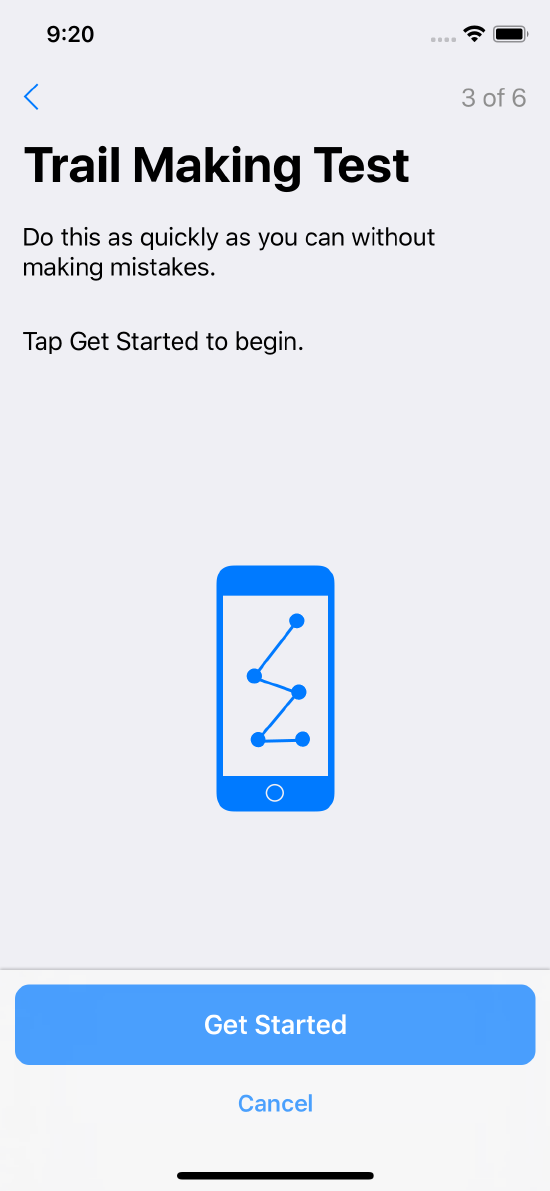

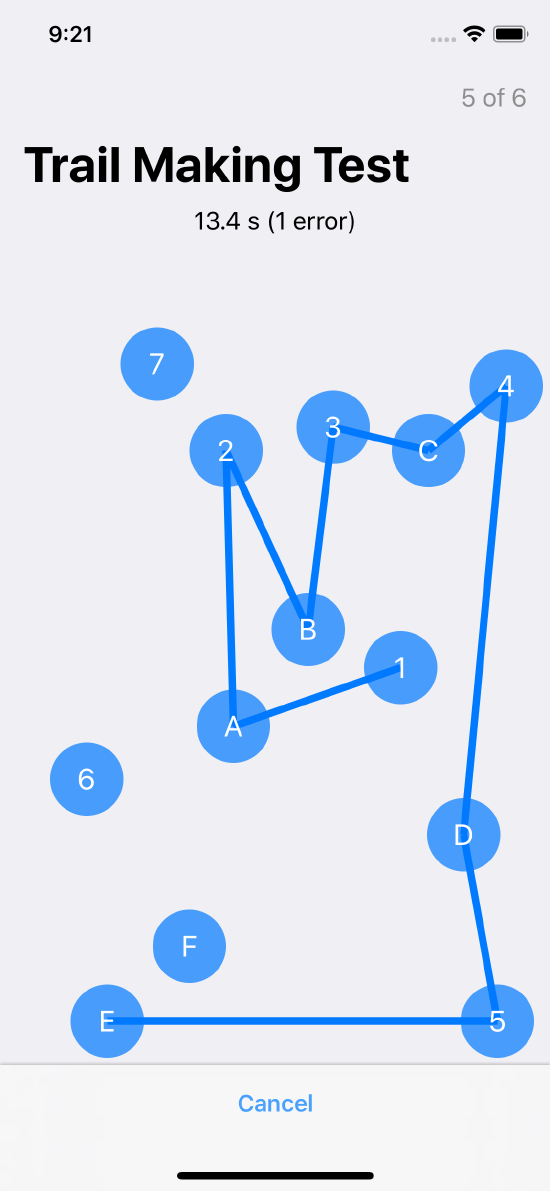

Trail Making Test

In the trail making test, the participant connects a series of labelled circles, in order. The time to complete the test is recorded. The circles can be labelled with sequential numbers (1, 2, 3, …) or with alternating numbers and letters (1, a, 2, b, 3, c, …).

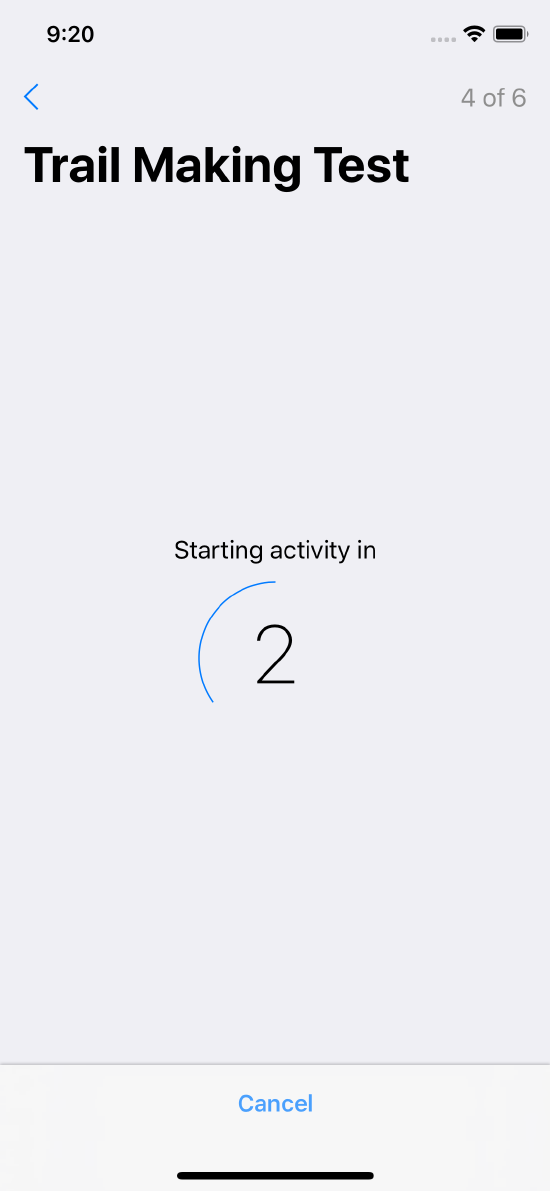

Trail making test steps are shown in Figure 8.

Instruction step introducing the task

Instruction step introducing the task

Further instructions

Count down a specified duration to begin the activity

The activity screen, shown mid-task

Confirms task completion

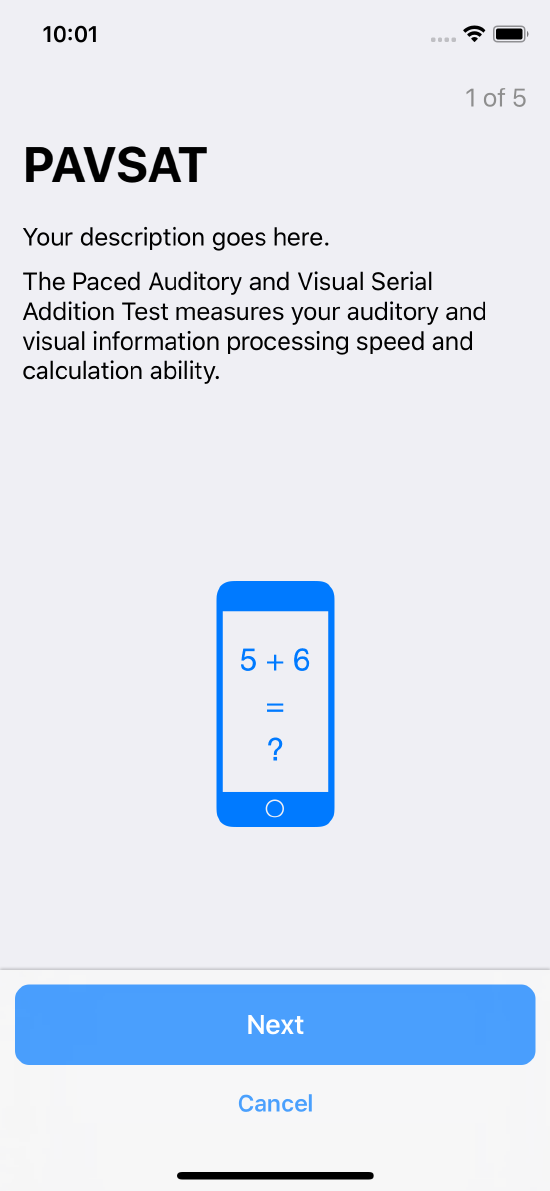

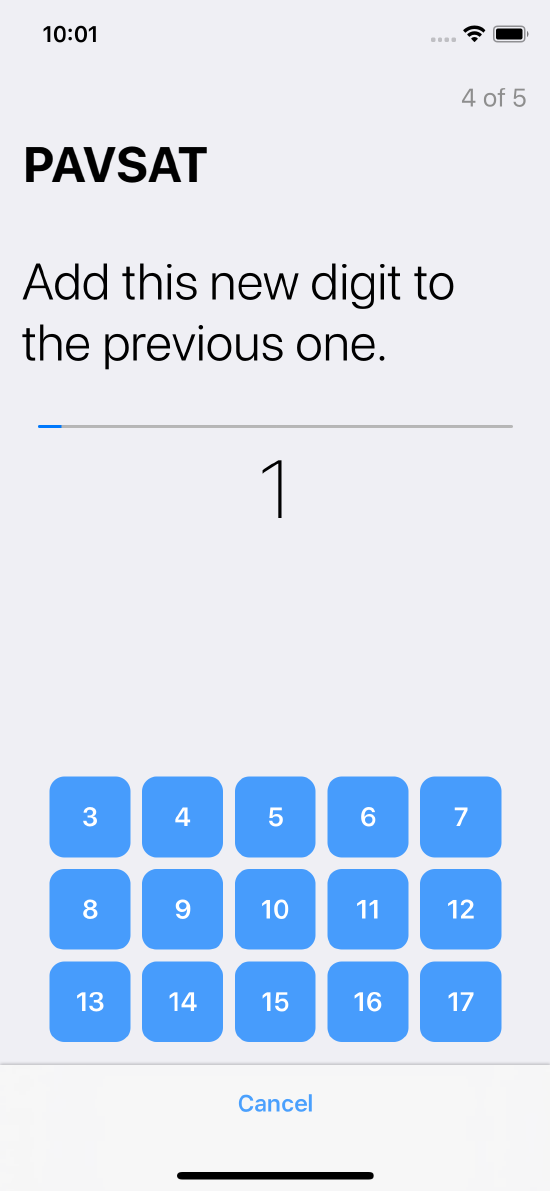

Paced Serial Addition Test (PSAT)

The Paced Serial Addition Test (PSAT) task (see the method ORKOrderedTask PSATTaskWithIdentifier:intendedUseDescription:presentationMode:interStimulusInterval:stimulusDuration:seriesLength:options:) assesses the user’s auditory and/or visual information processing speed, flexibility, and calculation ability.

Single digits are presented every two or three seconds and the user must add each new digit to the one immediately before.

There are three variations of this test:

- PASAT: Paced Auditory Serial Addition Test - the device speaks the digit every two or three seconds.

- PVSAT: Paced Visual Serial Addition Test - the device shows the digit on screen.

- PAVSAT: Paced Auditory and Visual Serial Addition Test - the device speaks the digit and shows it onscreen every two to three seconds.

The score for the PSAT task is the total number of correct answers out of the number of possible correct answers. Data collected by the task is in the form of an ORKPSATResult object.
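The scoring rule above can be sketched as follows. This is a minimal illustration, assuming the presented digits and the user's answers have already been extracted into plain arrays; the scorePSAT function and its parameter layout are hypothetical and do not reflect the structure of ORKPSATResult.

```swift
import Foundation

/// Scores a PSAT series. `digits` are the presented digits in order and
/// `answers[i]` is the user's response after digit i + 1 (nil when unanswered).
func scorePSAT(digits: [Int], answers: [Int?]) -> (correct: Int, possible: Int) {
    // The first digit has no predecessor, so n digits allow n - 1 answers.
    let possible = max(digits.count - 1, 0)
    var correct = 0
    for index in 0..<possible {
        // Each expected answer is the new digit plus the one immediately before it.
        let expected = digits[index] + digits[index + 1]
        if index < answers.count, answers[index] == expected {
            correct += 1
        }
    }
    return (correct, possible)
}
```

For the digits 3, 5, 2, 7 the expected answers are 8, 7, and 9, so a user who answers 8 and 7 and misses the last addition scores 2 out of 3.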

PVSAT steps are shown in Figure 9.

Note that the visual and auditory components of the task are optional. You can choose to include either of them or both.

Instruction step introducing the task

Instruction step introducing the task

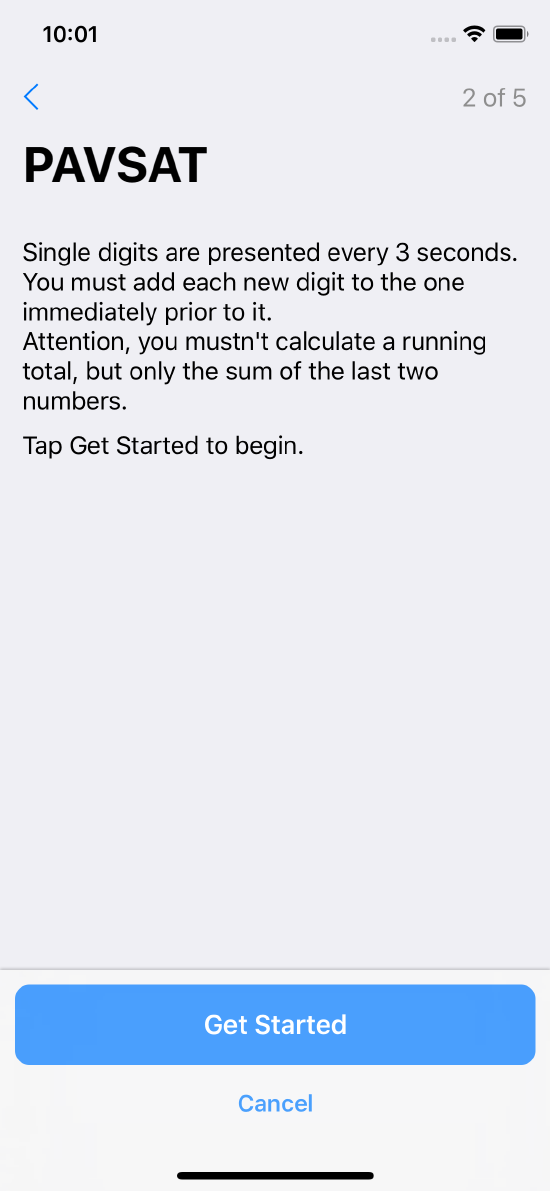

Describes what the user must do

Describes what the user must do

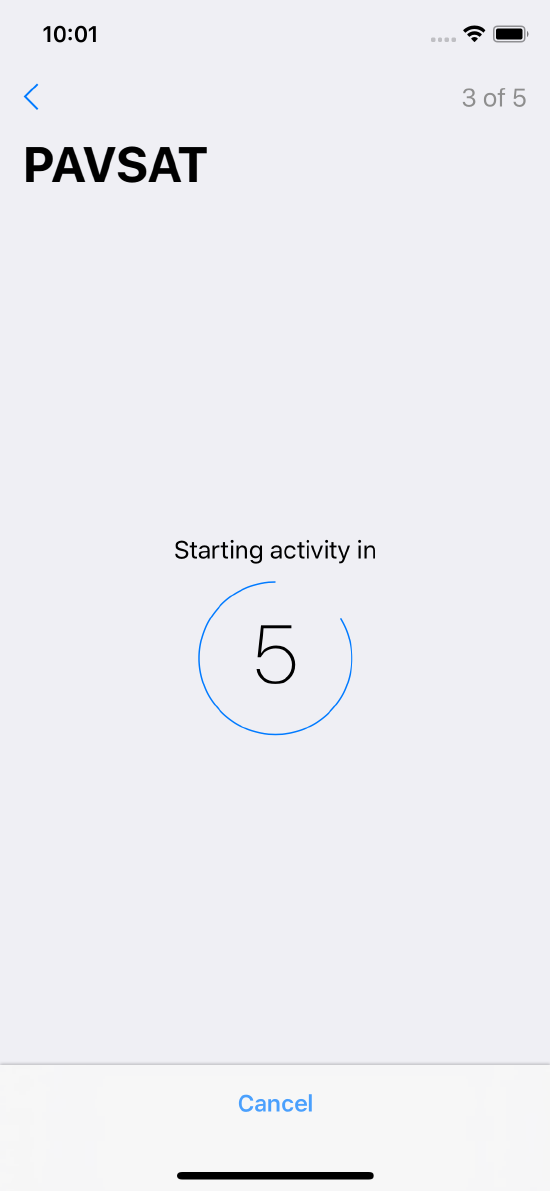

Count down a specified duration into the task

Count down a specified duration into the task

The user must add each new digit on the screen to the one immediately prior to it

The user must add each new digit on the screen to the one immediately prior to it

Confirms task completion

Confirms task completion

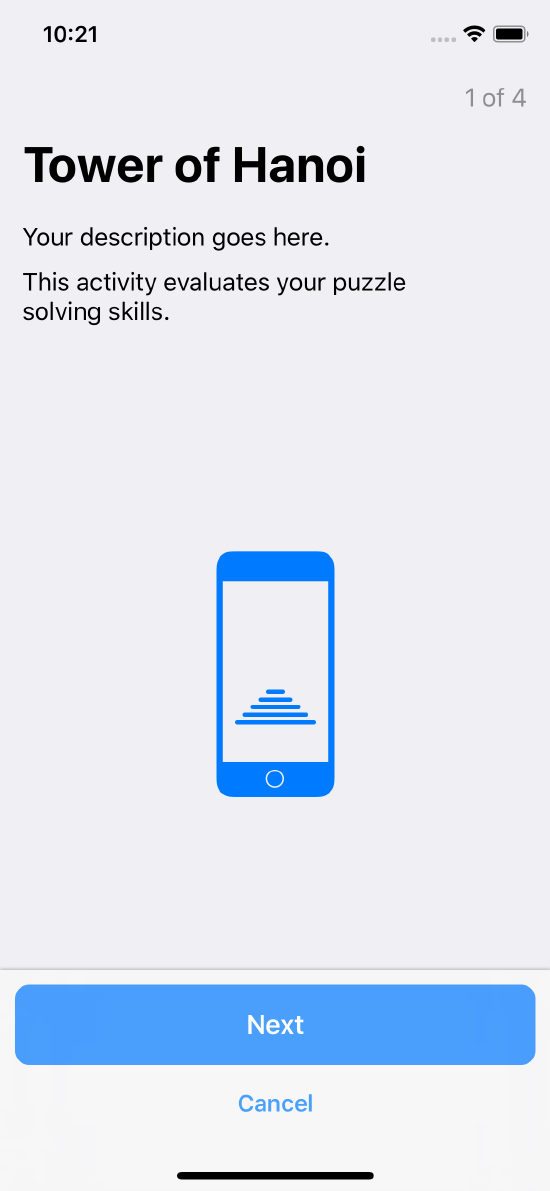

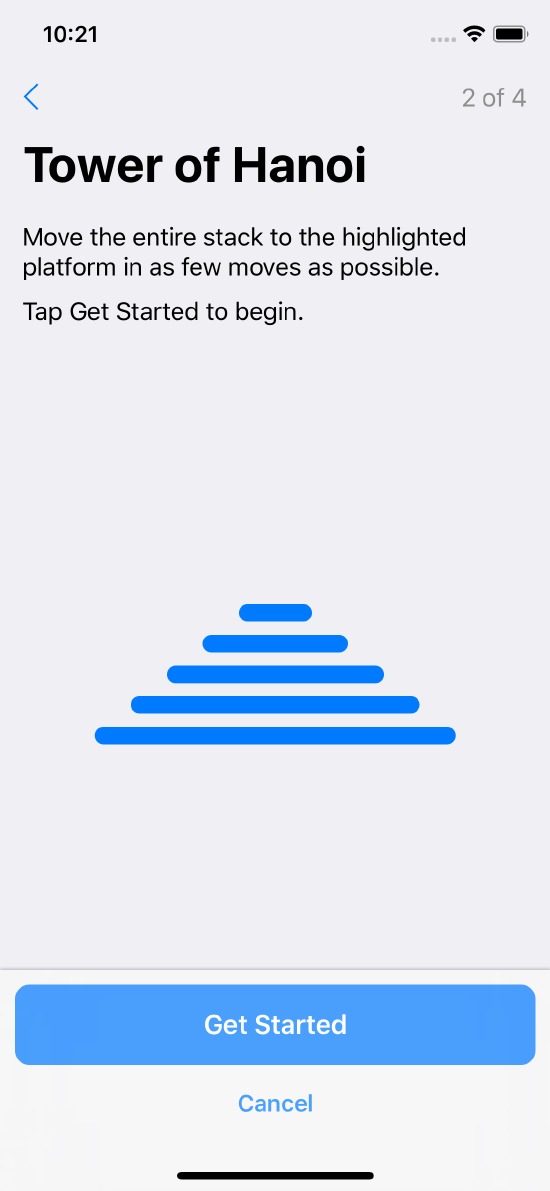

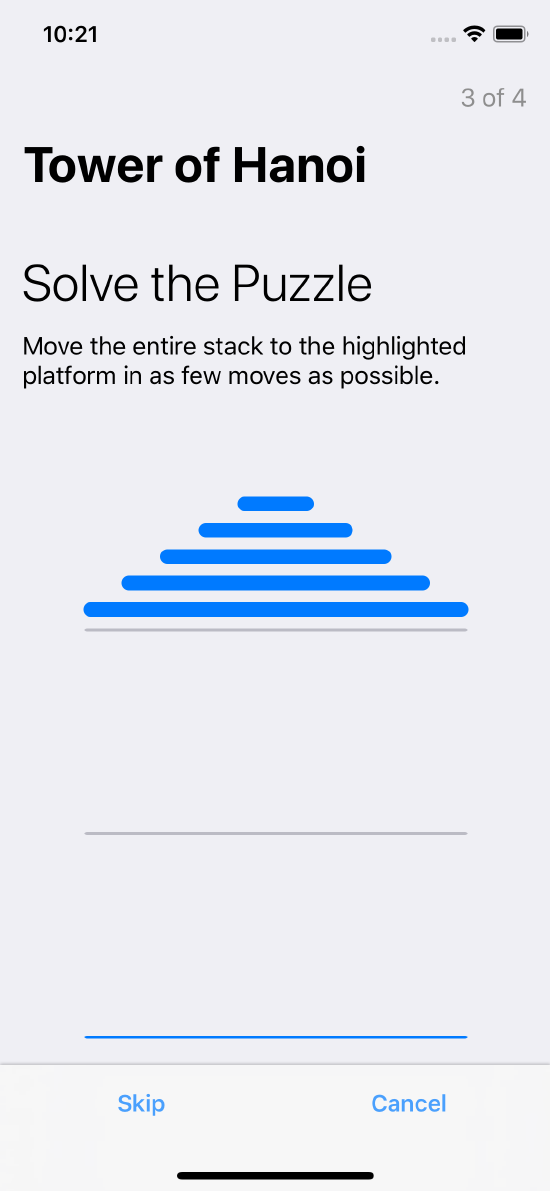

Tower of Hanoi

In the Tower of Hanoi task (see the method ORKOrderedTask towerOfHanoiTaskWithIdentifier:intendedUseDescription:numberOfDisks:options:), the user is asked to solve the classic Tower of Hanoi puzzle in a minimum number of moves. To solve the puzzle, the user must move the entire stack to the highlighted platform in as few moves as possible. This task measures the user’s problem solving skills. A Tower of Hanoi task finishes when the user completes the puzzle correctly or concedes that they cannot solve the puzzle.

Data collected by this task is in the form of an ORKTowerOfHanoiResult object. It contains every move taken by the user and indicates whether the puzzle was successfully completed or not.
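One way to interpret the recorded moves is to compare their count with the known minimum for the puzzle, which is 2^n − 1 moves for n disks. The sketch below assumes you have already read the number of moves and the completion flag from the result; the function names are hypothetical and not part of ResearchKit.

```swift
import Foundation

/// Minimum number of moves needed to solve a Tower of Hanoi puzzle with `disks` disks.
func minimumMoves(forDisks disks: Int) -> Int {
    precondition(disks >= 0 && disks < 63, "disk count out of range")
    return (1 << disks) - 1
}

/// Ratio of the optimal move count to the moves actually taken (1.0 is optimal).
/// Returns nil if the puzzle was not solved or no moves were recorded.
func moveEfficiency(disks: Int, movesTaken: Int, solved: Bool) -> Double? {
    guard solved, movesTaken > 0 else { return nil }
    return Double(minimumMoves(forDisks: disks)) / Double(movesTaken)
}
```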

Tower of Hanoi steps are shown in Figure 10.

Instruction step introducing the task

Describes what the user must do

Actual task

Confirms task completion

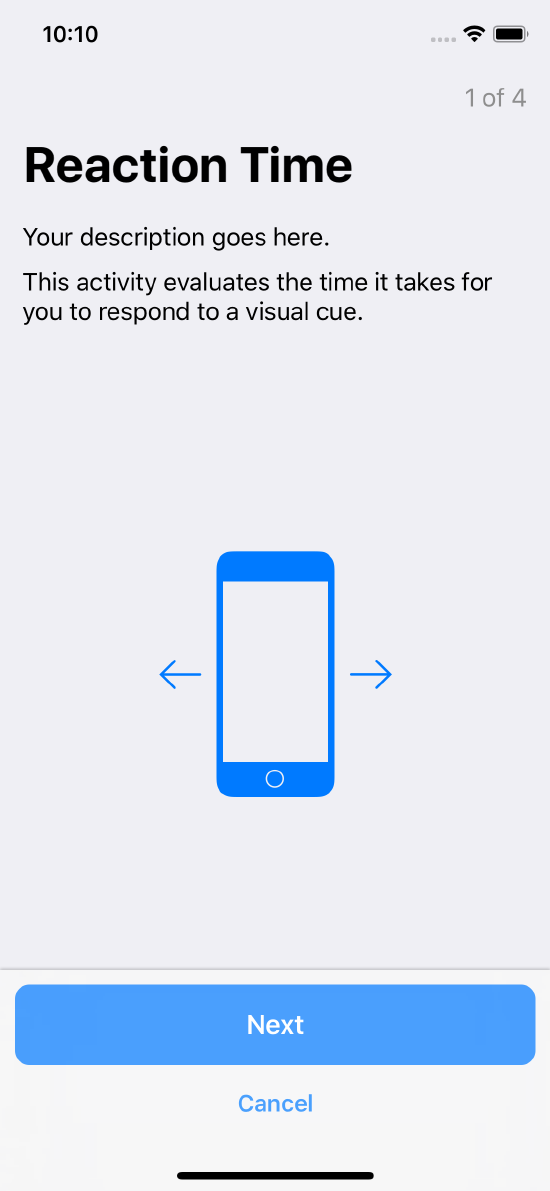

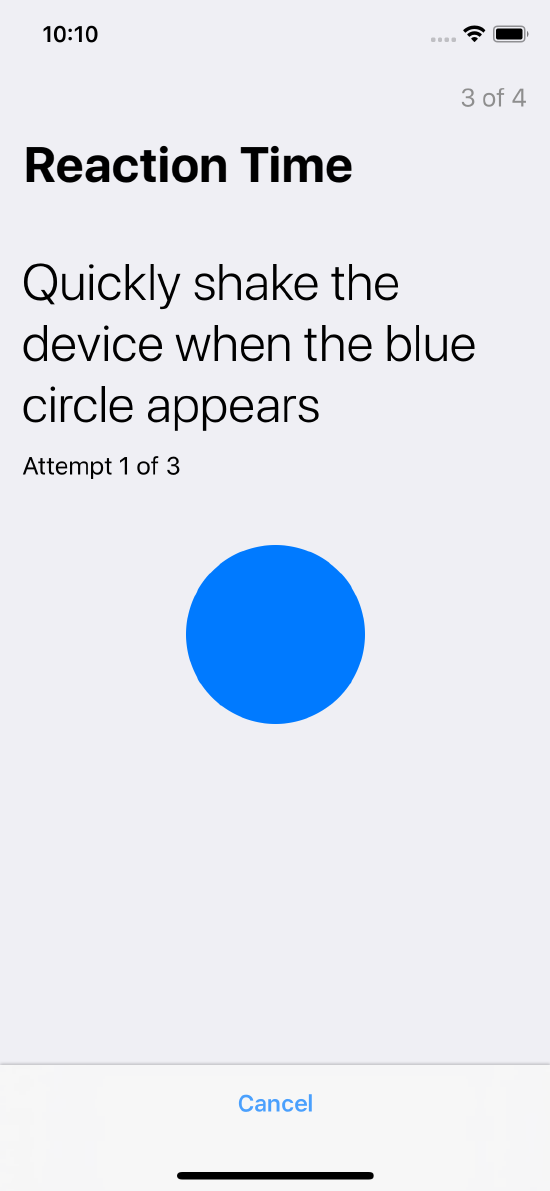

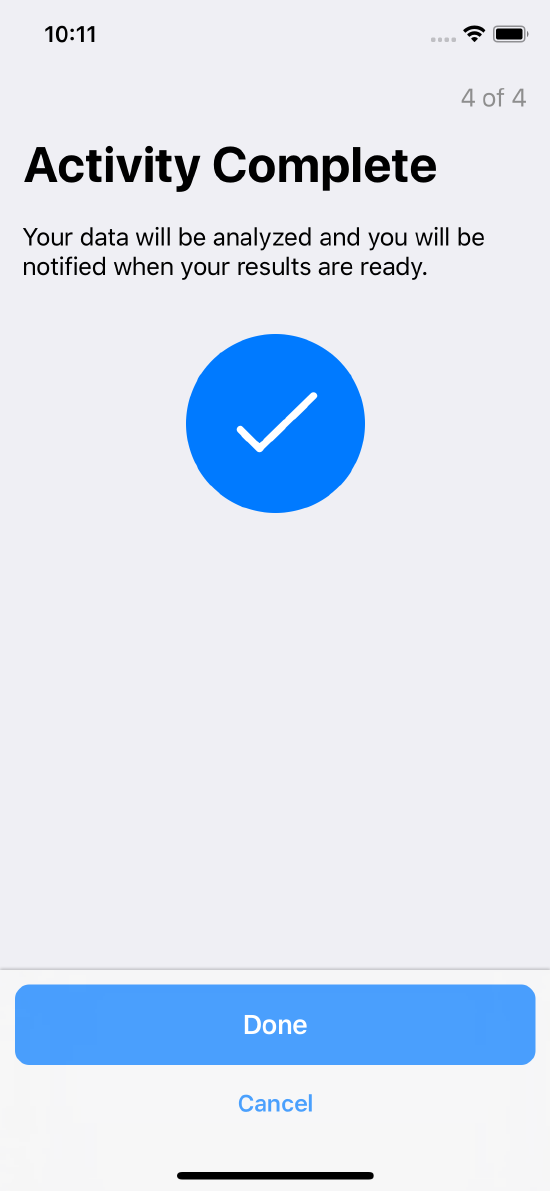

Reaction Time

In the reaction time task, the user shakes the device in response to a visual cue on the device’s screen. The task is divided into a number of attempts, which you determine. To complete an attempt, the user must shake or move the device with an acceleration that exceeds a threshold value (the thresholdAcceleration property) within the given time. The task finishes when the user successfully completes all the attempts as instructed. Use this task to evaluate a user’s response to the stimulus and calculate their reaction time. (See the method ORKOrderedTask reactionTimeTaskWithIdentifier:intendedUseDescription:maximumStimulusInterval:minimumStimulusInterval:thresholdAcceleration:numberOfAttempts:timeout:successSound:timeoutSound:failureSound:options:).

Data collected by this task is in the form of ORKReactionTimeResult objects. Each of these objects contains a timestamp representing the delivery of the stimulus and an ORKFileResult object that references the motion data collected during an attempt. To present this task, use an ORKTaskViewController object.
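A reaction time could be derived from the stimulus timestamp and the motion samples along the following lines. This is a sketch under stated assumptions: the MotionSample shape is hypothetical (it is not the on-disk format of the motion file), the samples are assumed to be sorted by time on the same clock as the stimulus timestamp, and comparing the vector magnitude against the threshold is an illustrative choice rather than the framework's documented rule.

```swift
import Foundation

/// One motion sample (hypothetical shape): time in seconds plus acceleration per axis.
struct MotionSample {
    let timestamp: Double
    let x: Double
    let y: Double
    let z: Double

    /// Euclidean magnitude of the acceleration vector.
    var magnitude: Double { (x * x + y * y + z * z).squareRoot() }
}

/// Returns the delay between the stimulus and the first sample whose acceleration
/// magnitude exceeds the threshold, or nil when none qualifies before the timeout.
func reactionTime(samples: [MotionSample], stimulusTimestamp: Double,
                  thresholdAcceleration: Double, timeout: Double) -> Double? {
    for sample in samples where sample.timestamp >= stimulusTimestamp {
        let elapsed = sample.timestamp - stimulusTimestamp
        if elapsed > timeout { return nil }
        if sample.magnitude > thresholdAcceleration { return elapsed }
    }
    return nil
}
```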

Reaction time steps are shown in Figure 11.

Instruction step introducing the task

Describes what the user must do

Actual task

Confirms task completion

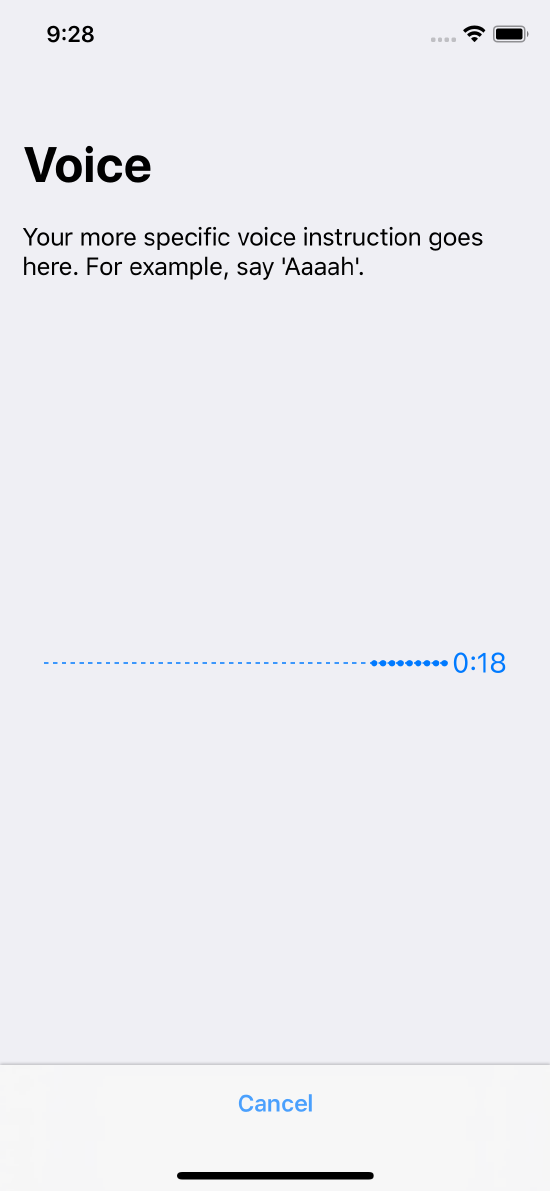

Sustained Phonation

In the sustained phonation task (see the method ORKOrderedTask audioTaskWithIdentifier:intendedUseDescription:speechInstruction:shortSpeechInstruction:duration:recordingSettings:checkAudioLevel:options:), the user makes a sustained sound, and an audio recording is made. The ResearchKit framework uses the AVFoundation framework to collect this data and to present volume indication during recording. Analysis of the audio data is not included in the ResearchKit framework; you can define your own analysis according to your requirements, which might naturally involve looking at the power spectrum and how it relates to the ability to produce certain sounds. Audio steps are shown in Figure 12.

Instruction step introducing the task

Instruction step describes user action during the task

Count down a specified duration to begin the task

Displays a graph during audio playback (audio collection step)

Confirms task completion

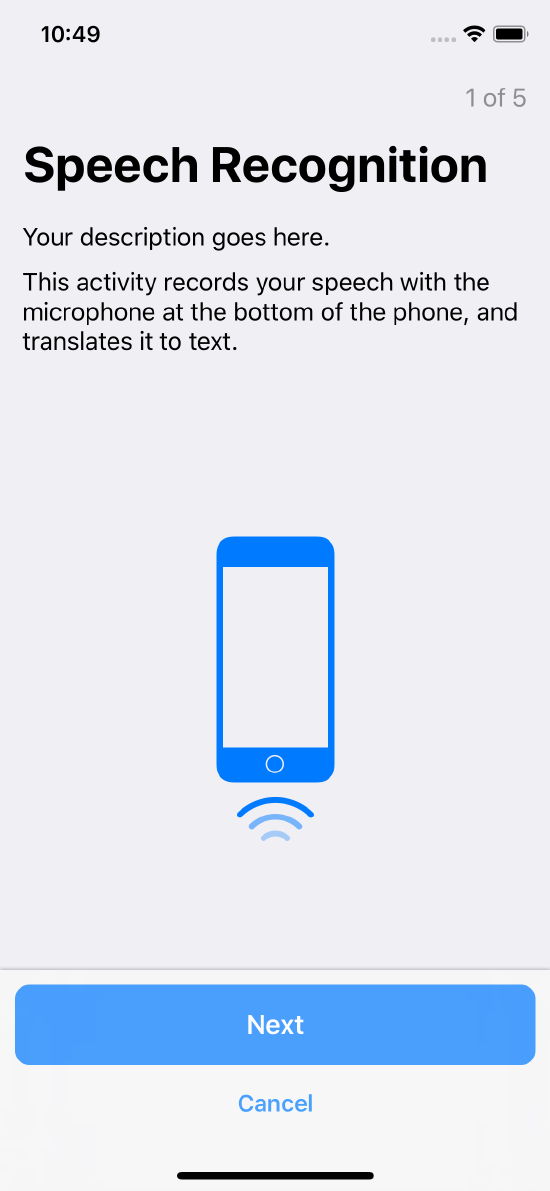

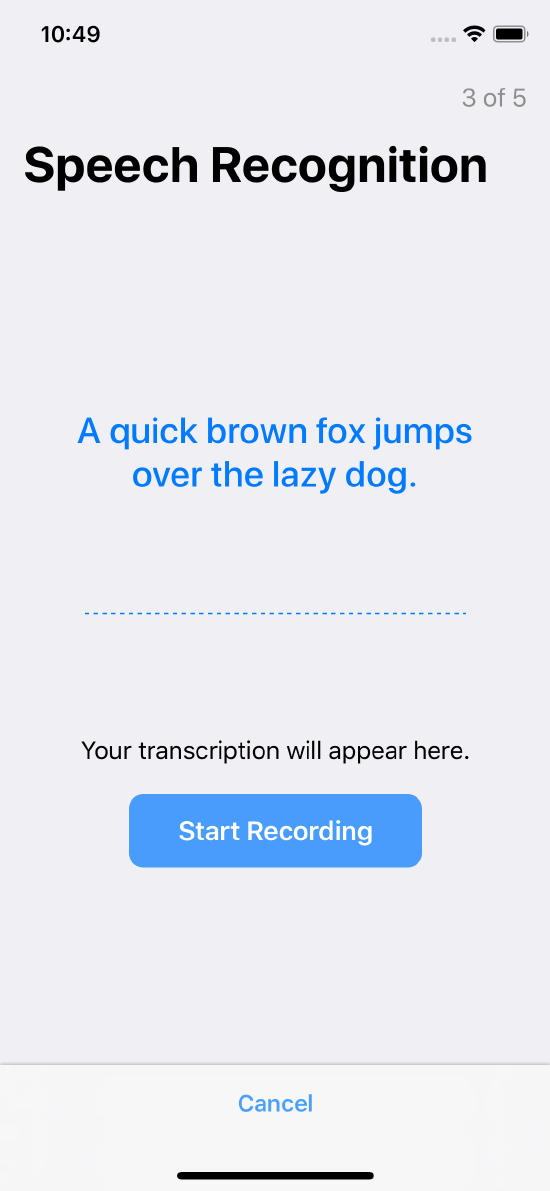

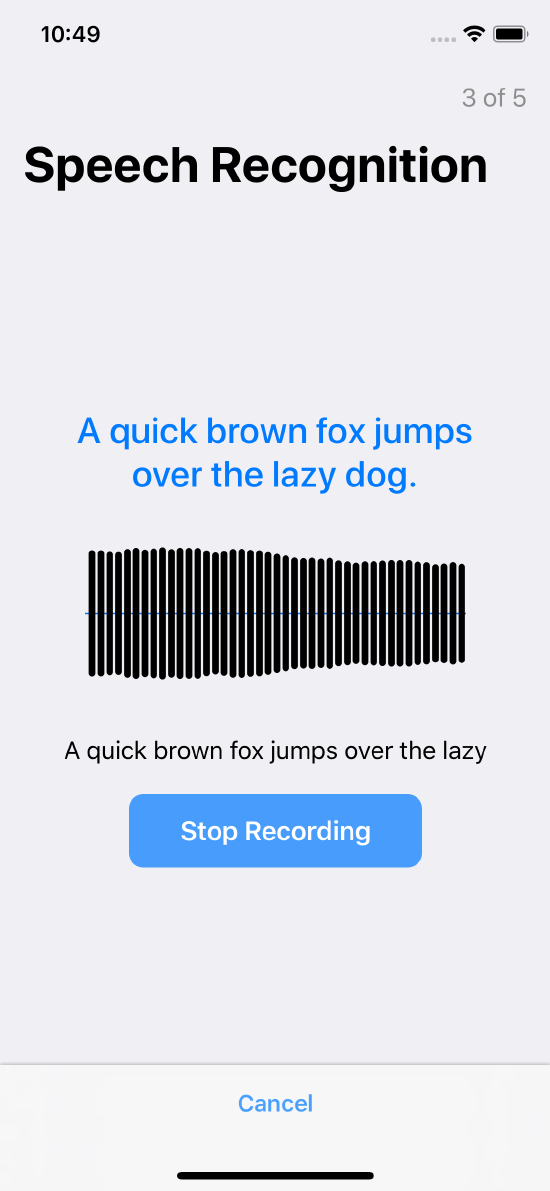

Speech Recognition

Researchers and developers can use ResearchKit to record audio data and produce transcriptions generated by Apple’s speech recognition system. ResearchKit also provides word alignments, confidence scores, and alternative speech recognition hypotheses in the form of an n-best list. Medical researchers and developers can leverage this information to analyze speech and language features like speaking rate, word usage, and pause durations.

In certain cases of unintelligible speech, or speech containing word fragments or meaningless words, the n-best list and the confidence measure reveal uncertainty in the speech recognition system’s hypothesis. Such conditions have been found to be useful indicators of cognitive decline associated with Alzheimer’s disease and related dementias (1, 2), as well as other mental health issues (3). Additionally, researchers and developers can use the raw audio data captured through ResearchKit to investigate and deploy speech indicators for research and system design.

The ORKSpeechRecognitionStep class represents a single recording step. In this step, the user’s speech is recorded from the microphone.

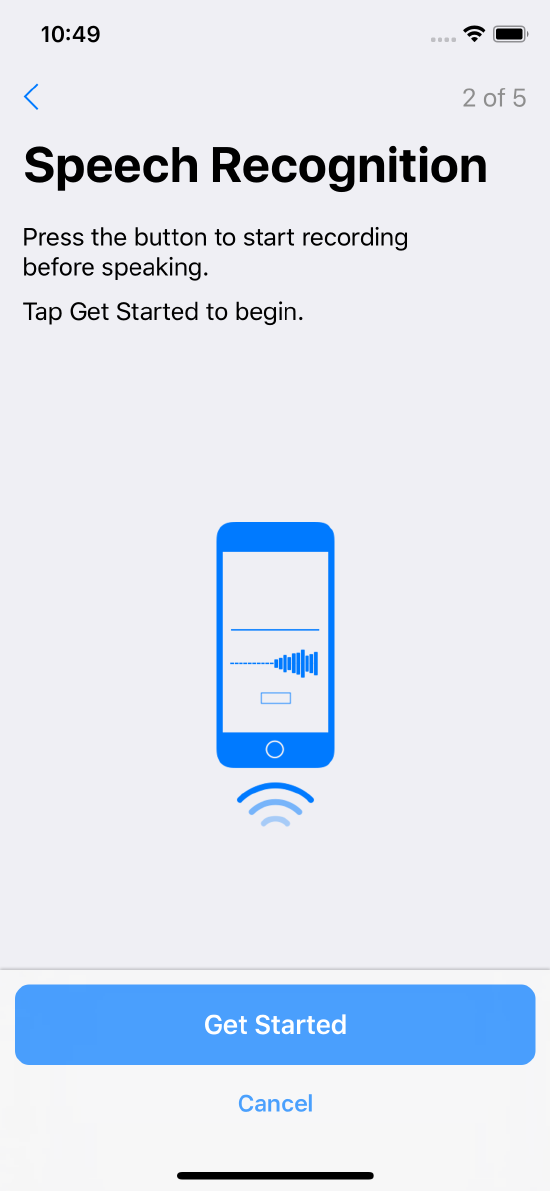

The speech recognition steps, which capture and display the recorded text, are shown in Figure 13.

Instruction step introducing the task

Instruction step introducing the task

Instruct the user to prepare for recording

Instruct the user to prepare for recording

Prompts the user to start the recording

Prompts the user to start the recording

Records the user’s speech

Records the user’s speech

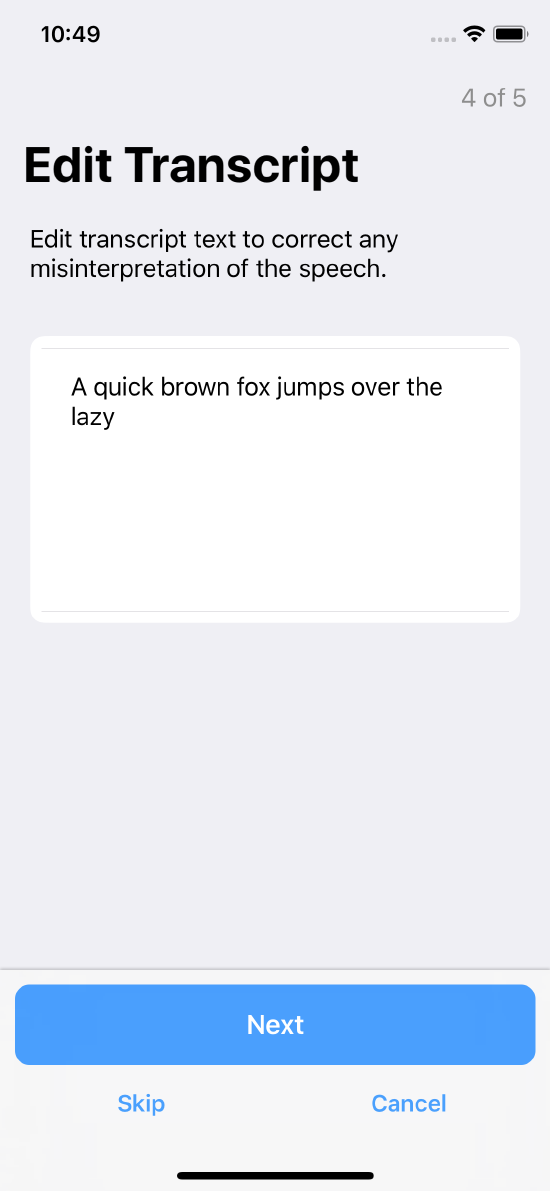

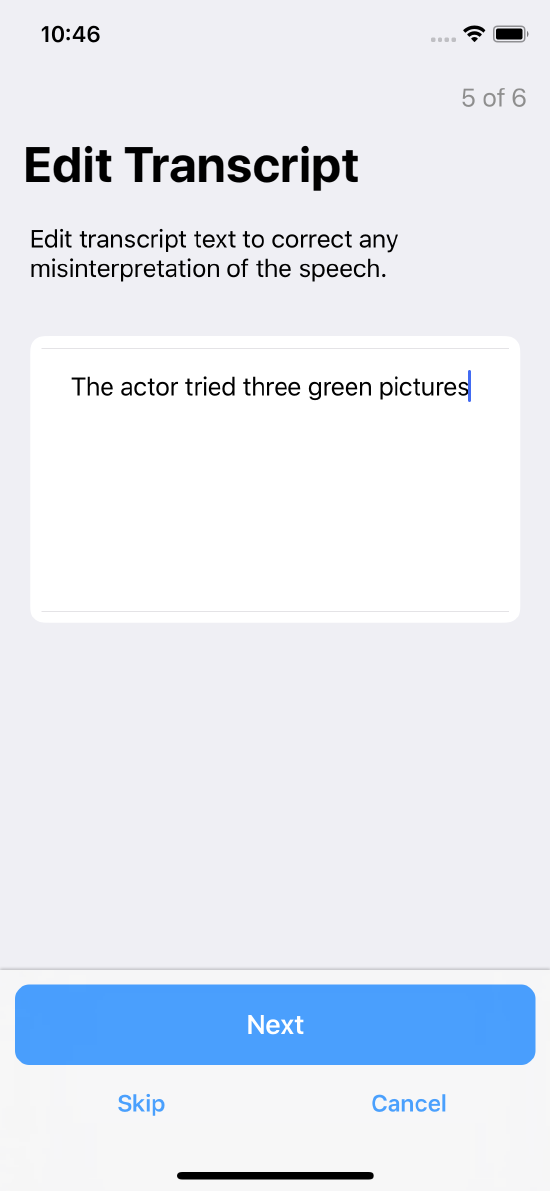

Provides the transcription and allows editing

Provides the transcription and allows editing

Task completion

Task completion

Once a user completes the recording, they are given the option to edit the transcript generated by the speech recognition engine. The data collected by this task consists of three main components:

- The raw audio recording of what the user said.

- The transcription generated by the speech recognition engine, returned as an object of type SFTranscription.

- The edited transcript, if any, by the user.

Speech-in-Noise

Understanding speech in noisy environments depends on both the level of the background noise and the hearing health of the listener. A speech-in-noise test quantifies the difficulty of understanding speech in noisy environments.

A speech-in-noise test consists of presenting spoken sentences at different noise levels and asking listeners to repeat what they heard. Based on the sentence and word recognition rate, a metric is calculated. The speech intelligibility metric used in this test is the Speech Reception Threshold (SRT). It represents the signal-to-noise ratio (SNR) at which 50% of the words are correctly repeated by the user. The SRT is calculated using the Tillman-Olsen formula (4).

The ORKSpeechInNoiseStep class plays the speech from a file set by the speechFileNameWithExtension property mixed with noise from a file set by the noiseFileNameWithExtension property. The noise gain is set through the gainAppliedToNoise property. Use the filterFileNameWithExtension property to specify a ramp-up/ramp-down filter.

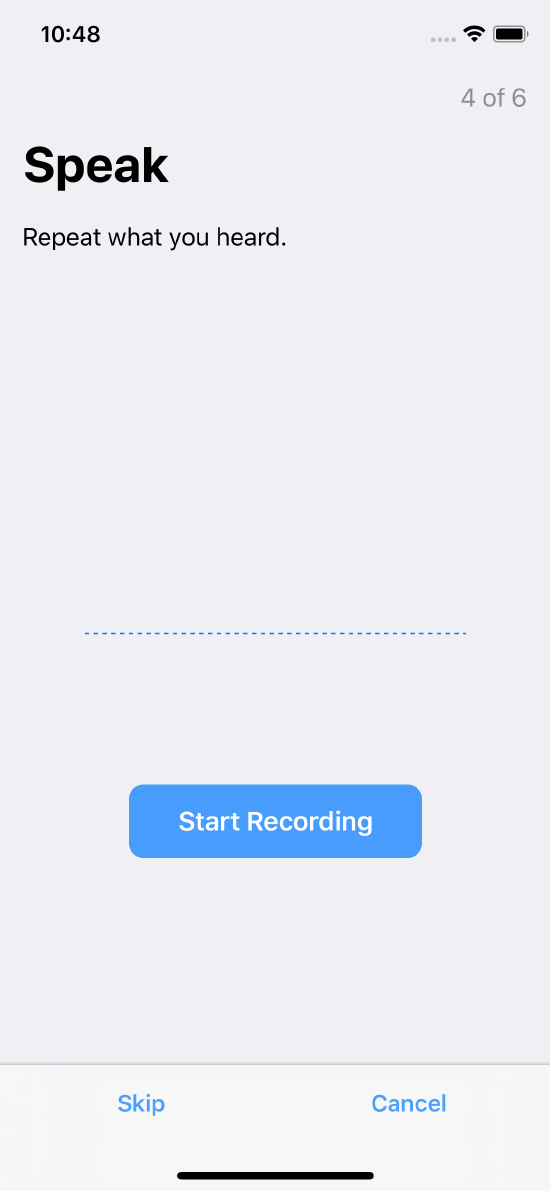

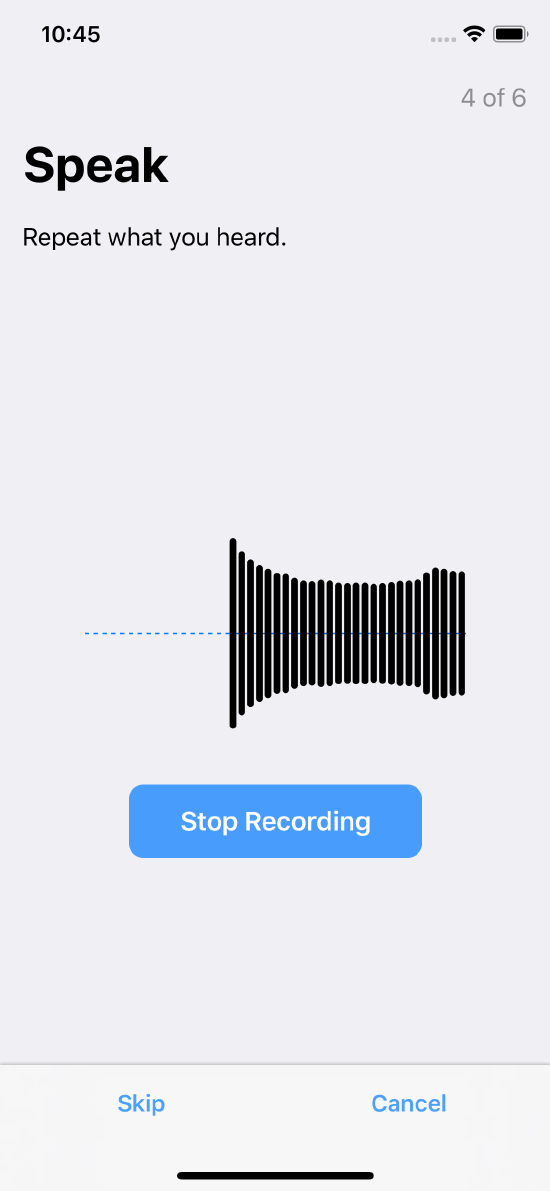

Speech-in-noise steps are shown in Figure 14.

Instruction step introducing the task

Instruction step introducing the task

Instructs the user how to proceed with the task

Instructs the user how to proceed with the task

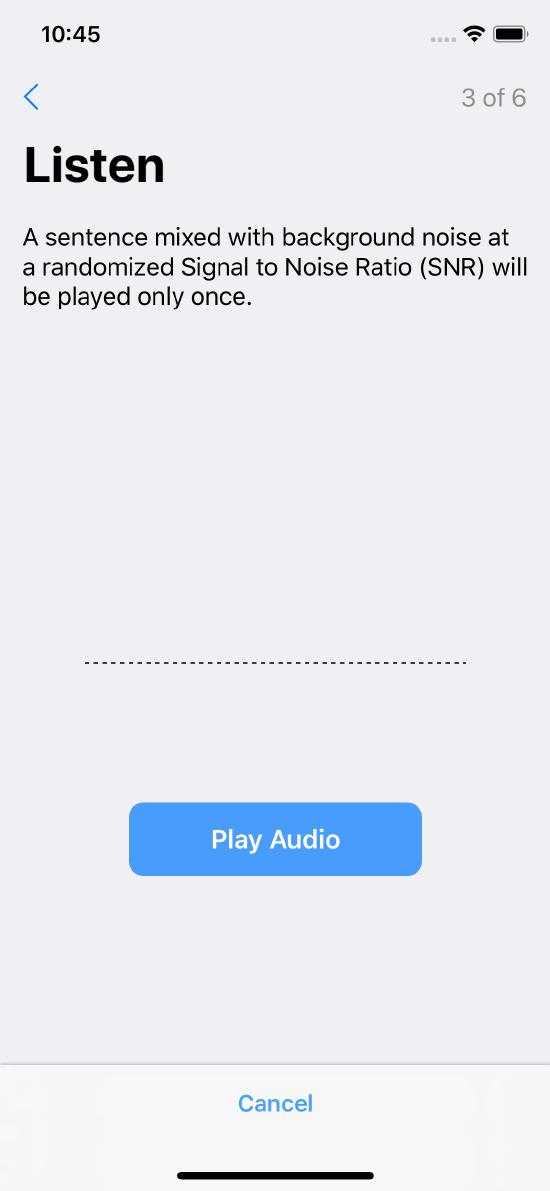

Prompts the user to play the spoken sentence

Prompts the user to play the spoken sentence

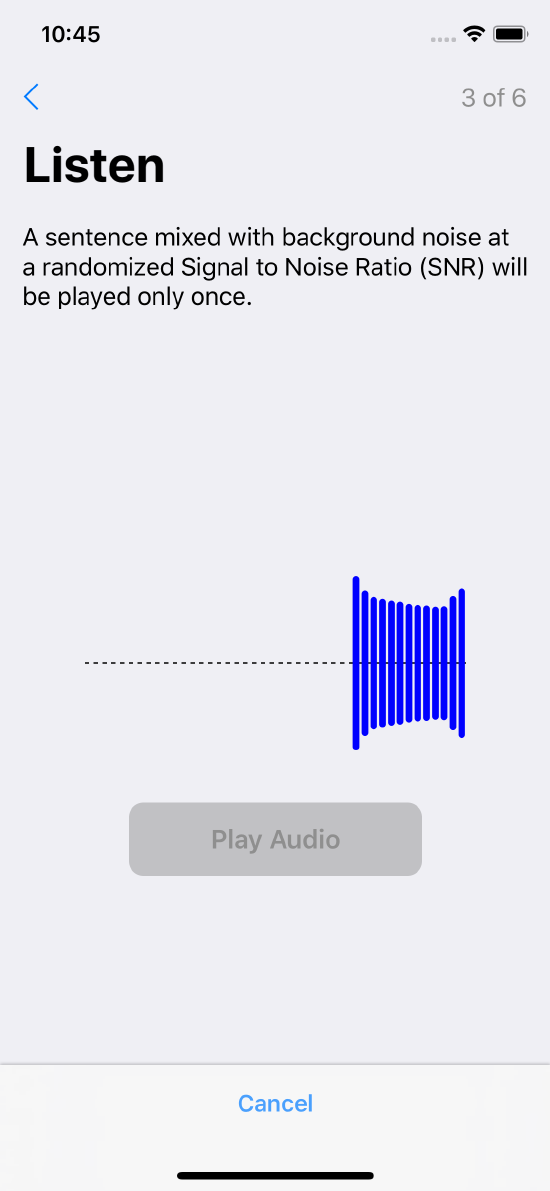

Plays the spoken sentence with background noise

Plays the spoken sentence with background noise

Prompts the user to record and repeat what they heard

Prompts the user to record and repeat what they heard

Records the user’s voice

Records the user’s voice

Task completion

Task completion

Displays spoken text and provides transcript editing

Displays spoken text and provides transcript editing

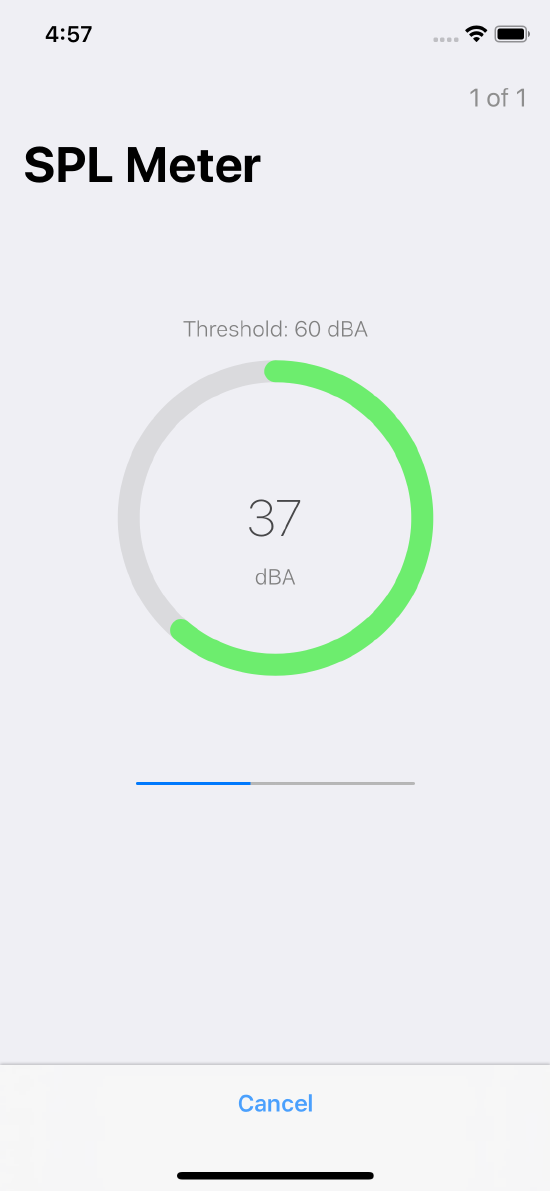

Environment SPL Meter

The Environment SPL Meter is not a task, but a single step that detects the sound pressure level in the user’s environment. Configure this step with the following properties:

- thresholdValue is the maximum permissible value for the environment sound pressure level in dBA.

- samplingInterval is the rate at which the AVAudioPCMBuffer is queried and the A-weighted filter is applied.

- requiredContiguousSamples is the number of consecutive samples below the threshold value required for the step to proceed.
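The interplay between the threshold and the contiguous-sample requirement can be sketched as follows. This is an illustration of the rule as described above, not the framework's code: the firstQuietIndex function is hypothetical, and it assumes the A-weighted readings are already available as an array of dBA values.

```swift
import Foundation

/// Returns the index of the sample at which the step could proceed: the last
/// sample of the first run of `requiredContiguousSamples` consecutive readings
/// below `thresholdValue`. Returns nil if no such run occurs.
func firstQuietIndex(samples: [Double], thresholdValue: Double,
                     requiredContiguousSamples: Int) -> Int? {
    guard requiredContiguousSamples > 0 else { return nil }
    var run = 0
    for (index, level) in samples.enumerated() {
        // A reading at or above the threshold resets the run.
        run = level < thresholdValue ? run + 1 : 0
        if run == requiredContiguousSamples { return index }
    }
    return nil
}
```

A single loud reading resets the count, so a larger requiredContiguousSamples value demands a longer uninterrupted quiet period before the step proceeds.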

The environment SPL meter step is shown in Figure 15.

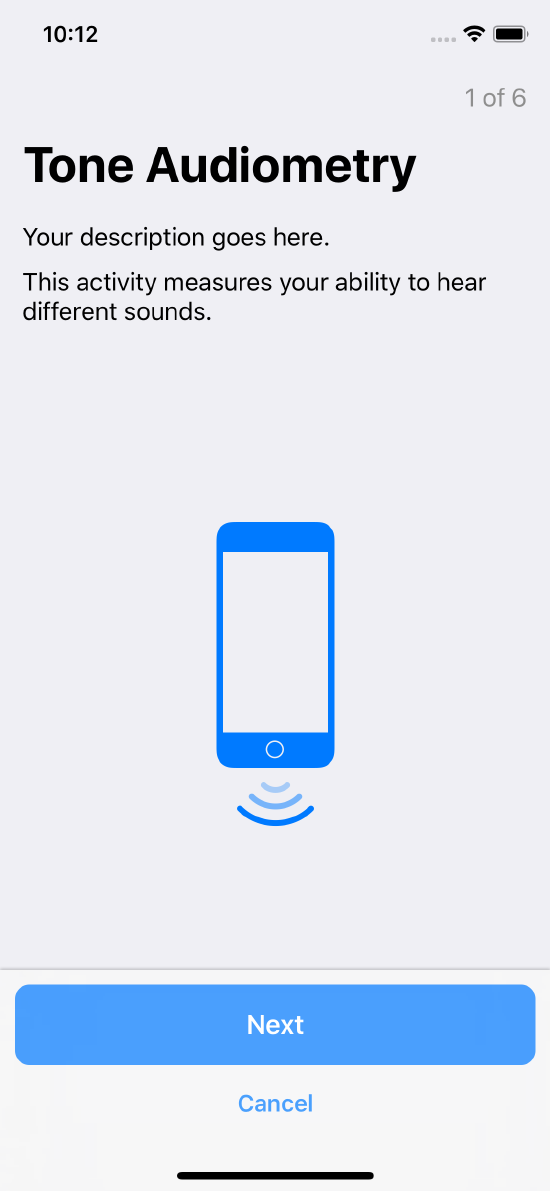

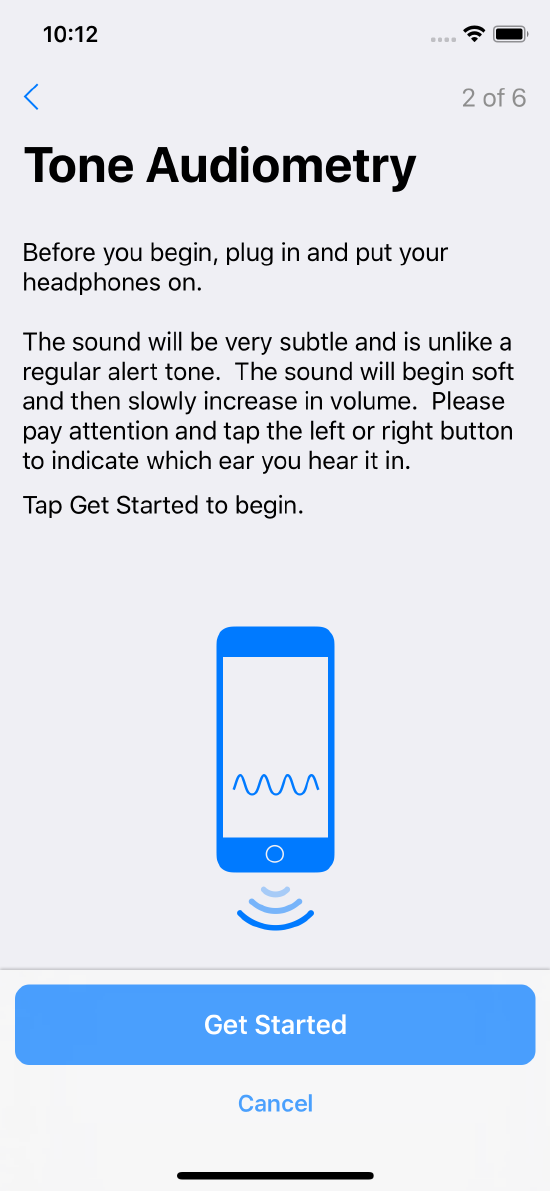

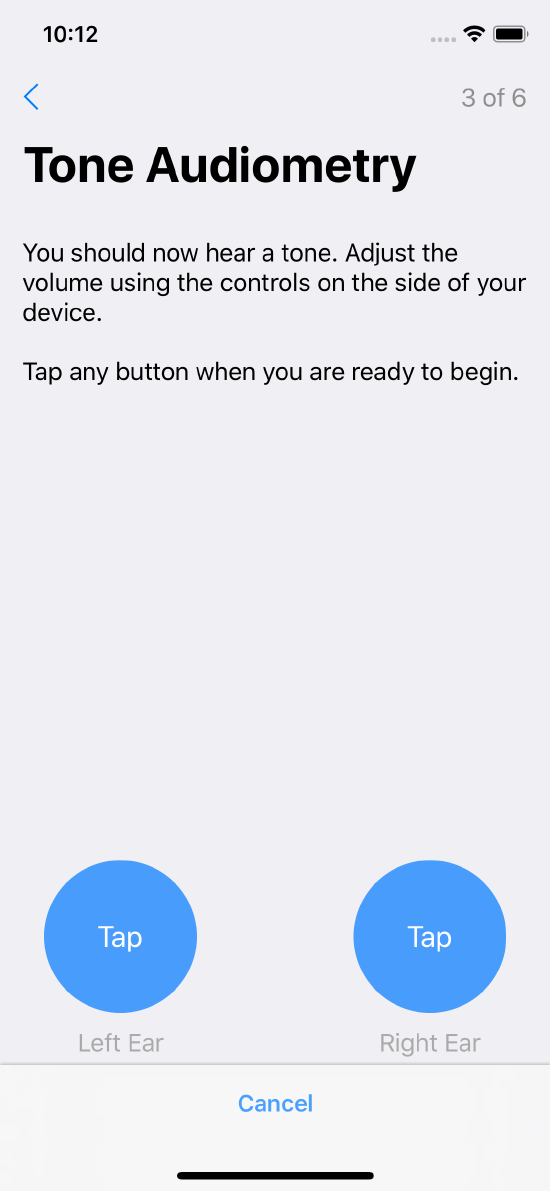

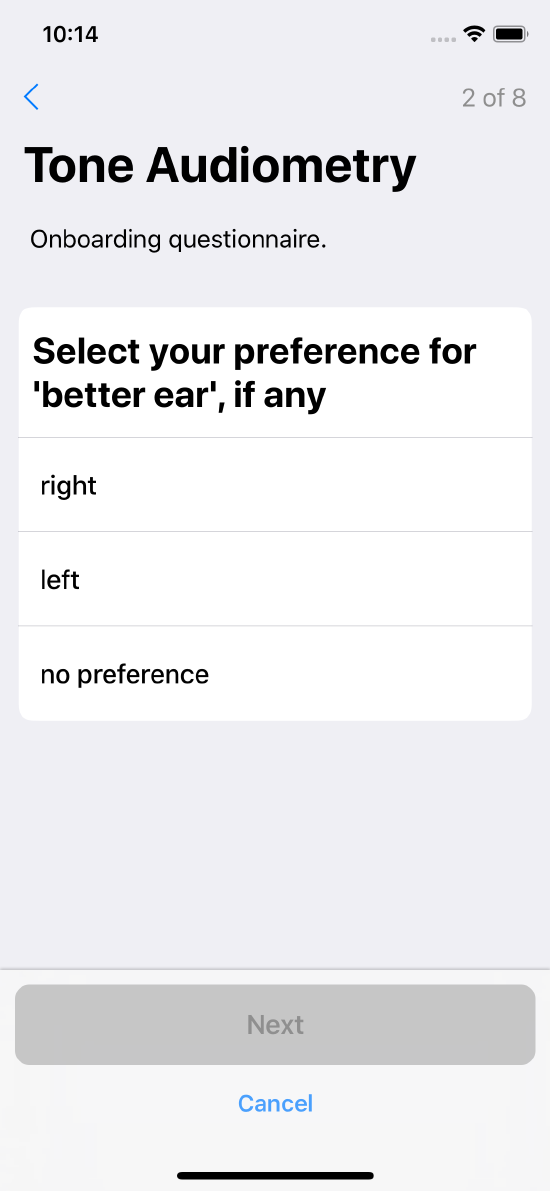

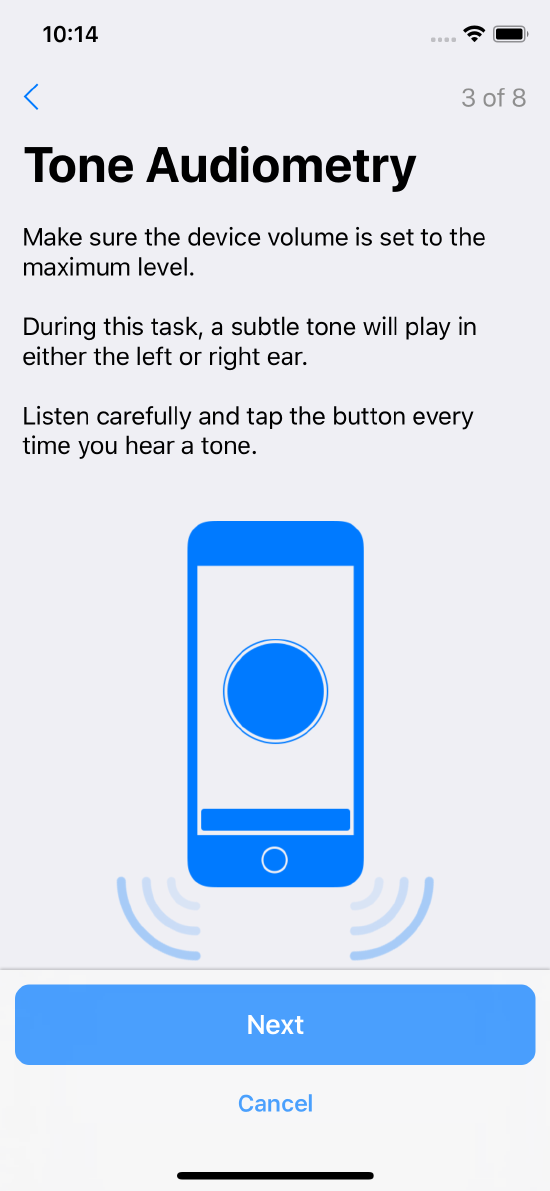

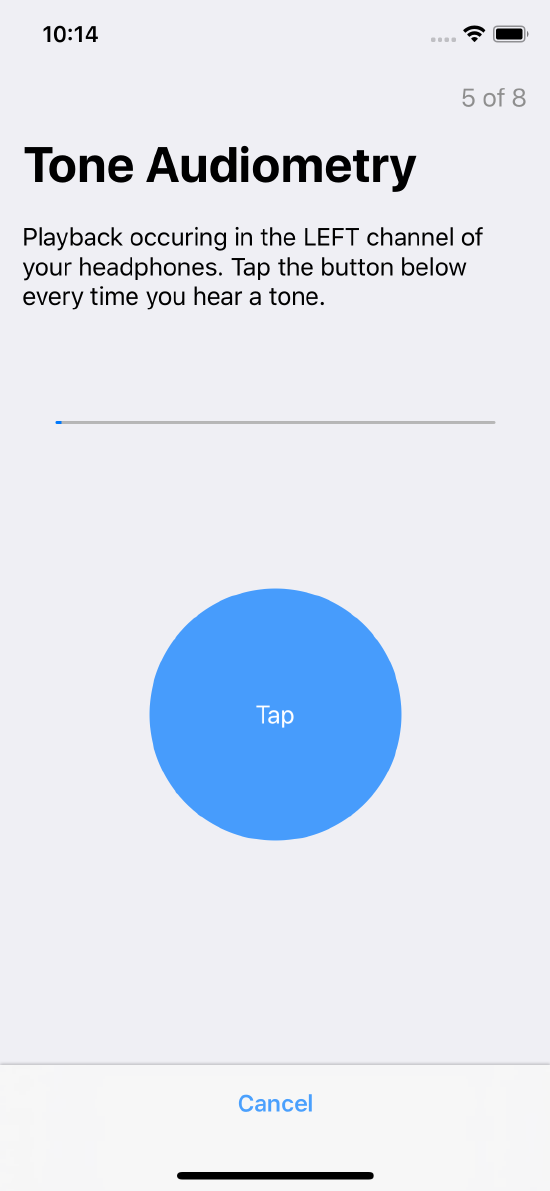

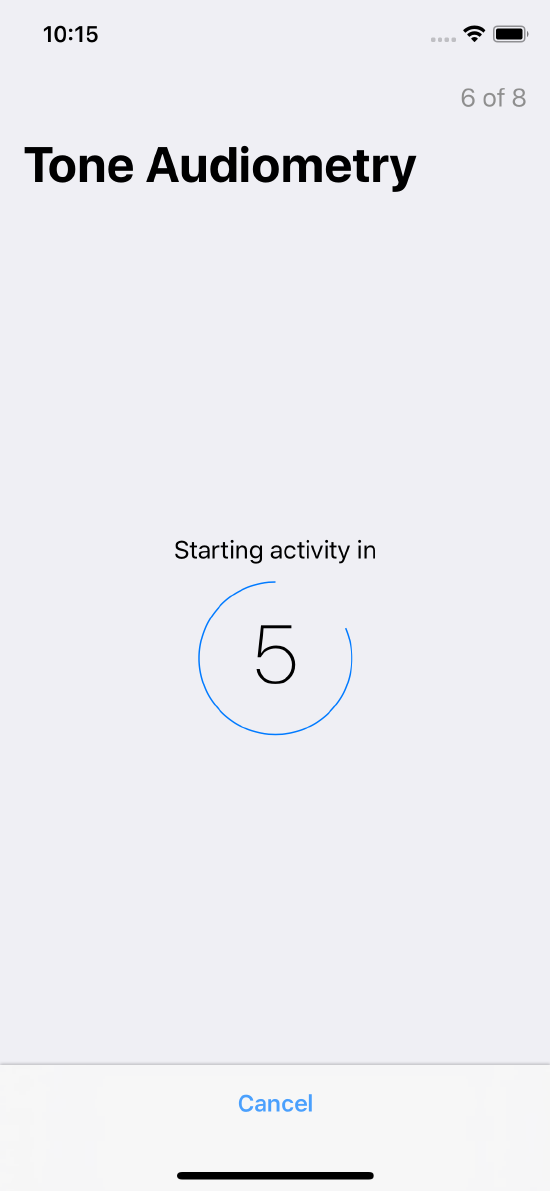

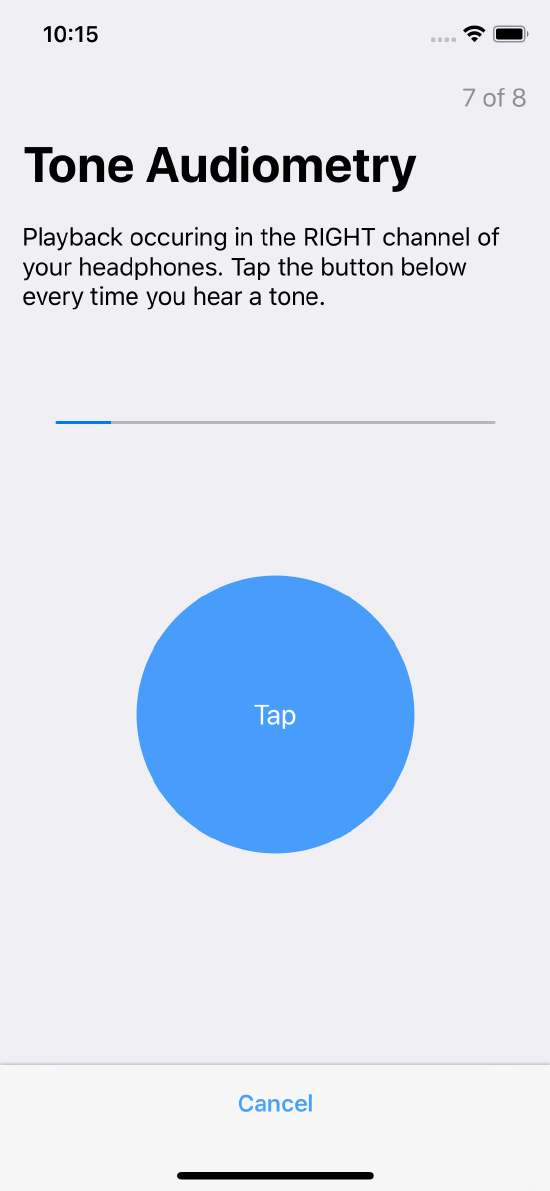

Tone Audiometry

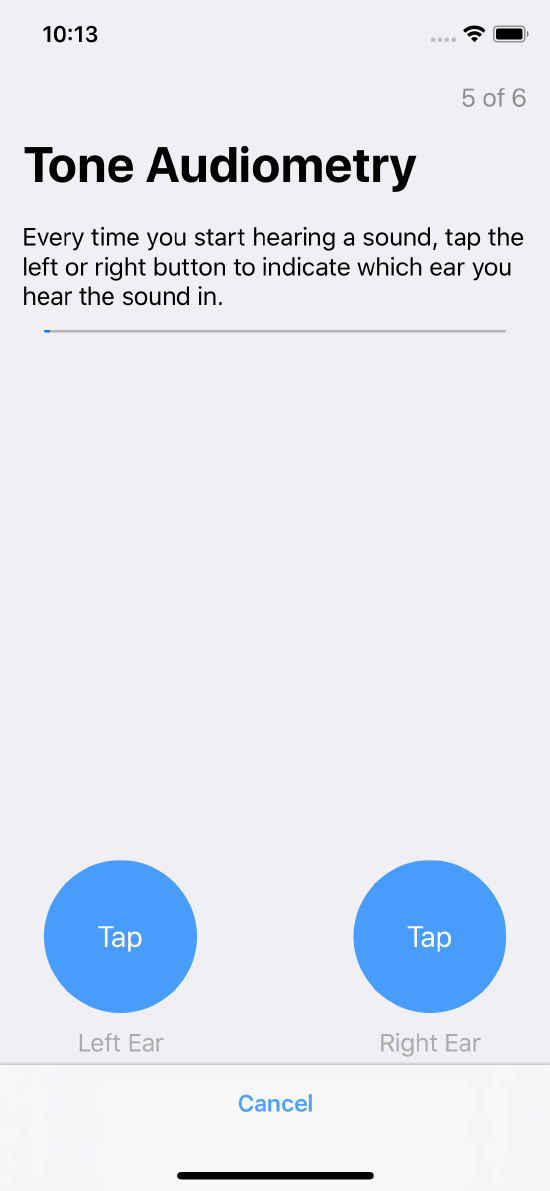

In the tone audiometry task, users listen through headphones to a series of tones, and tap left or right buttons on the screen when they hear each tone. These tones are of different audio frequencies, playing on different channels (left and right), with the volume being progressively increased until the user taps one of the buttons. A tone audiometry task measures different properties of a user’s hearing ability, based on their reaction to a wide range of frequencies. (See the method ORKOrderedTask toneAudiometryTaskWithIdentifier:intendedUseDescription:speechInstruction:shortSpeechInstruction:toneDuration:options:).

Data collected in this task consists of audio signal amplitude for specific frequencies and channels for each ear.

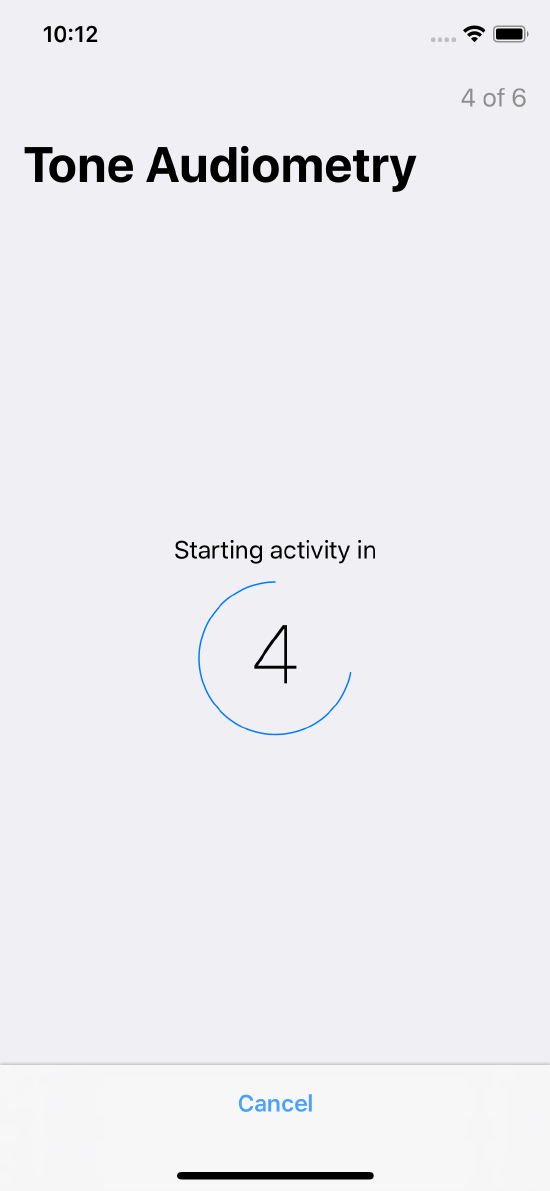

Tone audiometry steps are shown in Figure 16.

Instruction step introducing the task

Instruction step introducing the task

Further instructions

Count down a specified duration to begin the activity

The tone test screen with buttons for left and right ears

Confirms task completion

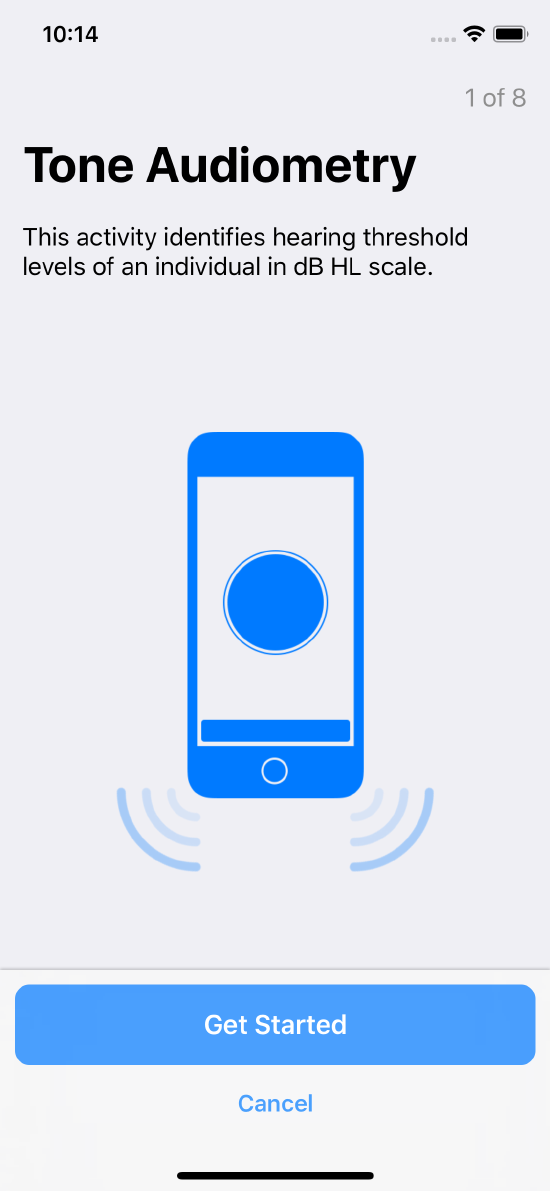

dBHL Tone Audiometry

The dBHL tone audiometry task implements the Hughson-Westlake method of determining hearing threshold. It is similar to the tone audiometry task, except that it utilizes a dB HL scale. (See the method ORKOrderedTask dBHLToneAudiometryTaskWithIdentifier:intendedUseDescription:options:).

Data collected in this task consists of audio signal amplitude for specific frequencies and channels for each ear.
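For readers unfamiliar with the Hughson-Westlake method, the sketch below shows its commonly described step rule: lower the level by 10 dB after a tone is heard and raise it by 5 dB after a tone is missed. It is a simplified illustration, not ResearchKit's implementation. The function names, the level limits, and the "heard at least twice on ascending presentations" criterion are assumptions made for this example.

```swift
import Foundation

/// Simplified Hughson-Westlake step rule (an illustration, not ResearchKit's code):
/// drop 10 dB after a heard tone, rise 5 dB after a missed one, within assumed limits.
func nextLevel(current: Double, heard: Bool,
               minimum: Double = -10, maximum: Double = 100) -> Double {
    let proposed = heard ? current - 10 : current + 5
    return min(max(proposed, minimum), maximum)
}

/// Lowest level that was heard on at least two ascending presentations,
/// given (level, heard) pairs recorded only for ascending presentations.
func estimatedThreshold(ascendingTrials: [(level: Double, heard: Bool)]) -> Double? {
    var heardCounts: [Double: Int] = [:]
    for trial in ascendingTrials where trial.heard {
        heardCounts[trial.level, default: 0] += 1
    }
    return heardCounts.filter { $0.value >= 2 }.keys.min()
}
```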

dBHL tone audiometry steps are shown in Figure 17.

Instruction step introducing the task

Instruction step allowing the user to select an ear

Further instructions

Count down a specified duration to begin the activity

The tone test screen with buttons for the left ear

Count down a specified duration to begin the activity

The tone test screen with buttons for the right ear

Confirms task completion

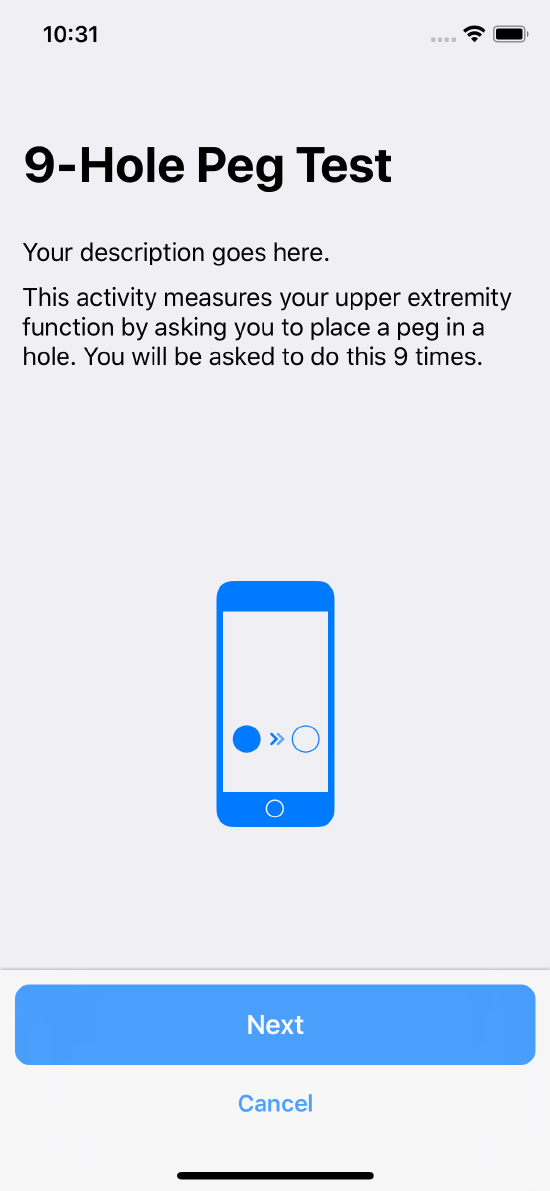

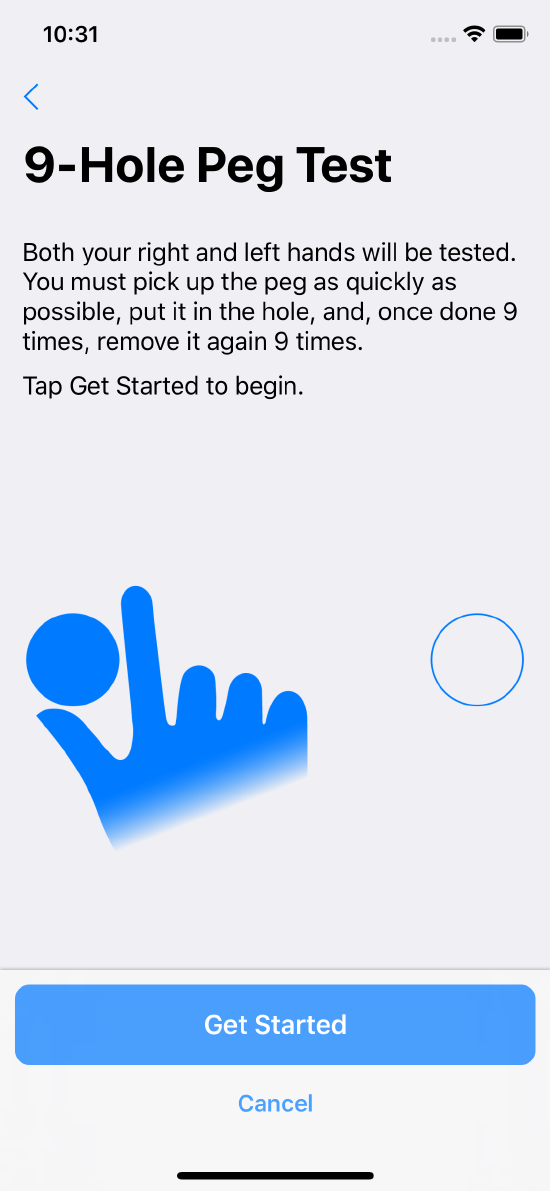

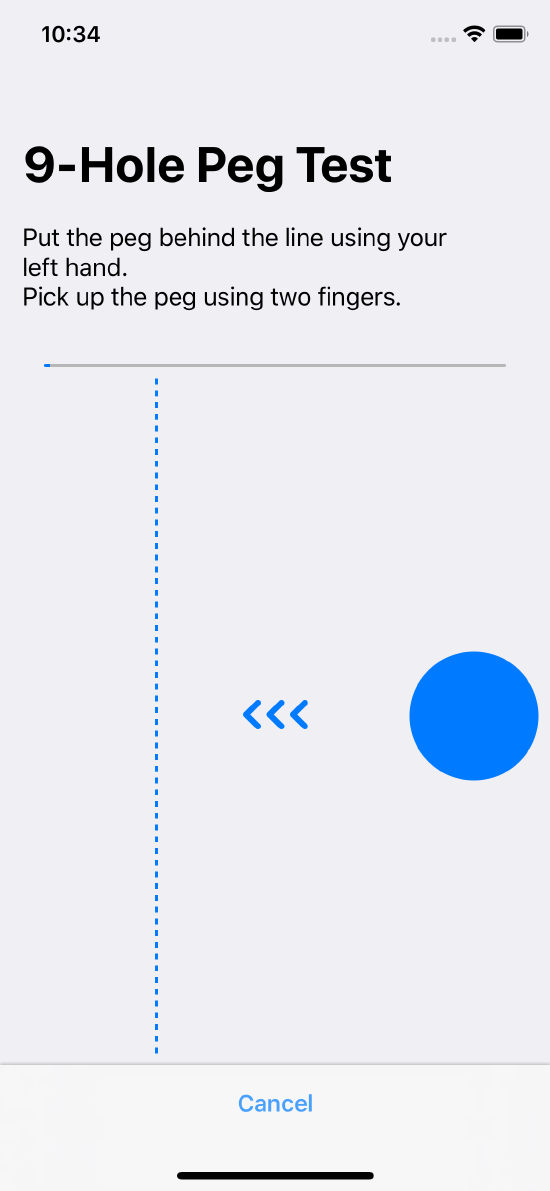

9-Hole Peg Test

The 9-hole peg test is a two-step test of hand dexterity to measure the MSFC score in Multiple Sclerosis, or signs of Parkinson’s disease or stroke. This task is well documented in the scientific literature (see Earhart et al., 2011).

The data collected by this task includes the number of pegs, an array of move samples, and the total duration that the user spent taking the test. Practically speaking, this task generates a two-step test in which the participant must put a variable number of pegs in a hole (the place step), and then remove them (the remove step). This task tests both hands.

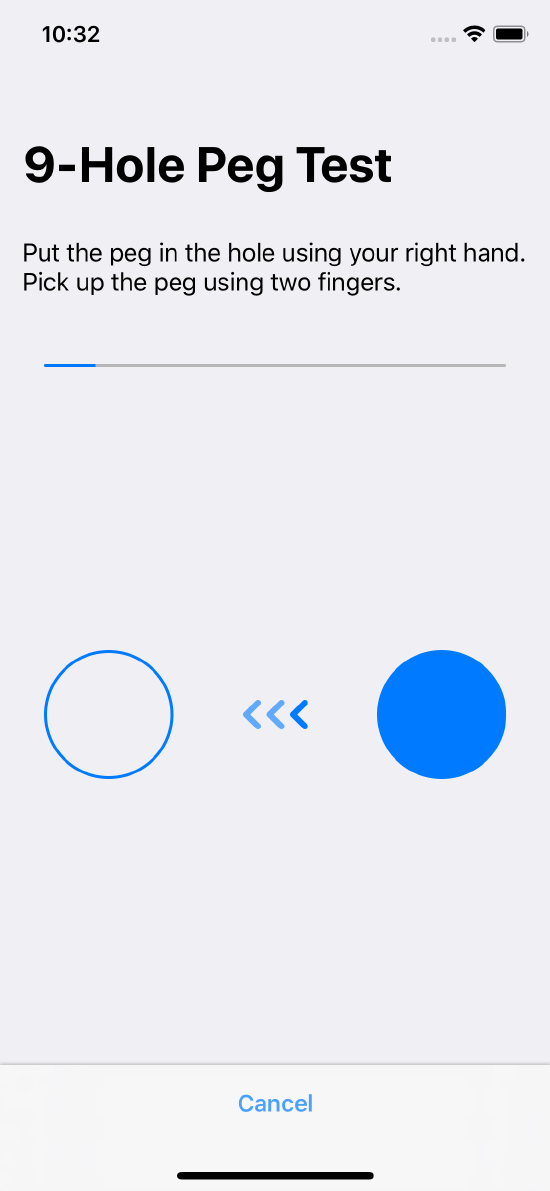

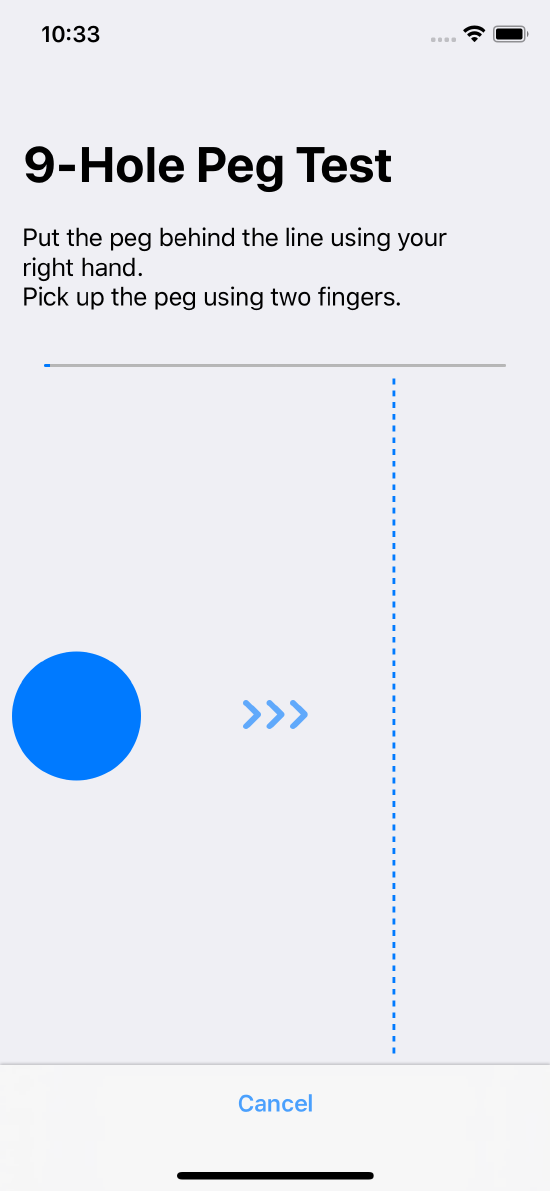

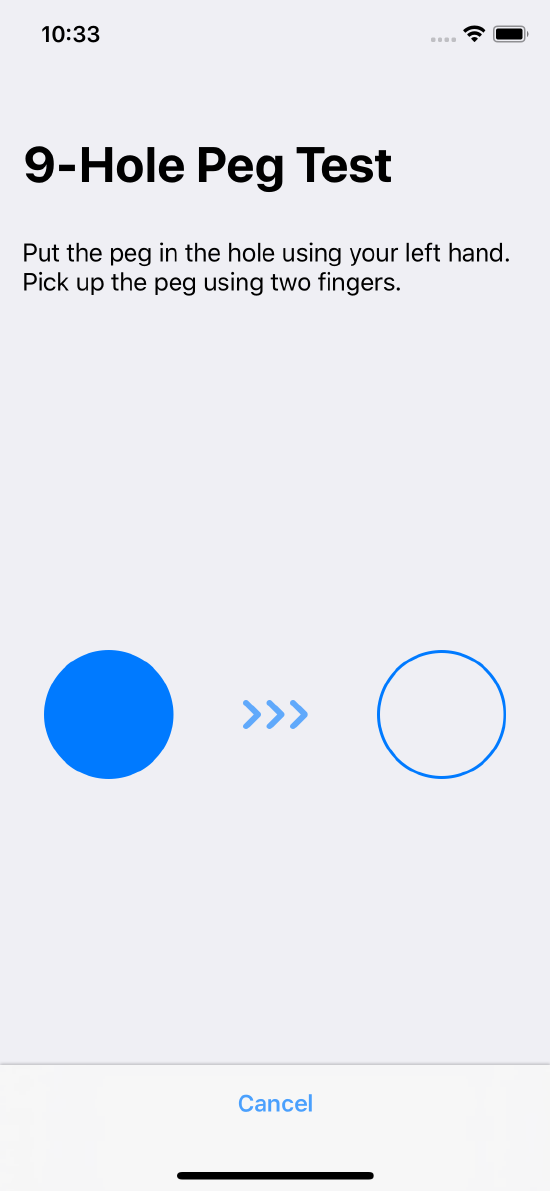

The ORKHolePegTestPlaceStep class represents the place step. In this step, the user uses two fingers to touch the peg and drag it into the hole using their left hand. The ORKHolePegTestRemoveStep class represents the remove step. Here, the user moves the peg over a line using two fingers on their right hand.

9-Hole peg test steps are shown in Figure 18.

Instruction step introducing the task

Describes what the user must do

Instructs the user to perform the step with the right hand

Instructs the user to perform the step with the right hand

Instructs the user to perform the step with the right hand

Instructs the user to perform the step with the left hand

Task completion

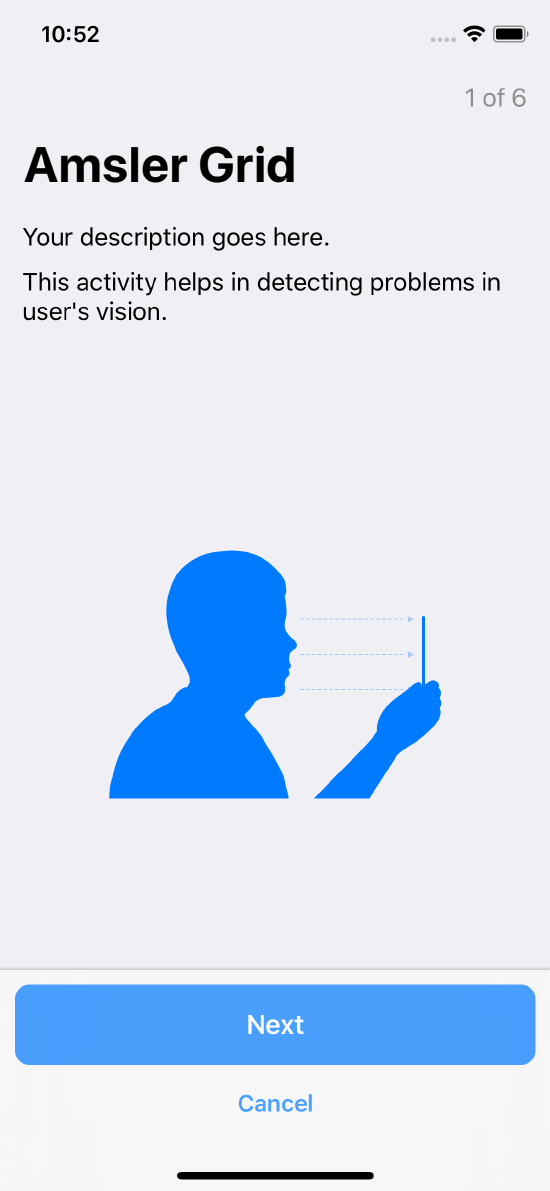

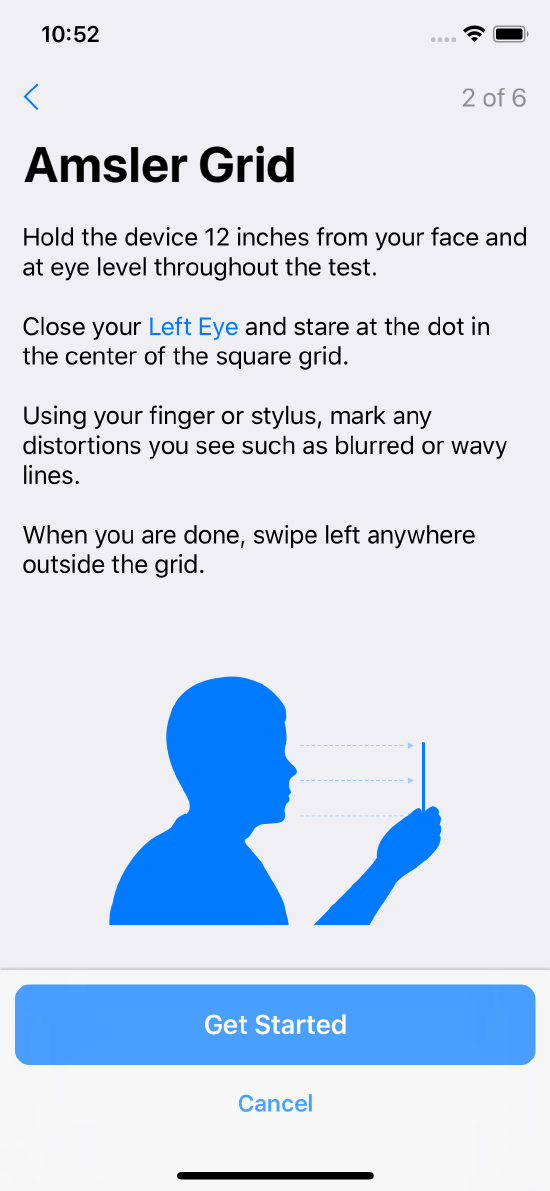

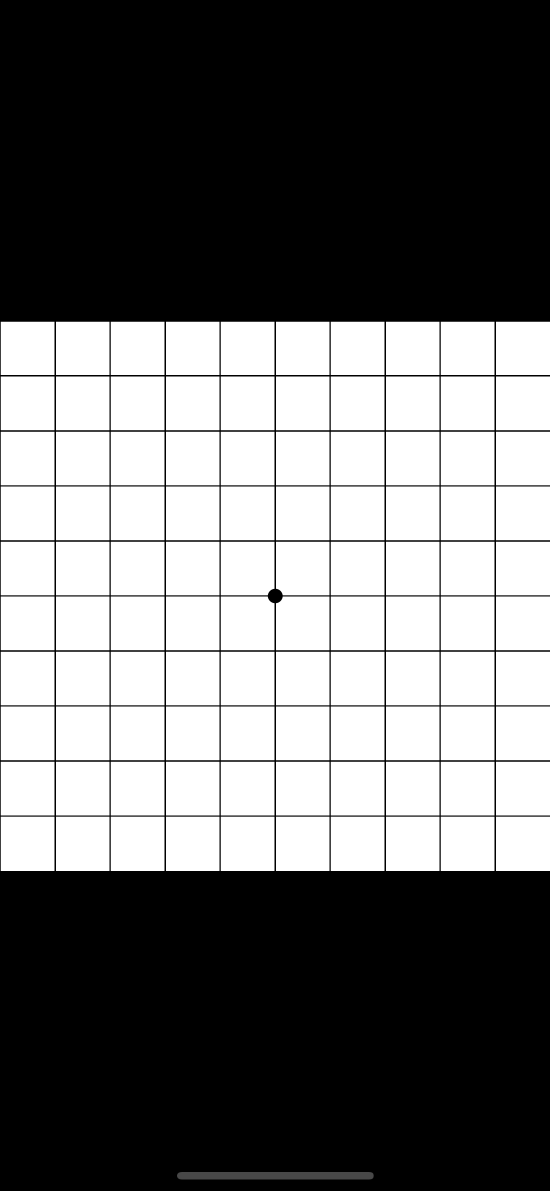

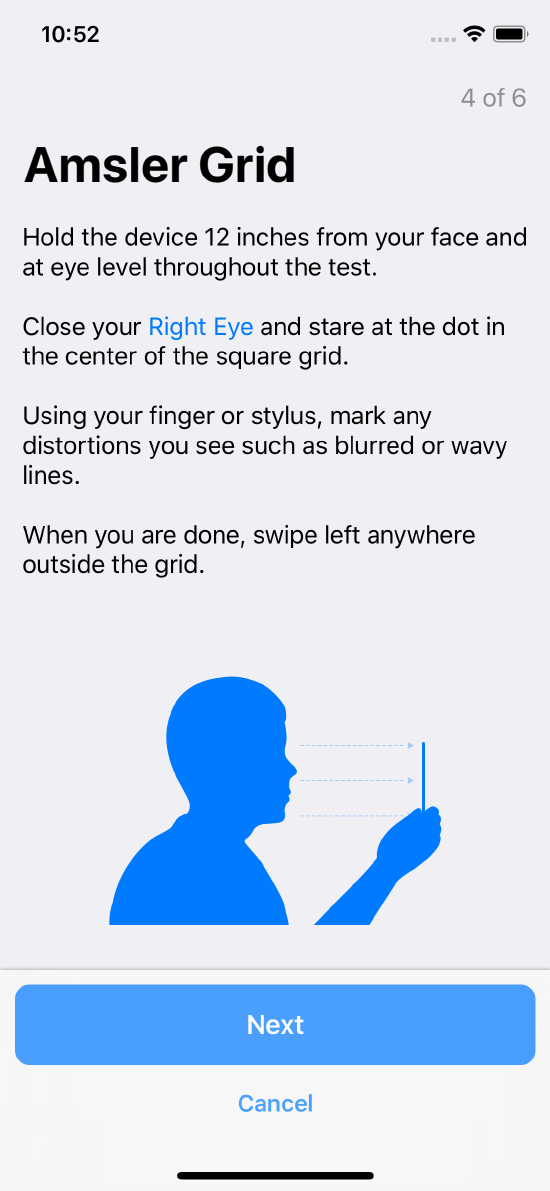

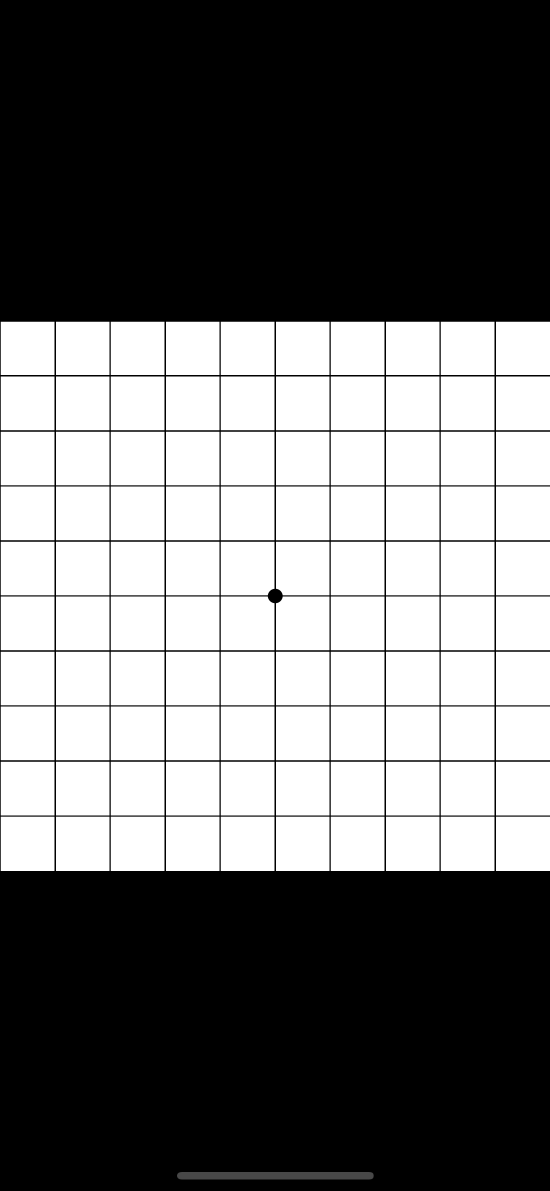

Amsler Grid

The Amsler Grid task is a tool used to detect the onset of vision problems such as macular degeneration.

The ORKAmslerGridStep class represents a single measurement step. In this step, the user closes one eye, inspects the grid for anomalies, and marks the areas that appear distorted using a finger or a stylus.

Data collected by this task is in the form of an ORKAmslerGridResult object for the eye. It contains the eye side (specified by ORKAmslerGridEyeSide) and the image of the grid, along with the user’s annotations for the corresponding eye.

Amsler grid steps for the left and right eyes are shown in Figure 19.

Instruction step introducing the task

Instruct the user how to measure the left eye

Perform the left eye test

Instruct the user how to measure the right eye

Perform the right eye test

Task completion

Collect the Data

The data collected in active tasks is recorded in a hierarchy of ORKResult objects in memory. It is up to you to serialize this hierarchy for storage or transmission in a way that’s appropriate for your application.

For high sample rate data, such as from the accelerometer, use the ORKFileResult in the hierarchy. This object references a file in the output directory (specified by the outputDirectory property of ORKTaskViewController) where the data is logged.

The recommended approach for handling file-based output is to create a new directory per task and to remove it after you have processed the results of the task.
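The per-task directory approach might look like the following sketch, which uses only Foundation's FileManager. The helper names are hypothetical; in an app you would assign the returned URL to the task view controller's outputDirectory property before presenting the task.

```swift
import Foundation

/// Creates a fresh directory for one task run under `baseDirectory`.
func makeOutputDirectory(in baseDirectory: URL, taskRunUUID: UUID = UUID()) throws -> URL {
    let directory = baseDirectory.appendingPathComponent(taskRunUUID.uuidString, isDirectory: true)
    try FileManager.default.createDirectory(at: directory, withIntermediateDirectories: true)
    return directory
}

/// Runs `process` on the directory and removes it afterwards, even if processing throws.
func processAndRemove(_ directory: URL, process: (URL) throws -> Void) throws {
    defer { try? FileManager.default.removeItem(at: directory) }
    try process(directory)
}
```

Naming the directory after a UUID keeps the files from separate task runs apart, so removing one run's directory cannot affect another run's data.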

Active steps support attaching recorder configurations (ORKRecorderConfiguration). A recorder configuration defines a type of data that should be collected for the duration of the step from a sensor or a database on the device. For example:

- The pedometer sensor returns a CMPedometerData object that provides step counts computed by the motion coprocessor on supported devices.

- The accelerometer sensor returns a CMAccelerometerData object that provides raw accelerometer samples indicating the forces on the device.

- A CMDeviceMotion object provides information about the orientation and movement of the device by combining data collected from the accelerometer, gyroscope, and magnetometer.

- HealthKit returns sample types, such as heart rate.

- CoreLocation returns location data (combined from GPS, Wi-Fi and cell tower information).

The recorders used by ResearchKit’s predefined active tasks always use NSFileProtectionCompleteUnlessOpen while writing data to disk, and then change the file protection level on any files generated to NSFileProtectionComplete when recording is finished.

Access Health Data

For HealthKit related data, there are two recorder configurations:

- ORKHealthQuantityTypeRecorderConfiguration to access quantity data such as heart rate.

- ORKHealthClinicalTypeRecorderConfiguration to access health records data.

Access to health quantity and records data requires explicit permission from the user. More information about accessing health record data can be found here.

Create Custom Active Tasks

You can build your own custom active tasks by creating custom subclasses of ORKActiveStep and ORKActiveStepViewController. Follow the example of active steps in ResearchKit’s predefined tasks. (A helpful tutorial on creating active tasks can be found here.)

Some steps used in the predefined tasks may be useful as guides for creating your own tasks. For example:

- ORKCountdownStep displays a timer that counts down with animation for the step duration.

- ORKCompletionStep displays a confirmation that the task is completed.

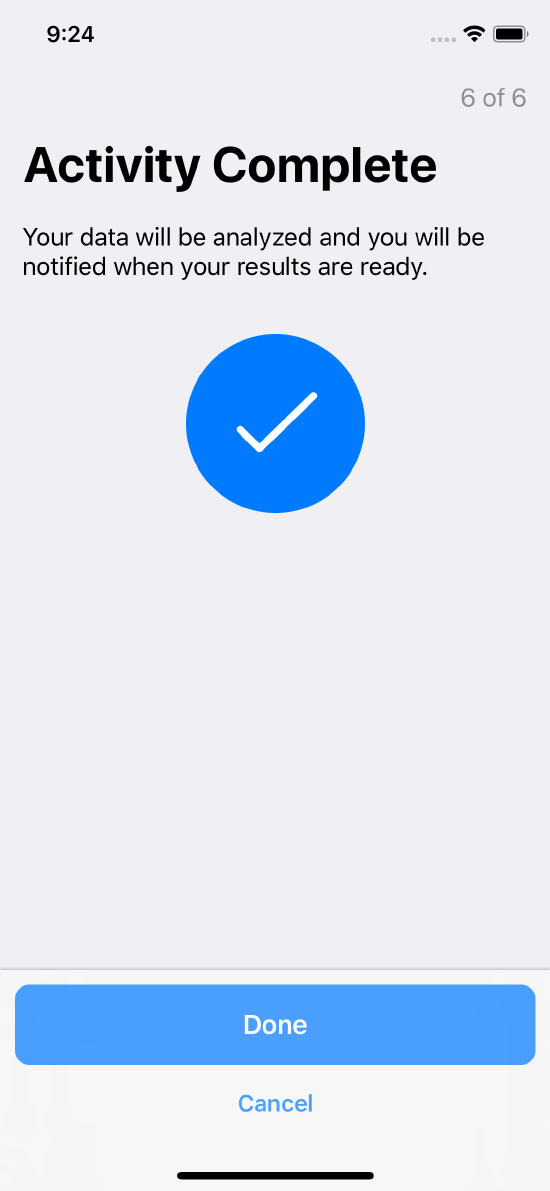

Figure 20 shows examples of custom tasks.

Countdown step

Task completion step


References

1. M. Yancheva, K. C. Fraser, and F. Rudzicz, “Using linguistic features longitudinally to predict clinical scores for Alzheimer’s disease and related dementias,” Proceedings of SLPAT 2015: 6th Workshop on Speech and Language Processing for Assistive Technologies, 2015.

2. A. König, A. Satt, A. Sorin, R. Hoory, O. Toledo-Ronen, A. Derreumaux, V. Manera, F. Verhey, P. Aalten, P. H. Robert, and R. David, “Automatic speech analysis for the assessment of patients with predementia and Alzheimer’s disease,” Alzheimers Dement (Amst), 2015 Mar; 1(1): 112–124.

3. Y. Gong and C. Poellabauer, “Topic Modeling Based Multi-modal Depression Detection,” AVEC@ACM Multimedia, 2017.

4. T. W. Tillman and W. O. Olsen, “Speech audiometry,” in Modern Developments in Audiology (2nd Edition), 1972.