Sep 14, 2018

Back in June, we revealed the all-new ResearchKit 2.0, and we are now happy to announce that, as of last week, the improved stable branch is available on GitHub. Along with the additions and advances released in June, we announced a new development release schedule that moved our stable push to a few months after the June push to master. Over the last three months, the ResearchKit community has actively participated in our efforts to improve the overall stability, quality and support of the framework. ResearchKit 2.0 now includes support for over 40 major languages and numerous updates to aid accessibility features. What’s more, new additions and contributions have also been incorporated into the stable branch during this time. Check out some of the highlights below.

New Additions and Upgrades

- Required Scrolling: This contribution enables developers to require that users scroll to the bottom of the consent document before the “Agree” button becomes active. This new functionality helps emphasize how important it is that users read and understand the full content of the study they are consenting to participate in.

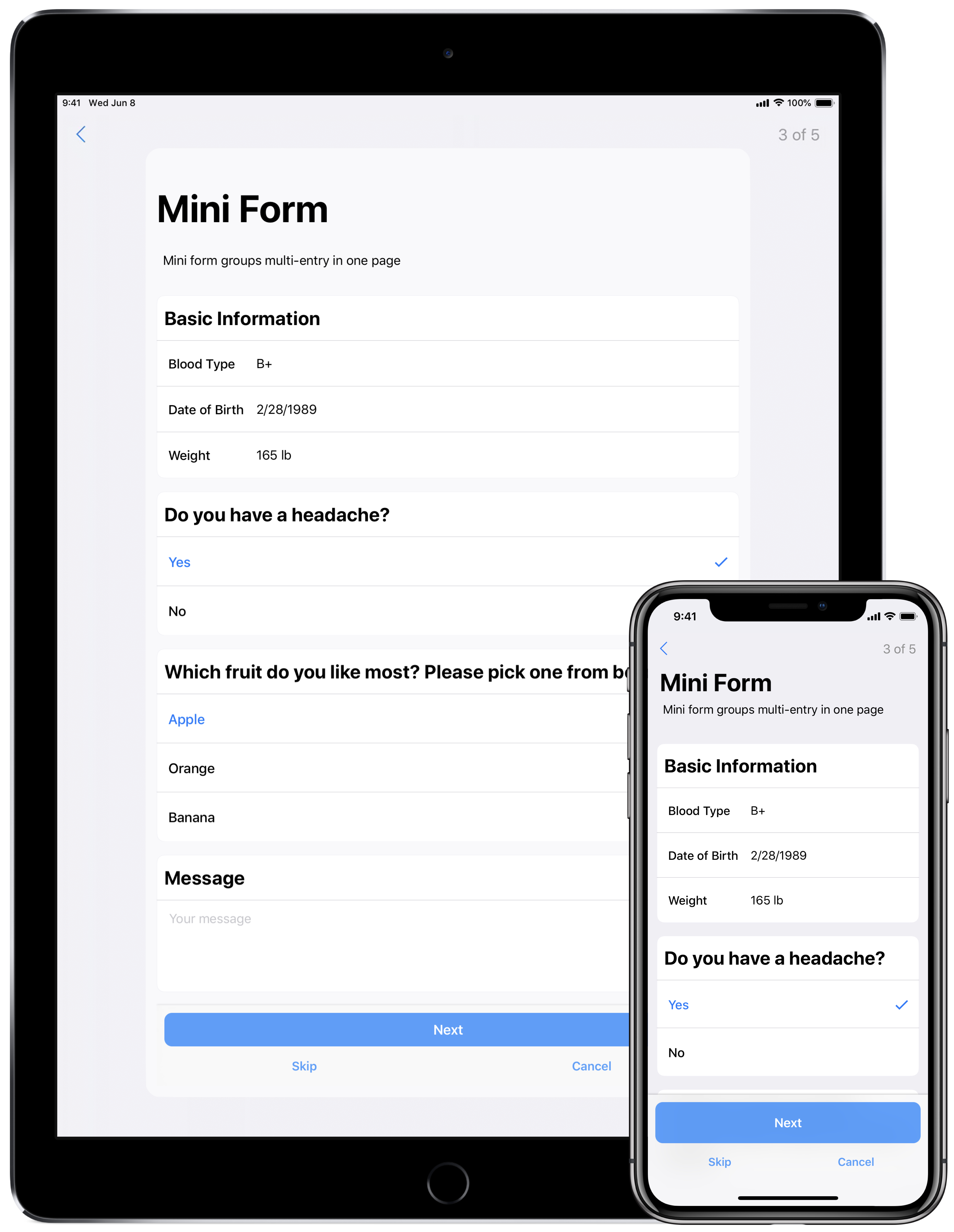

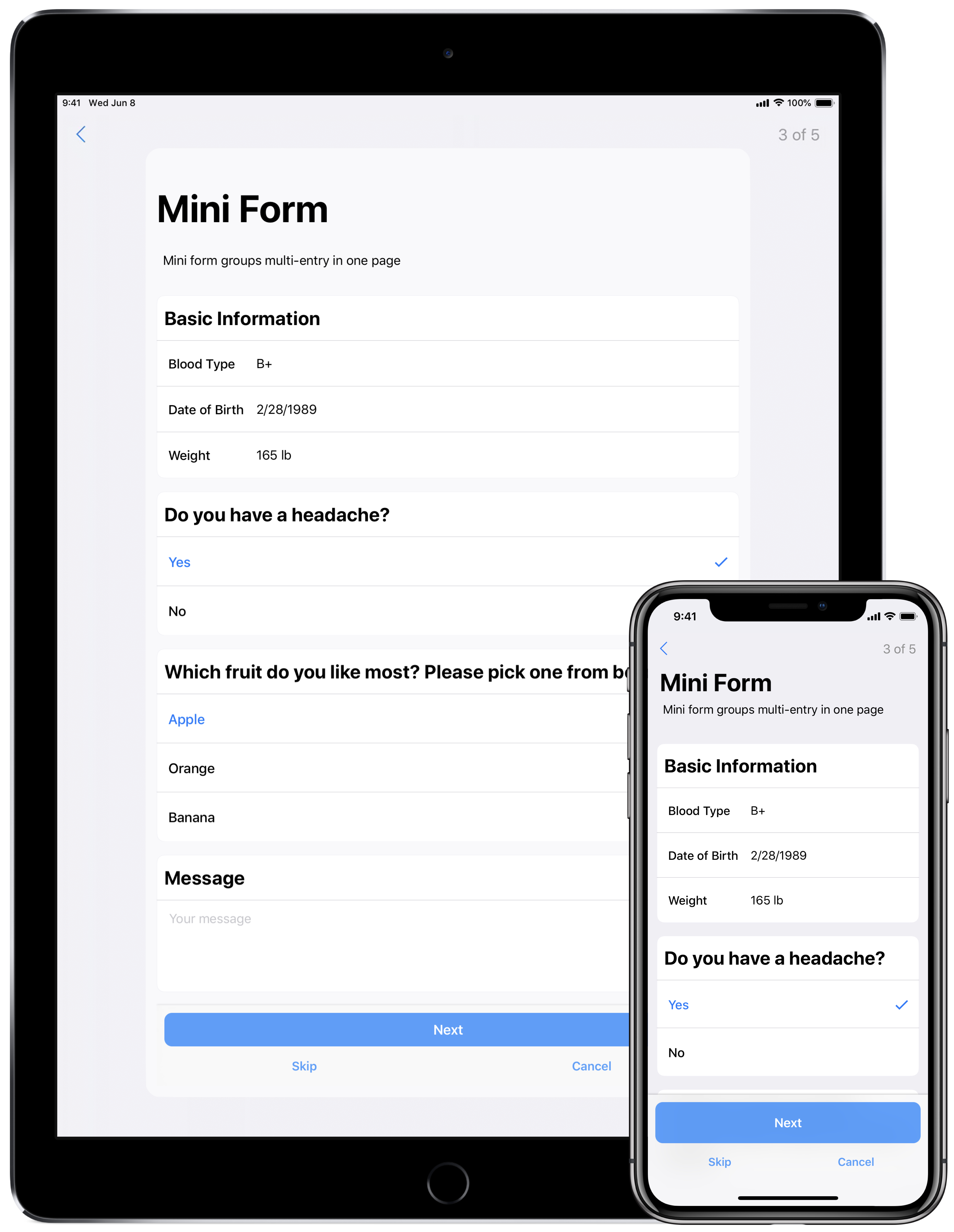

- iPad UI: The new look and feel that you saw last June on the iPhone has now made its way to the iPad. All new card views have been brought to the center and maintain the latest style guidelines to make all of the forms and tasks easy to view and navigate.

- Calibration Data: The AirPods calibration data has been updated with improved accuracy based on internal validation studies and we have also added calibration data for EarPods!

- Speech in Noise: There are now 175 audio files available in ResearchKit. They are stored using Git LFS, which allows these files to be included without inflating the size of the repository. These files are now accessible for use in the new Speech in Noise active task.

- The ResearchKit Team

Jun 13, 2018

Last week we announced the latest ResearchKit 2.0 release, which brings updates across the framework, including community GitHub updates, UI improvements and several new active tasks.

Community

We have made changes that we think will benefit our community in a big way. Earlier this year, we granted GitHub privileges to five all-star community members (check out the announcement details here), and moving forward we are changing our release schedule for ResearchKit 2.0 to allow for more participation from the community. By pushing to the stable branch some time after our initial push to master, we are enabling the developer community to become fully versed in the new changes, make suggestions and pull requests, and then see those changes reflected in the official stable release.

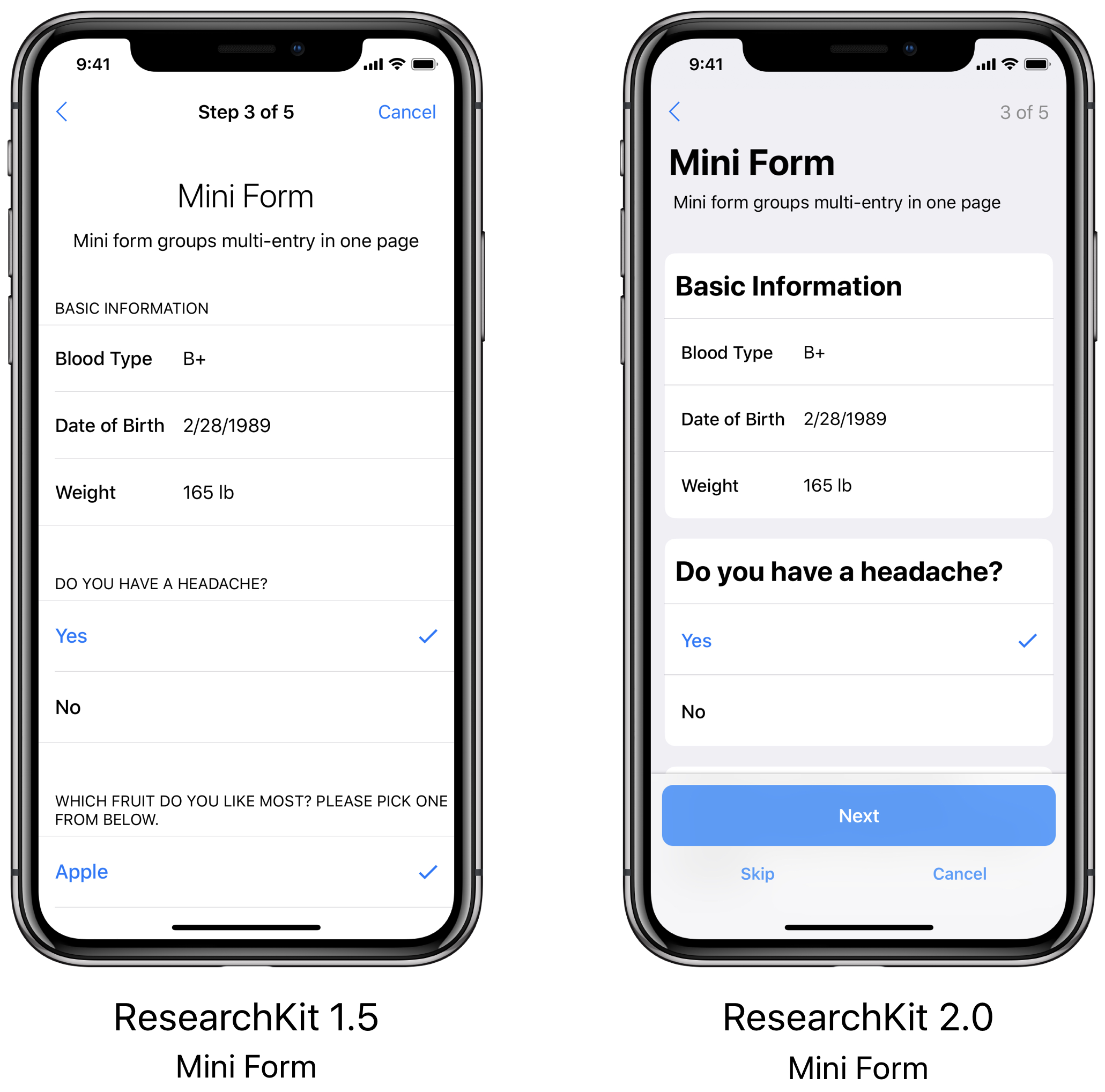

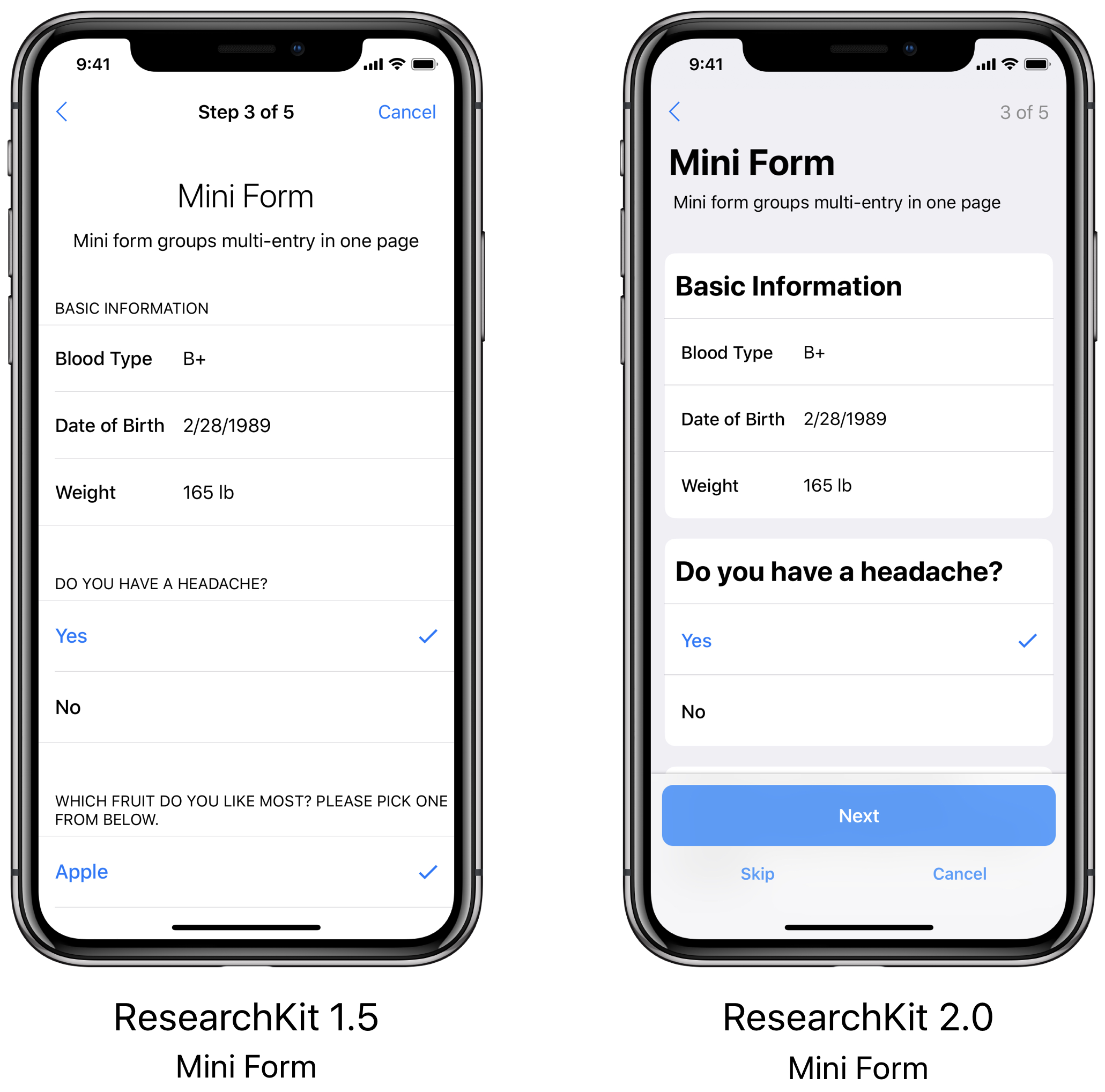

UI Improvements

ResearchKit 2.0 has a whole new look and feel! The UI has been updated across the entire framework to closely reflect the latest iOS style guidelines. Footers now stick to the bottom of all views with filled button styles, and the ‘cancel’ and ‘skip’ buttons have been relocated under the continue button to allow for easier navigation. Additionally, a new card view enhances the look of forms and surveys. All of our updates are aimed at making the experience more enjoyable and intuitive for ResearchKit app users.

New Features

ResearchKit 2.0 includes several new tasks and features that enable researchers to collect information in innovative ways that will lead to more insightful results.

- PDF Viewer: A step that enables users to quickly navigate, annotate, search and share PDF documents.

- Speech Recognition: A task that asks participants to describe an image or repeat a block of text and can then transcribe users’ speech into text and allow editing if necessary.

- Speech in Noise: A task that spans speech and hearing health and allows developers and researchers to assess results on participants’ speech reception thresholds by having participants listen to a recording that incorporates ambient background noise as well as a phrase, and then asking users to repeat phrases back.

- dBHL Tone Audiometry: A task that uses the Hughson-Westlake method for determining the hearing threshold level of a user in the dB HL scale. To facilitate this task we have also open-sourced calibration data for AirPods.
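
For readers unfamiliar with the Hughson-Westlake method mentioned above, here is a minimal, language-agnostic sketch of its down-10/up-5 staircase, written in Python. It is not ResearchKit's implementation: the function name, the `hears` callback, the default levels, and the simplified stopping rule (two responses at the same level on ascending presentations) are all assumptions made for illustration.

```python
from typing import Callable, Optional


def hughson_westlake_threshold(
    hears: Callable[[int], bool],
    start_db: int = 30,
    min_db: int = -10,
    max_db: int = 100,
    max_presentations: int = 50,
) -> Optional[int]:
    """Estimate a hearing threshold in dB HL with a simplified staircase.

    `hears(level)` is a hypothetical callback: it presents a tone at `level`
    and returns True if the participant responded.
    """
    ascending_hits = {}  # level -> number of responses on ascending presentations
    level = start_db
    ascending = False  # the very first presentation is not part of an ascent

    for _ in range(max_presentations):
        if hears(level):
            if level <= min_db:
                return min_db  # heard at the floor of the test range
            if ascending:
                ascending_hits[level] = ascending_hits.get(level, 0) + 1
                # Simplified criterion: two ascending responses at one level.
                if ascending_hits[level] >= 2:
                    return level
            level = max(min_db, level - 10)  # response: drop 10 dB
            ascending = False
        else:
            if level >= max_db:
                return None  # no response even at the ceiling
            level = min(max_db, level + 5)  # no response: raise 5 dB
            ascending = True

    return None  # did not converge within the presentation budget
```

With a simulated listener whose true threshold is 22 dB HL, `hughson_westlake_threshold(lambda level: level >= 22)` returns 25, the nearest level at or above the threshold that the 5 dB steps starting from 30 dB HL can reach. A real task would also need calibrated output levels, which is where the open-sourced calibration data comes in.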

Apr 24, 2018

Novartis, a global leader in ophthalmology, is launching FocalView, an ophthalmic app created with Apple ResearchKit and designed to modernize clinical trials in ophthalmology.

Using an open-source platform created by Apple, Novartis has made the app freely available to the scientific community, allowing researchers to collect real-time, self-reported data, directly from consenting patients. By potentially enabling more accurate tracking and measurement of disease progression and creating more sensitive clinical trial endpoints, the aim is to gain a more nuanced understanding of ophthalmic diseases, thereby potentially accelerating the development of novel treatments.

The app will track patient-reported outcomes related to ocular symptoms, visual functions and quality of life:

- Visual Acuity and Contrast Sensitivity: Using the Landolt C eye test, the app aims to assess the user’s ability to identify the gap in the C at various sizes as well as contrast levels

- Steps Outside the Home: Designed to track the activity of a user, the app sets a home area and uses geofencing technology to track when the user leaves home and how many steps they take. For those participating in the clinical trial, this activity is designed to identify potential links between the efficacy of the medicine and a participant’s confidence in leaving the home

- Mood Survey and Questionnaire: Weekly reminders allow users to track their mood status, including ‘sad’, ‘somewhat sad’, ‘somewhat happy’ and ‘happy’. A questionnaire (VFQ-25) also enables users to evaluate the impact of visual function on daily activities

- Adjusting to Darkness: Measures the length of time required for the user’s eyes to adjust to darkness and identify an object

Previously, options for capturing data in traditional ophthalmic clinical trials have been inflexible and infrequent, making it difficult for researchers to monitor patients’ disease activity and understand real-world patient experiences. FocalView could provide researchers with a greater volume of real-world, patient-reported data, creating the opportunity for more flexible and accessible clinical trial designs.

FocalView will be tested in a prospective, non-interventional study to evaluate the app’s efficacy and usability in assessing visual function. Researchers will in turn assess ease of use, level of enrollment and the ability to obtain important documentation for future clinical trial research, such as informed consent. In the next phase, the app will be validated against traditional visual testing that takes place within conventional clinical settings.

The app is now available to download from the App Store in the US. Consent to contribute to research data will be required before a user can interact with the tool.

To learn more, visit Novartis

- Novartis Ophthalmology

Jan 25, 2018

To kick off the New Year and celebrate the developer community that has helped contribute to and grow ResearchKit, we are granting write-level permissions to some of the most active members within the ResearchKit GitHub community. This core group will collaborate with Apple team members to review and merge pull requests, assist in developing guidelines to showcase best practices, and help prioritize new features for the framework.

Please join us in congratulating Nino Guba, Erin Mounts, Ricardo Sanchez-Saez, Fernando Waigandt, and Shannon Young! We very much appreciate your engagement, thoughtfulness and contributions, and look forward to the great work you’ll continue to do on the platform.

Over time we fully expect this core group to grow and include more outstanding contributors. This is an exciting step for the thriving ResearchKit community, and we look forward to building and growing more, together.

- The ResearchKit Team

Nov 1, 2017

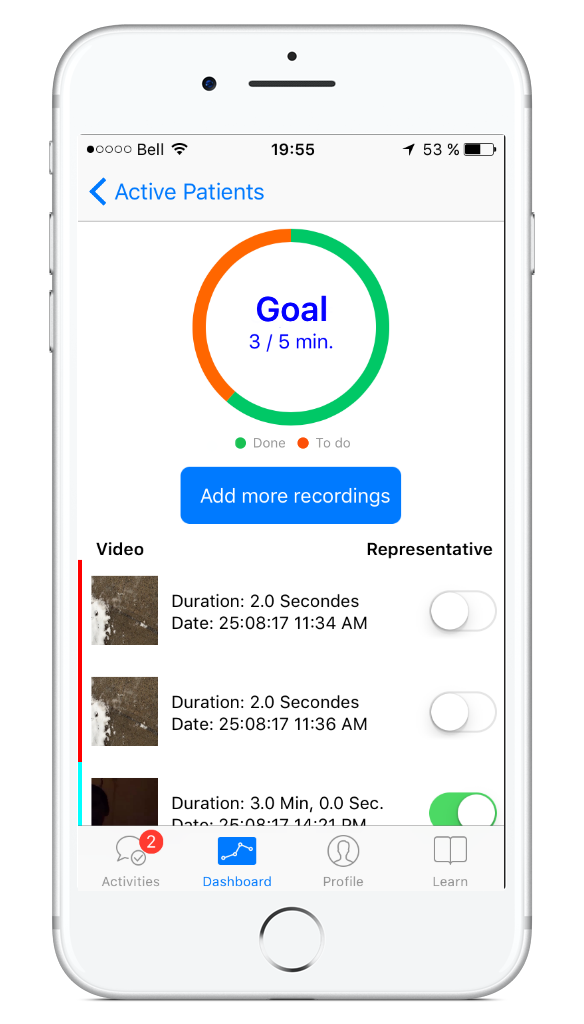

Much like white coat syndrome at the doctor’s office, children with speech-language problems do not always speak at their best during their first diagnostic appointment with the Speech-Language Pathologist (SLP). This increases assessment time and affects the validity of the speech-language assessment. Capturing a child’s spontaneous speech-language in a natural environment improves the child’s diagnostic assessment. Hence, our challenge was to help parents record the speech of their child in a natural environment, such as the home, prior to the first appointment with the SLP. Our team developed ELMo, a platform that allows parents to capture video recordings of their child's speech-language at home with their iPhone or iPad. The video is sent to the SLP on a web portal and app prior to their first appointment.

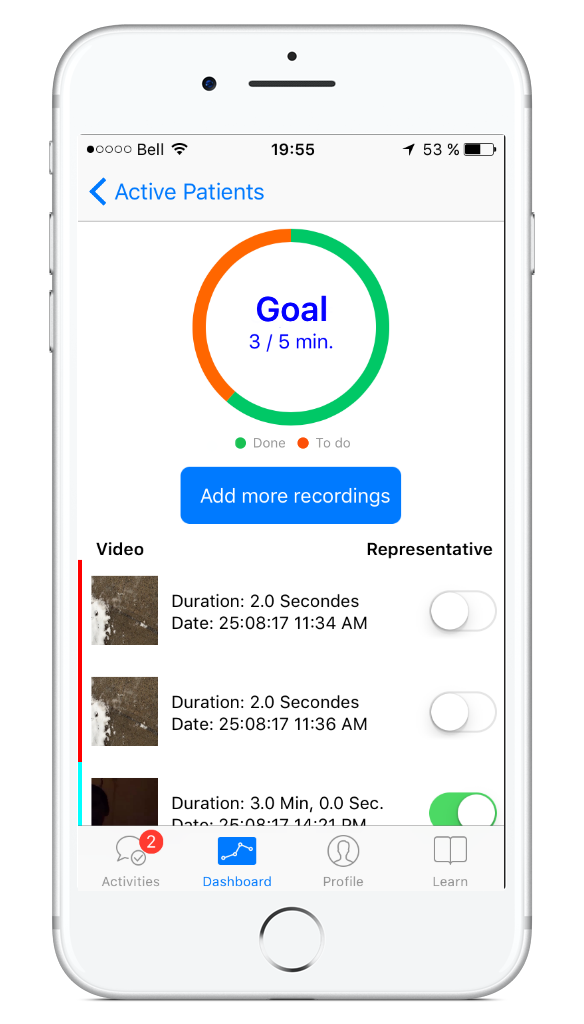

ELMo was developed in 2015 and piloted with the SLPs and patients at CHU Sainte-Justine Mother and Child University Hospital Center. We extended the open source ResearchKit framework with a custom active task, so we could record a video of the child’s speech-language at home. The ELMo app will also ask parents to confirm that the video recording is representative of their child’s normal speech-language.

We have also added a specific goal for participants to track and complete 3 to 5 minutes of video recordings before the first appointment, using notifications to remind them of their progress. All of the recordings are sent to a back-end server to be transcribed and statistically processed for the SLP. To support the SLP in this workflow, we also created an SLP dashboard that streamlines the assessment process. We have many plans to continue developing and improving the ELMo app. In the next iteration we will add CareKit modules, like the care plan, to help participants manage the different activities involved in their individual care.

- Kathy Malas, Speech-Language Pathologist, and Michel Bilodeau, IT architect and developer, GoELMo team

Oct 4, 2017

Cancer patients, survivors, and their caregivers frequently suffer from symptoms of post-traumatic stress disorder (PTSD) as a result of their cancer diagnosis and treatment. A team at Duke Health seeks to empower cancer patients, survivors, and their caregivers by putting tools and educational resources to help cope with these symptoms right in their hands. Cancer Distress Coach, a mobile app developed by Principal Investigator Sophia Smith (Duke School of Nursing) and mobile app developers Jamie Daniel and Mike Revoir at Duke Institute for Health Innovation, launched in June 2017.

Cancer Distress Coach is built using ResearchKit, an open source framework designed by Apple, and was adapted for Android using ResearchStack. Activities in the app include guided imagery, meditation exercises, inspirational quotes as well as music and photos. As participants complete the activities, they will learn more about their symptoms and available resources, better understand their levels of stress, build a network of support and gain new skills to help manage stress in the moment.

The app, which is currently available in the US for download on the App Store, is an expansion and redesign of an earlier app that Sophia Smith, Ph.D., associate professor of nursing at Duke, and a team of researchers developed in partnership with the National Center for PTSD and U.S. Department of Veterans Affairs. They tested their app in 2015-16 with 31 Duke cancer patients. Results from that study indicated that most participants (86 percent) found the app reduced their anxiety and provided practical solutions to PTSD symptoms.

Aguinita Aiken was one of those patients and says the app helped her overcome the panic attacks and fear of socializing, which she developed in the wake of her breast cancer diagnosis in late 2014.

“The app really helped me through my crisis,” Aiken said. “In particular, I found the resources that helped me calm down and do breathing exercises very helpful. I learned coping strategies and it was helpful to have reminders and encouragement to take care of myself.”

As users download and use Cancer Distress Coach, their experiences will help inform a national study to investigate the effectiveness of stress-reduction tools via mobile app. The researchers hope the app will one day be offered as a standard part of cancer care.

For inquiries about Cancer Distress Coach please contact Krista Whalen at krista.whalen@duke.edu

- Cancer Distress Coach Team